I’ve written a guide on how to perform this data analysis and generate the graph in the previous section. I’m using the dataset from the city of Barcelona to illustrate the different data analysis steps.

After downloading the listings.csv.gz files from InsideAirbnb, I opened them in Python without decompressing them. I’m using Polars for this project just to become familiar with its commands (you can use Pandas if you prefer):

import polars as pl

df=pl.read_csv('listings.csv.gz')

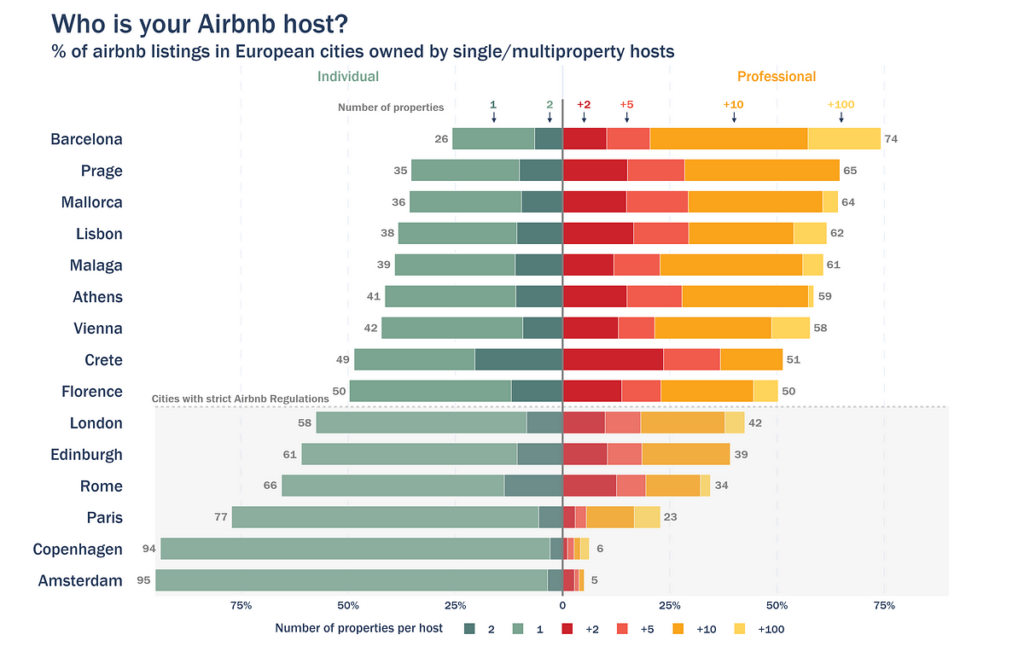

Here are the cities that I used for the analysis and the number of listings in each dataset:

If you like this packed bubble plot, make sure to check my last article:

A first look at the dataset shows what it looks like:

The content is based on the data available in each listing URL. It contains one row per listing and 75 columns that cover everything from description, neighbourhood and number of bedrooms to ratings, minimum number of nights and price.

As mentioned earlier, even though this dataset has endless potential, I’ll focus solely on multi-property ownership.

After downloading the data, there’s little data cleaning to do:

1- Filtering “property_type” to only “Entire rental unit” to filter out room listings.

2- Filtering “has_availability” to “t” (True) to remove non-active listings.

import polars as pl

#I renamed the listings.csv.gz file to cityname_listings.csv.gz.

df=pl.read_csv('barcelona_listings.csv.gz')

df=df.filter((pl.col('property_type')=="Entire rental unit")&(pl.col('has_availability')=="t"))

For data processing, I transformed the original data into a different structure that would allow me to quantify how many listings in the dataset are owned by the same host. Or, rephrased, what share of the city’s listings is owned by multi-property hosts. This is how I approached it:

- Performed a value_counts on the “host_id” column to count how many listings are owned by the same host id.

- Created six bins to quantify multi-property levels: 1 property, 2 properties, +2 properties, +5 properties, +10 properties and +100 properties.

- Performed a Polars cut to bin the count of listings per host_id (continuous value) into my discrete categories (bins).

host_count=df['host_id'].value_counts().sort('count')

breaks = [1,2,5,10,100]

labels = ['1','2','+2','+5','+10','+100']

host_count = host_count.with_columns(

    pl.col("count").cut(breaks=breaks, labels=labels, left_closed=False).alias("binned_counts")

)

host_count

This is the result: host_id, number of listings, and bin category. The data shown corresponds to the city of Barcelona.

Please take a moment to realise that host id 346367515 (last on the list) owns 406 listings. Is the Airbnb community feeling starting to look like an illusion at this point?

To get a city-wide view, independent of the host_id, I joined the host_count dataframe with the original df to match each listing to the correct multi-property label. Afterwards, all that’s left is a simple value_counts() on the property label to get the total number of listings that fall under each category.

I also added a “proportion” column, holding the percentage of listings under each label, to quantify its weight.

df=df.join(host_count,on='host_id',how='left')

graph_data=df['binned_counts'].value_counts().sort('binned_counts')

total_sum=graph_data['count'].sum()

graph_data=graph_data.with_columns(((pl.col('count')/total_sum)*100).round().cast(pl.Int32).alias('proportion'))

Don’t worry, I’m a visual person too. Here’s the graph representation of the table:

import plotly.express as px

palette=["#537c78","#7ba591","#cc222b","#f15b4c","#faa41b","#ffd45b"]

# I wrote the text_annotation manually because I like modifying the x position

text_annotation=['19%','7%','10%','10%','37%','17%']

text_annotation_xpos=[17,5,8,8,35,15]

text_annotation_ypos=[5,4,3,2,1,0]

annotations_text=[

dict(x=x,y=y,text=text,showarrow=False,font=dict(color="white",weight='bold',size=20))

for x,y,text in zip(text_annotation_xpos,text_annotation_ypos,text_annotation)

]

fig = px.bar(graph_data, x="proportion",y='binned_counts',orientation='h',color='binned_counts',

    color_discrete_sequence=palette,

    category_orders={"binned_counts": ["1", "2", "+2","+5","+10","+100"]}

)

fig.update_layout(

    height=700,

    width=1100,

    template='plotly_white',

    annotations=annotations_text,

    xaxis_title="% of listings",

    yaxis_title="Number of listings owned by the same host",

    title=dict(text="Prevalence of multi-property in Barcelona's airbnb listings<br><sup>% of airbnb listings in Barcelona owned by multiproperty hosts</sup>",font=dict(size=30)),

    font=dict(

        family="Franklin Gothic"),

    legend=dict(

        orientation='h',

        x=0.5,

        y=-0.125,

        xanchor='center',

        yanchor='bottom',

        title="Number of properties per host"

    ))

fig.update_yaxes(anchor='free',shift=-10,

    tickfont=dict(size=18,weight='normal'))

fig.show()

Back to the question at the start: how can I conclude that the Airbnb essence is lost in Barcelona?

- Most listings (64%) are owned by hosts with more than 5 properties. A significant 17% of listings are managed by hosts who own more than 100 properties.

- Only 26% of listings belong to hosts with just 1 or 2 properties.
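The two headline figures are simple sums over the bars. The sketch below assumes the annotation values in the plotting code map to the labels in the order 1, 2, +2, +5, +10, +100, which is what the conclusions above imply. The `shares` dictionary is written out by hand for illustration.

```python
# Percentage of Barcelona listings per label, taken from the chart annotations
# (assumed order: 1, 2, +2, +5, +10, +100).
shares = {"1": 19, "2": 7, "+2": 10, "+5": 10, "+10": 37, "+100": 17}

# Hosts with more than 5 properties: the "+5", "+10" and "+100" bins.
more_than_five = shares["+5"] + shares["+10"] + shares["+100"]

# Hosts with just 1 or 2 properties.
one_or_two = shares["1"] + shares["2"]

print(more_than_five, one_or_two, sum(shares.values()))
```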

If you wish to analyse more than one city at the same time, you can use a function that performs all the cleaning and processing at once:

import polars as pl

def airbnb_per_host(file,ptype,neighbourhood):

    df=pl.read_csv(file)

    # Keep active listings of the requested type, optionally within one neighbourhood group.
    if neighbourhood:

        df=df.filter((pl.col('property_type')==ptype)&(pl.col('neighbourhood_group_cleansed')==neighbourhood)&

                     (pl.col('has_availability')=="t"))

    else:

        df=df.filter((pl.col('property_type')==ptype)&(pl.col('has_availability')=="t"))

    # Count listings per host and bin the counts into multi-property labels.
    host_count=df['host_id'].value_counts().sort('count')

    breaks=[1,2,5,10,100]

    labels=['1','2','+2','+5','+10','+100']

    host_count = host_count.with_columns(

        pl.col("count").cut(breaks=breaks, labels=labels, left_closed=False).alias("binned_counts"))

    # Label every listing, then count listings per label and add the percentage.
    df=df.join(host_count,on='host_id',how='left')

    graph_data=df['binned_counts'].value_counts().sort('binned_counts')

    total_sum=graph_data['count'].sum()

    graph_data=graph_data.with_columns(((pl.col('count')/total_sum)*100).alias('proportion'))

    return graph_data

And then run it for every city in your folder:

import os

import glob

# please keep in mind that I renamed my files to: cityname_listings.csv.gz

df_combined = pl.DataFrame({

    "binned_counts": pl.Series(dtype=pl.Categorical),

    "count": pl.Series(dtype=pl.UInt32),

    "proportion": pl.Series(dtype=pl.Float64),

    "city": pl.Series(dtype=pl.String)

})

city_files = glob.glob("*.csv.gz")

for file in city_files:

    file_name=os.path.basename(file)

    city=file_name.split('_')[0]

    print('Scanning started for --->',city)

    data=airbnb_per_host(file,'Entire rental unit',None)

    data=data.with_columns(pl.lit(city.capitalize()).alias("city"))

    df_combined = pl.concat([df_combined, data], how="vertical")

print('Finished scanning of ' +str(len(city_files)) +' cities')
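One detail to watch in the loop is that taking the text before the first underscore only works for single-word city names. As a hedged alternative, the sketch below strips the whole `_listings.csv.gz` suffix instead. `city_from_filename` and `SUFFIX` are hypothetical names, and the file names are made up.

```python
import os

# Naming convention assumed from the article: cityname_listings.csv.gz
SUFFIX = "_listings.csv.gz"


def city_from_filename(path: str) -> str:
    """Return a display name for the city encoded in a listings file name."""
    name = os.path.basename(path)
    if not name.endswith(SUFFIX):
        raise ValueError(f"unexpected file name: {name}")
    # Drop the suffix, then turn remaining underscores into spaces.
    return name[: -len(SUFFIX)].replace("_", " ").title()


print(city_from_filename("data/barcelona_listings.csv.gz"))
print(city_from_filename("new_york_listings.csv.gz"))
```

With this helper a file such as `new_york_listings.csv.gz` would be labelled “New York” rather than “New”. For single-word cities the result matches what the loop already produces.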

Check out my GitHub repository for the code to build this graph, as it’s a bit too long to attach here:

And that’s it!

Let me know your thoughts in the comments and, in the meantime, I wish you a very fulfilling and authentic Airbnb experience on your next stay 😉

All images and code in this article are by the author.