What Did I Learn from Building LLM Applications in 2024? – Part 2

An engineer’s journey to building LLM-powered applications

In part 1 of this series, we discussed use case selection, building a team and the importance of creating a prototype early in your LLM-based product development journey. Let’s pick it up from there: if you are fairly satisfied with your prototype and ready to move forward, start by planning a development approach. It’s also important to decide on your productionizing strategy at an early phase.

With recent advancements in new models and a handful of SDKs on the market, it’s easy to feel the urge to build cool features such as agents into your LLM-powered application in the early phase. Let’s take a step back and decide on the must-have and nice-to-have features for your use case. Begin by identifying the core functionalities that are essential for your application to fulfill the primary business objectives. For instance, if your application is designed to provide customer support, the ability to understand and respond to user queries accurately would be a must-have feature. On the other hand, features like personalized recommendations might be considered nice-to-have and left for future scope.

Find your ‘fit’

If you want to build your solution from a concept or prototype, a top-down design model can work best. In this approach, you start with a high-level conceptual design of the application without going into much detail, and then take the separate components and develop each further. This design might not yield the best results at first, but it sets you up for an iterative approach, where you can improve and evaluate each component of the app and test the end-to-end solution in subsequent iterations.

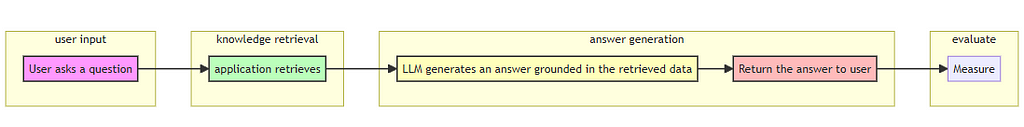

For an example of this design approach, we can consider a RAG (Retrieval Augmented Generation) based application. These applications typically have 2 high-level components: a retrieval component (which searches and retrieves relevant documents for the user query) and a generative component (which produces a grounded answer from the retrieved documents).

Scenario: Build a helpful assistant bot to diagnose and resolve technical issues by offering relevant solutions from a technical knowledge base containing troubleshooting guidelines.

STEP 1 – Build the conceptual prototype: Outline the overall architecture of the bot without going into much detail.

- Data Collection: Gather a sample dataset from the knowledge base, with questions and answers relevant to your domain.

- Retrieval Component: Implement a basic retrieval system using a simple keyword-based search, which can evolve into a more advanced vector-based search in future iterations.

- Generative Component: Integrate an LLM in this component and feed the retrieval results through the prompt to generate a grounded and contextual answer.

- Integration: Combine the retrieval and generative components to create an end-to-end flow.

- Execution: Identify the resources to run each component. For example, the retrieval component can be built using Azure AI Search, which offers both keyword-based and advanced vector-based retrieval mechanisms. LLMs from Azure AI Foundry can be used for the generation component. Finally, create an app to integrate these components.

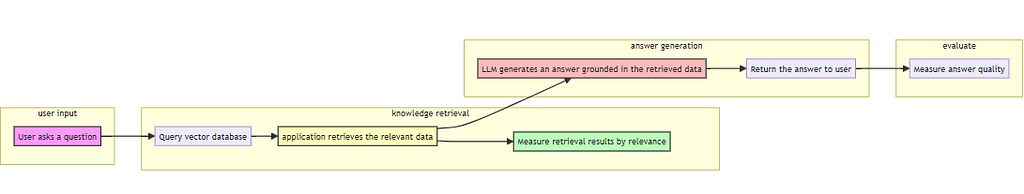
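To make STEP 1 concrete, here is a minimal sketch of the conceptual prototype: a keyword-overlap retriever plus a prompt builder that grounds the question in the retrieved text. Everything in it is an assumption for illustration: the three sample documents, the function names `keyword_search` and `build_prompt`, and the prompt wording are all hypothetical, and the resulting prompt would still need to be sent to whichever LLM client you choose.

```python
import re
from collections import Counter

# Hypothetical sample of the knowledge base: (title, troubleshooting text) pairs.
KNOWLEDGE_BASE = [
    ("Wi-Fi drops", "Restart the router and update the wireless driver."),
    ("Printer offline", "Check the USB cable and restart the print spooler service."),
    ("Slow laptop", "Close unused programs and check the disk for errors."),
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def keyword_search(query: str, top_k: int = 2) -> list[tuple[str, str]]:
    """Rank documents by how often the query tokens occur in them."""
    query_tokens = set(tokenize(query))
    scored = []
    for title, body in KNOWLEDGE_BASE:
        doc_counts = Counter(tokenize(title + " " + body))
        score = sum(doc_counts[token] for token in query_tokens)
        if score > 0:  # drop documents with no keyword overlap at all
            scored.append((score, title, body))
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(title, body) for _, title, body in scored[:top_k]]


def build_prompt(query: str, documents: list[tuple[str, str]]) -> str:
    """Put the retrieved documents into the prompt so the answer stays grounded."""
    context = "\n".join(f"- {title}: {body}" for title, body in documents)
    return (
        "Answer the question using only the context below. "
        "If the context is not sufficient, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


if __name__ == "__main__":
    question = "My printer is offline"
    print(build_prompt(question, keyword_search(question)))
```

The scoring here is deliberately naive. Because the retriever sits behind a single function, it can later be swapped for a vector-based search without touching the prompt-building code.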

STEP 2 – Improve Retrieval Component: Start exploring how each component can be improved further. For a RAG-based solution, the quality of retrieval has to be exceptionally good to ensure that the most relevant and accurate information is retrieved, which in turn enables the generation component to produce a contextually appropriate response for the end user.

- Set up data ingestion: Set up a data pipeline to ingest the knowledge base into your retrieval component. This step should also cover preprocessing the data to remove noise, extract key information, process images, etc.

- Use a vector database: Upgrade to a vector database to enhance the system for more contextual retrieval. Pre-process the data further by splitting text into chunks and generating embeddings for each chunk using an embedding model. The vector database should have functionalities for adding and deleting data and querying with vectors for easy integration.

- Evaluation: The selection and rank of documents in the retrieval results is crucial, as it heavily impacts the next step of the solution. While precision and recall give a fairly good idea of the search results’ accuracy, you can also consider using MRR (mean reciprocal rank) or NDCG (normalized discounted cumulative gain) to assess the ranking of the documents in the retrieval results. Contextual relevancy determines whether the document chunks are relevant to producing the ideal answer for a user input.

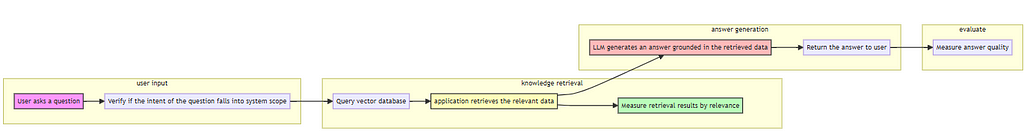
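The retrieval metrics mentioned in STEP 2 are simple enough to compute by hand, which helps when sanity-checking a framework’s numbers. The sketch below uses the standard definitions of precision@k, recall@k, MRR and NDCG@k (with linear gain); the function names and the document ids in the usage lines are made up for illustration.

```python
import math


def precision_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Share of the top-k retrieved documents that are relevant."""
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / k if k > 0 else 0.0


def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Share of all relevant documents that appear in the top-k results."""
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / len(relevant) if relevant else 0.0


def reciprocal_rank(retrieved: list[str], relevant: set[str]) -> float:
    """1 / rank of the first relevant document, or 0 if none was retrieved."""
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(runs: list[tuple[list[str], set[str]]]) -> float:
    """Average reciprocal rank over (retrieved, relevant) pairs, one per query."""
    if not runs:
        return 0.0
    return sum(reciprocal_rank(ret, rel) for ret, rel in runs) / len(runs)


def ndcg_at_k(retrieved: list[str], relevance: dict[str, float], k: int) -> float:
    """DCG of the actual ranking divided by the DCG of the ideal ranking."""
    dcg = sum(
        relevance.get(doc_id, 0.0) / math.log2(rank + 1)
        for rank, doc_id in enumerate(retrieved[:k], start=1)
    )
    ideal_gains = sorted(relevance.values(), reverse=True)[:k]
    idcg = sum(
        gain / math.log2(rank + 1) for rank, gain in enumerate(ideal_gains, start=1)
    )
    return dcg / idcg if idcg > 0 else 0.0


if __name__ == "__main__":
    retrieved = ["doc_b", "doc_a", "doc_c"]
    print(mean_reciprocal_rank([(retrieved, {"doc_a"})]))
    print(ndcg_at_k(retrieved, {"doc_a": 1.0}, k=3))
```

MRR only looks at the first relevant hit, so it suits question answering where one good chunk is enough. NDCG rewards placing every relevant chunk near the top, which matters more when several chunks are fed to the LLM.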

STEP 3 – Enhance Generative Component to produce more relevant and better output:

- Intent filter: Filter out questions that don’t fall into the scope of your knowledge base. This step can also be used to block unwanted and offensive prompts.

- Modify prompt and context: Improve your prompts, e.g. by including few-shot examples, clear instructions, response structure, etc., to tune the LLM output to your needs. Also feed the conversation history to the LLM in each turn to maintain context for a user chat session. If you want the model to invoke tools or functions, put clear instructions and annotations in the prompt. Apply version control to prompts in each iteration of the experiment phase for change tracking. This also helps you roll back in case your system’s behavior degrades after a release.

- Capture the reasoning of the model: Some applications use an additional step to capture the rationale behind the output generated by LLMs. This is useful for inspection when the model produces unpredictable output.

- Evaluation: For the answers produced by a RAG-based system, it is important to measure a) the factual consistency of the answer against the context provided by the retrieval component and b) how relevant the answer is to the query. During the MVP phase, we usually test with a few inputs. However, while developing for production, we should carry out evaluation against an extensive ground truth or golden dataset created from the knowledge base in each step of the experiment. It’s better if the ground truth contains as many real-world examples as possible (common questions from the target consumers of the system). If you’re looking to implement an evaluation framework, have a look here.
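To show the shape of such an evaluation run, here is a rough sketch that scores each record of a golden dataset on the two dimensions above and averages the results. The scoring is a crude lexical stand-in that I am assuming purely for illustration: it counts shared words, whereas a real setup would typically use an LLM-as-judge or an evaluation framework. The `EvalRecord` fields and function names are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class EvalRecord:
    """One golden-dataset question with what the application produced for it."""
    question: str
    retrieved_context: str
    generated_answer: str


def _tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def groundedness(answer: str, context: str) -> float:
    """Proxy for factual consistency: share of answer words found in the context."""
    answer_tokens = _tokens(answer)
    if not answer_tokens:
        return 0.0
    return len(answer_tokens & _tokens(context)) / len(answer_tokens)


def answer_relevance(answer: str, question: str) -> float:
    """Proxy for relevance: share of question words that the answer mentions."""
    question_tokens = _tokens(question)
    if not question_tokens:
        return 0.0
    return len(question_tokens & _tokens(answer)) / len(question_tokens)


def evaluate(records: list[EvalRecord]) -> dict[str, float]:
    """Average both scores over the whole golden dataset."""
    if not records:
        return {"groundedness": 0.0, "answer_relevance": 0.0}
    count = len(records)
    return {
        "groundedness": sum(
            groundedness(r.generated_answer, r.retrieved_context) for r in records
        ) / count,
        "answer_relevance": sum(
            answer_relevance(r.generated_answer, r.question) for r in records
        ) / count,
    }
```

The useful part is the structure rather than the scoring: one record per golden question, one function per metric, and one aggregate dictionary that can be tracked from iteration to iteration.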

On the other hand, let’s consider another scenario where you’re integrating AI into a business process. Consider an online retail company’s call center transcripts, for which summaries and sentiment analysis need to be generated and added to a weekly report. To develop this, start by understanding the existing system and the gap AI is trying to fill. Next, start designing low-level components, keeping system integration in mind. This can be considered a bottom-up design, as each component can be developed separately and then integrated into the final system.

- Data collection and pre-processing: Considering confidentiality and the presence of personal data in the transcripts, redact or anonymize the data as needed. Use a speech-to-text model to convert audio into text.

- Summarization: Experiment and choose between extractive summarization (selecting key sentences) and abstractive summarization (new sentences that convey the same meaning) depending on what the final report needs. Start with a simple prompt, and take user feedback to further improve the accuracy and relevance of the generated summary.

- Sentiment analysis: Use domain-specific few-shot examples and prompt tuning to increase accuracy in detecting sentiment from transcripts. Instructing the LLM to provide reasoning can help to enhance the output quality.

- Report generation: Use a reporting tool like Power BI to bring the output from the previous components together.

- Evaluation: Apply the same principles of an iterative evaluation process with metrics for the LLM-dependent components.
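As an illustration of the first and third components, the sketch below redacts obvious personal data from a transcript and then wraps it in a few-shot sentiment prompt. The regular expressions are a simplification I am assuming for the example: they only catch e-mail addresses and phone-like digit runs, so a production system would need a proper PII detection step. The few-shot examples and function names are invented.

```python
import re

# Simplified patterns: e-mail addresses and phone-like runs of digits.
EMAIL_PATTERN = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
PHONE_PATTERN = re.compile(r"(?<!\w)\+?\d[\d\s().-]{7,}\d(?!\w)")

# Hypothetical domain-specific examples for an online retailer's call center.
FEW_SHOT_EXAMPLES = [
    ("The replacement arrived in two days, thank you so much!", "positive"),
    ("I have been on hold for an hour and nobody can find my order.", "negative"),
    ("I would like to know the delivery date for my order.", "neutral"),
]


def redact(transcript: str) -> str:
    """Replace e-mail addresses and phone numbers with placeholders."""
    transcript = EMAIL_PATTERN.sub("[EMAIL]", transcript)
    return PHONE_PATTERN.sub("[PHONE]", transcript)


def build_sentiment_prompt(transcript: str) -> str:
    """Build a few-shot prompt that asks for a label plus a short reason."""
    shots = "\n\n".join(
        f"Transcript: {text}\nSentiment: {label}" for text, label in FEW_SHOT_EXAMPLES
    )
    return (
        "Classify the sentiment of the call transcript as positive, negative or "
        "neutral, and give a one-sentence reason.\n\n"
        f"{shots}\n\nTranscript: {redact(transcript)}\nSentiment:"
    )


if __name__ == "__main__":
    print(build_sentiment_prompt(
        "Call me at +1 (555) 123-4567 or mail jane.doe@example.com about my refund."
    ))
```

Redacting before the prompt is built means personal data never leaves your system, regardless of which LLM provider sits behind the sentiment component.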

This design also helps to catch issues early at each component level, so they can be addressed without changing the overall design. It also enables AI-driven innovation in existing legacy systems.

LLM application development doesn’t follow a one-size-fits-all approach. Most of the time you need a quick win to validate whether the current approach is bringing value or shows potential to meet expectations. While building a new AI-native system from scratch sounds more promising for the future, integrating AI into existing business processes, even in a small capacity, can bring a lot of efficiency. Choosing either of these depends on your organization’s resources, readiness to adopt AI and long-term vision. It is critical to consider the trade-offs and create a realistic strategy to generate long-term value in this area.

Ensuring quality through an automated evaluation process

The success of an LLM-based application depends on an iterative process of evaluating the outcome from the application. This process usually starts with choosing relevant metrics for your use case and gathering real-world examples for a ground truth or golden dataset. As your application grows from MVP to product, it is recommended to set up a CI/CE/CD (Continuous Integration/Continuous Evaluation/Continuous Deployment) process to standardize and automate the evaluation process and the calculation of metric scores. This automation has also been called LLMOps in recent times, derived from MLOps. Tools like PromptFlow, Vertex AI Studio, LangSmith, etc. provide the platform and SDKs for automating the evaluation process.
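One simple way to wire continuous evaluation into a pipeline is a quality gate: after the evaluation job computes its metric scores, a small script compares them with agreed minimums and fails the build if any metric regresses. The sketch below assumes the scores arrive as a plain dictionary; the metric names and thresholds are placeholders, and none of this is tied to a specific LLMOps tool.

```python
def find_gate_failures(
    scores: dict[str, float], thresholds: dict[str, float]
) -> list[str]:
    """List every metric that is missing or falls below its minimum."""
    failures = []
    for metric, minimum in thresholds.items():
        value = scores.get(metric)
        if value is None:
            failures.append(f"{metric}: missing from evaluation results")
        elif value < minimum:
            failures.append(
                f"{metric}: {value:.2f} is below the minimum {minimum:.2f}"
            )
    return failures


def gate_exit_code(scores: dict[str, float], thresholds: dict[str, float]) -> int:
    """Return 0 when all metrics pass and 1 otherwise, for use as an exit code."""
    failures = find_gate_failures(scores, thresholds)
    for failure in failures:
        print("QUALITY GATE FAILED -", failure)
    return 1 if failures else 0


if __name__ == "__main__":
    # Placeholder numbers; in a pipeline these come from the evaluation job.
    thresholds = {"groundedness": 0.80, "mrr": 0.60}
    scores = {"groundedness": 0.91, "mrr": 0.55}
    print("exit code:", gate_exit_code(scores, thresholds))
```

In a CI job, the returned value would be passed to `sys.exit` so that a failed gate blocks the deployment step.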

Evaluating LLMs and LLM-based applications is not the same

Usually an LLM is put through standard benchmark evaluations before it is released. However, that does not guarantee that your LLM-powered application will always perform as expected. A RAG-based system in particular, which uses document retrieval and prompt engineering steps to generate output, should be evaluated against a domain-specific, real-world dataset to gauge its performance.

For an in-depth exploration of evaluation metrics for various types of use cases, I recommend this article.

How to choose the right LLM?

Several factors drive this decision for a product team.

1. Model capability: Determine your model needs from the type of problem you’re solving in your LLM product. For example, consider these 2 use cases:

#1 A chatbot for an online retail shop handles product enquiries through text and images. A model with multi-modal capabilities and lower latency should be able to handle the workload.

#2 On the other hand, consider a developer productivity solution that needs a model to generate and debug code snippets. Here you require a model with advanced reasoning that can produce highly accurate output.

2. Cost and licensing: Prices vary based on several factors such as model complexity, input size, input type, and latency requirements. Popular LLMs like OpenAI’s models charge a fixed price per 1M or 1K tokens, which can scale significantly with usage. Models with advanced logical reasoning capability usually cost more: for example, OpenAI’s o1 model costs $15.00 / 1M input tokens, compared to GPT-4o, which costs $2.50 / 1M input tokens. Additionally, if you want to sell your LLM product, make sure to check the commercial licensing terms of the LLM. Some models may have restrictions or require specific licenses for commercial use.

3. Context Window Length: This becomes important for use cases where the model needs to process a large amount of data in the prompt at once. The data can be document extracts, conversation history, function call results, etc.

4. Speed: Use cases like the chatbot for an online retail shop need to generate output very fast, so a model with lower latency is crucial in this scenario. UX improvements also help: streaming responses, for example, render the output chunk by chunk, providing a better experience for the user.

5. Integration with existing system: Ensure that the LLM provider can be seamlessly integrated with your existing systems. This includes compatibility with APIs, SDKs, and other tools you are using.

Choosing a model for production often involves balancing trade-offs. It’s important to experiment with different models early in the development cycle and to set not only use-case specific evaluation metrics, but also performance and cost as benchmarks for comparison.
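To see how quickly the cost difference adds up, here is a back-of-the-envelope calculation using the two input-token prices quoted above. The traffic figures (10,000 requests per day, 1,500 input tokens per request) are assumptions for the example, output tokens are ignored because no output prices are quoted here, and the prices themselves change over time, so treat the numbers as illustrative only.

```python
# Input prices in USD per 1M tokens, as quoted in the cost section above.
INPUT_PRICE_PER_1M_USD = {"o1": 15.00, "gpt-4o": 2.50}


def monthly_input_cost(
    model: str, requests_per_day: int, avg_input_tokens: int, days: int = 30
) -> float:
    """Estimate the monthly spend on input tokens for one model."""
    total_tokens = requests_per_day * avg_input_tokens * days
    return total_tokens / 1_000_000 * INPUT_PRICE_PER_1M_USD[model]


if __name__ == "__main__":
    # Assumed workload: 10,000 requests/day with 1,500 input tokens each.
    for model in INPUT_PRICE_PER_1M_USD:
        cost = monthly_input_cost(model, requests_per_day=10_000, avg_input_tokens=1_500)
        print(f"{model}: ${cost:,.2f} per month (input tokens only)")
```

With this assumed workload the monthly volume is 450M input tokens, which comes to $1,125 on GPT-4o and $6,750 on o1. A 6x gap like this is worth weighing against the accuracy gain measured in your own evaluation runs.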

Responsible AI

The ethical use of LLMs is crucial to ensure that these technologies benefit society while minimizing potential harm. A product team must prioritize transparency, fairness, and accountability in their LLM application.

For example, consider an LLM-based system being used in healthcare facilities to help doctors diagnose and treat patients more efficiently. The system must not misuse patients’ personal data, e.g. medical history, symptoms, etc. The results from the application should also be transparent and include the reasoning behind any suggestion it generates. It should not be biased or discriminatory towards any group of people.

While evaluating the output quality of the LLM-driven components in each iteration, make sure to look out for potential risks such as harmful content, biases, hate speech, etc. Red teaming, a concept from cybersecurity, has recently emerged as a best practice to uncover risks and vulnerabilities. During this exercise, red teamers attempt to ‘trick’ the models into generating harmful or unwanted content by using various prompting strategies. This is followed by both automated and manual review of flagged outputs to decide on a mitigation strategy. As your product evolves, at each stage you can instruct red teamers to test different LLM-driven components of your app as well as the entire application as a whole, to make sure every aspect is covered.

Make it all ready for Production

In the end, an LLM application is a product, and we can use common principles to optimize it further before deploying it to a production environment.

- Logging and monitoring will help you capture token usage, latency, any issues on the LLM provider side, application performance, etc. You can check the usage trends of your product, which provide insights into the LLM product’s effectiveness, usage spikes and cost management. Additionally, setting up alerts for unusual spikes in usage can prevent budget overruns. By analyzing usage patterns and recurring cost, you can scale your infrastructure and adjust or update the model quota accordingly.

- Caching can store LLM outputs, reducing the token usage and eventually the cost. Caching also helps with consistency in generative output, and reduces latency for user-facing applications. However, since LLM applications do not have a fixed set of inputs, cache storage can grow very quickly in some scenarios, such as chatbots, where each differently worded user input might need to be cached even if the expected answer is the same. This can lead to significant storage overhead. To address this, the concept of semantic caching has been introduced. In semantic caching, similar prompt inputs are grouped together based on their meaning using an embedding model. This approach helps in managing the cache storage more efficiently.

- Collecting user feedback ensures that the AI-enabled application can serve its purpose in a better way. If possible, try to gather feedback from a set of pilot users in each iteration so that you can gauge whether the product is meeting expectations and which areas require further improvement. For example, an LLM-powered chatbot can be updated to support more languages and as a result attract more diverse users. With new capabilities of LLMs being released very frequently, there’s a lot of potential to improve the capabilities and add new features quickly.
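The idea of semantic caching can be sketched in a few lines. In this simplified version a bag-of-words vector stands in for a real embedding model, which is an assumption made only to keep the example self-contained: a word-count vector matches reworded prompts far less reliably than learned embeddings do. The class name, the 0.8 similarity threshold and the sample prompts are all hypothetical.

```python
import math
import re
from collections import Counter
from typing import Callable, Optional


def toy_embed(text: str) -> Counter:
    """Stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SemanticCache:
    """Returns a cached response when a new prompt is close enough to an old one."""

    def __init__(
        self, threshold: float = 0.8, embed: Callable[[str], Counter] = toy_embed
    ) -> None:
        self.threshold = threshold
        self.embed = embed
        self.entries: list[tuple[Counter, str]] = []

    def put(self, prompt: str, response: str) -> None:
        self.entries.append((self.embed(prompt), response))

    def get(self, prompt: str) -> Optional[str]:
        """Return the response of the most similar cached prompt, if any qualifies."""
        query = self.embed(prompt)
        best_score, best_response = 0.0, None
        for vector, response in self.entries:
            score = cosine_similarity(query, vector)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None


if __name__ == "__main__":
    cache = SemanticCache()
    cache.put("How do I reset my password?", "Use the reset link on the login page.")
    print(cache.get("how do I reset my password"))  # same words, so a cache hit
    print(cache.get("What is the refund policy?"))  # unrelated, so a cache miss
```

The threshold is the main tuning knob: set it too low and users get answers to questions they did not ask, set it too high and the cache degrades into exact matching. The linear scan over entries is fine for a sketch, but a vector index would replace it at scale.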

Conclusion

Good luck with your journey in building LLM-powered apps! There are numerous advancements and endless possibilities in this field. Organizations are adopting generative AI for a wide array of use cases. As with any other product, develop your AI-enabled application keeping the business objectives in mind. For products like chatbots, end user satisfaction is everything. Embrace the challenges: if a particular scenario doesn’t work out today, don’t give up, because tomorrow it may work out with a different approach or a new model. Learning and staying up-to-date with AI advancements are the key to building effective AI-powered products.

Follow me if you want to read more such content about new and exciting technology. If you have any feedback, please leave a comment. Thanks 🙂

What Did I Learn from Building LLM Applications in 2024? – Part 2 was originally published in Towards Data Science on Medium.