Until recently, an “AI business” referred exclusively to companies like OpenAI that developed large language models (LLMs) and related machine learning solutions. Now, any business, often a traditional one, can be considered an “AI business” if it harnesses AI for automation and workflow refinement. But not every company knows where to begin this transition.

As a tech startup CEO, I want to discuss how you can integrate AI into your business and overcome a major hurdle: customizing a third-party LLM to create a suitable AI solution tailored to your specific needs. As a former CTO who has collaborated with people from many fields, I’ve set an additional goal of laying it out in a way that non-engineers can easily understand.

Integrate AI to streamline your business and customize offerings

Since every business interacts with clients, customer- or partner-facing roles are a universal aspect of commerce. These roles involve handling data, whether you’re selling tires, managing a warehouse, or organizing global travel like I do. Swift and accurate responses are crucial. You must provide the right information quickly, using the most relevant resources both from within your business and from your broader market. This involves dealing with a vast array of data.

This is where AI excels. It stays “on duty” continuously, processing data and making calculations instantaneously. AI embedded in business operations can take different forms, from “visible” AI assistants like talking chatbots (the main focus of this article) to “invisible” ones like the silent filters powering e-commerce websites, including ranking algorithms and recommender systems.

Consider the traveltech industry. A customer wants to book a trip to Europe, and they want to know:

- the best airfare deals

- the ideal travel season for nice weather

- cities that feature museums with Renaissance art

- hotels that offer vegetarian options and a tennis court nearby

Before AI, responding to these queries would have involved processing each subquery separately and then cross-referencing the results by hand. Now, with an AI-powered solution, my team and I can handle all these requests simultaneously and with lightning speed. This isn’t about my business though: the same holds true for almost every industry. So, if you want to optimize costs and bolster your performance, switching to AI is inevitable.

Fine-tune your AI model to focus on specific business needs

You might be wondering, “This sounds great, but how do I integrate AI into my operations?” Fortunately, today’s market offers a variety of commercially available LLMs, depending on your preferences and target region: ChatGPT, Claude, Grok, Gemini, Mistral, ERNIE, and YandexGPT, just to name a few. Once you’ve found one you like (ideally one that’s open-sourced, like Llama), the next step is fine-tuning.

In a nutshell, fine-tuning is the process of improving a pretrained AI model from an upstream provider, such as Meta, for a specific downstream application, i.e., your business. This means taking a model and “adjusting it” to fit more narrowly defined needs. Put simply, fine-tuning doesn’t actually add more data; instead, you assign greater “weights” to certain parts of the existing dataset, effectively telling the AI model, “This is important, this isn’t.”

Let’s say you’re running a bar and want to create an AI assistant to help bartenders mix cocktails or train new staff. The word “punch” will appear in your raw AI model, but it has several common meanings. However, in your case, “punch” refers specifically to the mixed drink. So, fine-tuning would be teaching your model to ignore references to MMA when it encounters the word “punch.”
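In practice, this kind of “adjusting” is usually driven by a small set of example questions and preferred answers. The sketch below shows one way such examples might be prepared for the bar scenario. The chat-style record layout, the `SYSTEM_PROMPT` text, and the sample answers are all illustrative assumptions; the exact file format depends on the provider or training toolkit you choose.

```python
import json

# Hypothetical bar-domain examples: each pairs a question with the answer
# we would like the assistant to prefer after fine-tuning.
BAR_EXAMPLES = [
    ("What goes into a classic punch?",
     "A classic punch combines spirit, citrus, sugar, water or tea, and spice."),
    ("How do I scale punch for 20 guests?",
     "Multiply the single-serving recipe by 20 and serve it chilled in a large bowl."),
]

# Assumed instruction that pins down the domain meaning of the word.
SYSTEM_PROMPT = "You are a bar assistant. 'Punch' always means the mixed drink."


def to_chat_record(question: str, answer: str) -> dict:
    """Wrap one question/answer pair in a chat-style training record."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(pairs) -> str:
    """Serialize all pairs as JSON Lines: one training record per line."""
    return "\n".join(json.dumps(to_chat_record(q, a)) for q, a in pairs)


if __name__ == "__main__":
    print(to_jsonl(BAR_EXAMPLES))
```

A real training set would contain far more examples than this, written or reviewed by someone who knows the bar trade.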

Implement RAG to make use of the latest data

That said, even a well-fine-tuned model isn’t enough, because most businesses need new data regularly. Suppose you’re building an AI assistant for a dentistry practice. During fine-tuning, you explained to the model that “bridge” refers to dental restoration, not civic architecture or the card game. So far, so good. But how do you get your AI assistant to incorporate information that only emerged in a research piece published last week? What you need to do is feed new data into your AI model, a process known as retrieval-augmented generation (RAG).

RAG involves taking data from an external source, beyond the pretrained LLM you’re using, and updating your AI solution with this new information. Let’s say you’re creating an AI assistant to help a client, a professional analyst, in financial consulting or auditing. Your AI chatbot needs to be updated with the latest quarterly statements. This specific, recently released data would be your RAG source.
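The retrieve-then-answer idea can be sketched in a few lines. This is a deliberately simplified illustration, not a production design: the documents are invented, retrieval is plain word overlap, and the final call to an LLM is left out, since it depends on the model you choose. The names `DOCUMENTS`, `retrieve`, and `build_prompt` are hypothetical.

```python
import re

# Invented, freshly released documents that the pretrained model never saw.
DOCUMENTS = [
    "Q3 statement: revenue rose 8 percent, driven by overseas projects.",
    "Q3 statement: monthly payments on derivative contracts totaled 2 million.",
    "Office notice: the cafeteria menu changes next week.",
]


def tokenize(text: str) -> set:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list, top_k: int = 1) -> list:
    """Return the top_k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, documents: list) -> str:
    """Prepend the retrieved context to the user's question."""
    context = "\n".join(retrieve(query, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    question = "What were the monthly payments on derivative contracts?"
    print(build_prompt(question, DOCUMENTS))
```

The prompt that comes out contains the quarterly statement the model needs, which is the whole point of RAG: the model answers from data it was never trained on.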

It’s important to note that using RAG doesn’t eliminate the need for fine-tuning. Indeed, RAG without fine-tuning could work for a Q&A system that relies exclusively on external data, for example an AI chatbot that lists NBA stats from past seasons. On the other hand, a fine-tuned AI chatbot may prove sufficient without RAG for tasks like PDF summarization, which are usually a lot less domain-specific. However, in most cases, a customer-facing AI chatbot or a robust AI assistant tailored to your team’s needs will require a combination of both processes.

Move away from vectors for RAG data extraction

The primary challenge for anyone looking to utilize RAG is figuring out how to prepare their new data source effectively. When a user query is made, your domain-specific AI chatbot retrieves information from the data source. The relevance of this information depends on what kind of data you extracted during preprocessing. So, while RAG will always provide your AI chatbot with external data, the quality of its responses is subject to your planning.

Preparing your external data source means extracting just the right information and not feeding your model redundant or conflicting information that could compromise the AI assistant’s output accuracy. Going back to the fintech example, if you’re interested in parameters like funds invested in overseas projects or monthly payments on derivative contracts, you shouldn’t clutter RAG with unrelated data, such as social security payments.

If you ask ML engineers how to achieve this, most are likely to mention the “vector” method. Although vectors are useful, they have two major drawbacks: the multi-stage process is highly complex, and it ultimately fails to deliver great accuracy.

If you feel confused by the image above, you’re not alone. Being a purely technical, non-linguistic method, the vector route attempts to use sophisticated tools to segment large documents into smaller pieces. This often (always) results in a loss of intricate semantic relationships and a diminished grasp of language context.

Suppose you’re involved in the automotive supply chain, and you need specific tire sales figures for the Pacific Northwest. Your data source, the latest industry reports, contains national data. Because of how vectors work, you might end up extracting irrelevant data, such as New England figures. Alternatively, you might end up extracting related but not exactly the right data from your target region, such as hubcap sales. In other words, your extracted data will likely be relevant but imprecise. Your AI assistant’s performance will be affected accordingly when it retrieves this data during user queries, leading to erroneous or incomplete responses.
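A toy version of the tire example shows how this can happen. The sketch below is an assumption-laden simplification: the report text and its figures are made up, chunks are cut every six words, and a word-count vector stands in for the learned embeddings that real vector databases use. Only the mechanics of “chunk, embed, rank by similarity” are meant to carry over.

```python
import math
import re
from collections import Counter

# Made-up report text; the figures are placeholders, not real sales data.
REPORT = (
    "Pacific Northwest region. Tire sales reached 120 units. "
    "Hubcap sales reached 300 units. New England region. "
    "Tire sales reached 450 units. Hubcap sales reached 90 units."
)


def chunk_words(text: str, size: int) -> list:
    """Split text into consecutive chunks of `size` words, ignoring meaning."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy 'embedding': a word-count vector (real systems use learned vectors)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query: str, chunks: list, top_k: int = 2) -> list:
    """Return the top_k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:top_k]


if __name__ == "__main__":
    chunks = chunk_words(REPORT, size=6)
    for hit in search("tire sales Pacific Northwest", chunks):
        print(hit)
```

With these settings, the best match is the Pacific Northwest heading cut off before its figure, and the runner-up is a New England fragment. The chunk that actually holds the Pacific Northwest tire figure mentions neither the region nor tires, so it ranks lower.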

Create knowledge maps for better RAG navigation

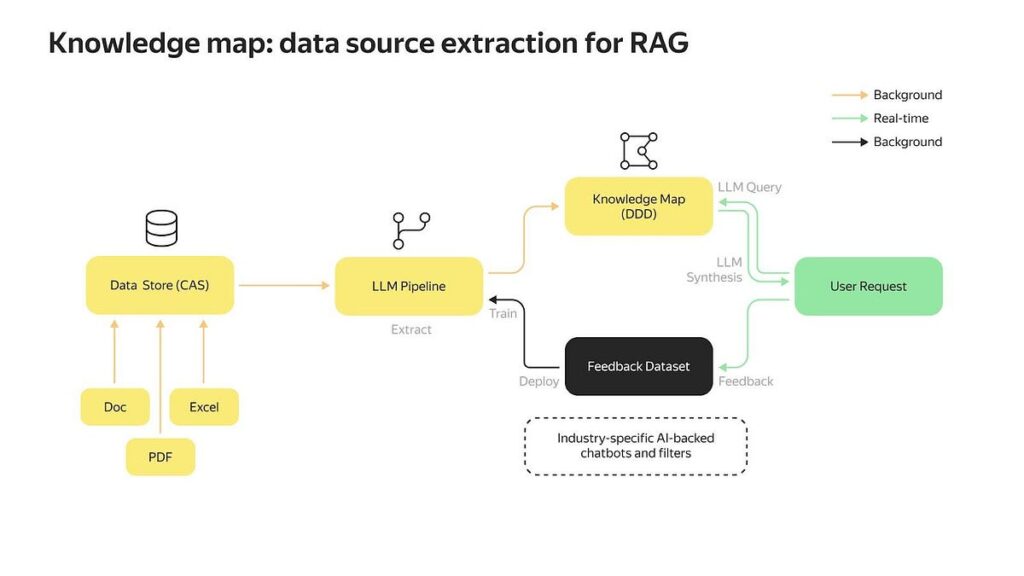

Luckily, there’s now a newer and more straightforward method, knowledge maps, which is already being implemented by reputable tech companies like CLOVA X and Trustbit*. Using knowledge maps reduces RAG contamination during data extraction, resulting in more structured retrieval during live user queries.

A knowledge map for business is similar to a driving map. Just as a detailed map leads to a smoother journey, a knowledge map improves data extraction by charting out all essential information. This is achieved with the help of domain experts, in-house or external, who are intimately familiar with the specifics of your industry.

Once you’ve developed this “what’s there to know” blueprint of your business landscape, integrating a knowledge map ensures that your updated AI assistant will reference this map when searching for answers. For instance, to prepare an LLM for oil-industry-specific RAG, domain experts might outline the molecular differences between the newest synthetic diesel and traditional petroleum diesel. With this knowledge map, the extraction process for RAG becomes more targeted, enhancing the accuracy and relevance of the Q&A chatbot during real-time data retrieval.

Crucially, unlike vector-based RAG systems that just store data as numbers and can’t learn or adapt, a knowledge map allows for ongoing in-the-loop improvements. Think of it as having a dynamic, editable system that gets better through feedback the more you use it. This is akin to performers who refine their acts based on audience reactions to ensure each show is better than the last. This means your AI system’s capability will evolve continually as business demands change and new benchmarks are set.
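To make the idea concrete, here is a minimal sketch of what a knowledge map might look like in code, reusing the tire example from earlier. Real implementations vary widely and this is not how any particular company builds theirs: the `KnowledgeMap` class, its fields, and the sample facts are all hypothetical. The point is only that experts define the categories up front, lookups are exact rather than approximate, and corrections are simple edits.

```python
from dataclasses import dataclass, field


@dataclass
class KnowledgeMap:
    """Hypothetical knowledge map: expert-defined topics mapped to vetted facts."""

    facts: dict = field(default_factory=dict)    # (region, product) -> fact text
    aliases: dict = field(default_factory=dict)  # user wording -> canonical term

    def canonical(self, term: str) -> str:
        """Map a user's wording to the expert's canonical term."""
        key = term.lower()
        return self.aliases.get(key, key)

    def add_fact(self, region: str, product: str, fact: str) -> None:
        """Add a fact, or overwrite an existing one after expert feedback."""
        self.facts[(self.canonical(region), self.canonical(product))] = fact

    def lookup(self, region: str, product: str):
        """Return the exact fact for this region and product, or None."""
        return self.facts.get((self.canonical(region), self.canonical(product)))


if __name__ == "__main__":
    # Placeholder aliases and figures, chosen only for illustration.
    kmap = KnowledgeMap(aliases={"pnw": "pacific northwest", "tyres": "tires"})
    kmap.add_fact("Pacific Northwest", "tires", "Tire sales reached 120 units.")
    kmap.add_fact("New England", "tires", "Tire sales reached 450 units.")

    print(kmap.lookup("PNW", "tyres"))                   # exact regional match
    print(kmap.lookup("Pacific Northwest", "hubcaps"))   # None: no near-miss

    # Feedback loop: an expert corrects the entry and later lookups reflect it.
    kmap.add_fact("PNW", "tires", "Tire sales reached 125 units (revised).")
    print(kmap.lookup("Pacific Northwest", "tires"))
```

Because the lookup either finds the exact entry or returns nothing, a question about hubcaps can’t silently pull in tire figures, and a correction from a domain expert takes effect on the next query.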

Key takeaway

If your business aims to streamline workflow and optimize processes by leveraging industry-relevant AI, it’s essential to go beyond mere fine-tuning.

As we’ve already seen, with few exceptions, a robust AI assistant, whether it’s serving customers or employees, can’t function effectively without fresh data from RAG. To ensure high-quality data extraction and effective RAG implementation, companies should create domain-specific knowledge maps instead of relying on the more ubiquitous numerical vector databases.

While this article may not answer all your questions, I hope it will steer you in the right direction. I encourage you to discuss these strategies with your teammates to consider further steps.

*How We Build Better RAG Systems With Knowledge Maps, Trustbit, https://www.trustbit.tech/en/wie-wir-mit-knowledge-maps-bessere-rag-systeme-bauen Accessed 1 Nov. 2024