Data science practitioners face many challenges when dealing with the different data types found across projects, each demanding its own processing techniques. A common obstacle is working with data formats that traditional machine learning models struggle to process effectively, resulting in subpar model performance. Since most machine learning algorithms are optimized for numerical data, transforming categorical data into numerical form is essential. However, this transformation often oversimplifies complex categorical relationships, especially when a feature has high cardinality (a large number of unique values), which complicates processing and hurts model accuracy.

High cardinality refers to the number of unique elements within a feature; in a machine learning context, it specifically means the distinct count of categorical labels. When a feature has many unique categorical labels, it has high cardinality, which can complicate model processing. To make categorical data usable in machine learning, these labels are usually converted to numerical form with an encoding technique chosen according to the data's complexity. One common technique is One-Hot Encoding, which assigns each unique label a distinct binary vector. With high-cardinality data, however, One-Hot Encoding can dramatically increase dimensionality, producing complex, high-dimensional datasets that need significant computational capacity for model training and can slow performance.
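A minimal sketch of how One-Hot Encoding grows with cardinality, using pandas `get_dummies`. The data is hypothetical toy data, not from the article's dataset: one column with three labels and one in which all 1,000 values are unique.

```python
import pandas as pd

# Hypothetical toy data: a low-cardinality column with three labels ...
countries = pd.Series(["Country A", "Country B", "Country C", "Country A"])
# ... and a high-cardinality column in which every one of 1,000 values is unique
ids = pd.Series([f"ID_{i}" for i in range(1000)])

# One-Hot Encoding: one binary column per distinct label
countries_one_hot = pd.get_dummies(countries, dtype=int)
ids_one_hot = pd.get_dummies(ids, dtype=int)

print(countries_one_hot.shape)  # (4, 3)
print(ids_one_hot.shape)        # (1000, 1000)
```

Each row of `countries_one_hot` is the binary vector for its label, while the ID column alone turns into 1,000 mostly-zero columns.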

Consider a dataset with 2,000 unique IDs, each linked to one of only three countries. Here the ID feature has a cardinality of 2,000 (since every ID is unique), while the country feature has a cardinality of just 3. Now imagine a feature with 100,000 categorical labels that must be encoded with One-Hot Encoding. This would add one binary column per label, creating an extremely high-dimensional dataset that is inefficient and consumes significant resources.
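The cardinality of each feature can be measured with pandas `nunique`. This sketch builds a hypothetical frame that mirrors the example (2,000 unique IDs spread over three placeholder countries); the column names and country labels are assumptions for illustration.

```python
import numpy as np
import pandas as pd

# Hypothetical data mirroring the example: 2,000 unique IDs, each linked to one of three countries
n_rows = 2000
df = pd.DataFrame({
    "id": [f"ID_{i:04d}" for i in range(n_rows)],
    "country": np.resize(["Country A", "Country B", "Country C"], n_rows),
})

# Cardinality of a feature = number of distinct labels it contains
cardinality = df.nunique()
print(cardinality.to_dict())  # {'id': 2000, 'country': 3}
```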

A widely adopted solution among data scientists is K-Fold Target Encoding. This encoding technique helps reduce feature cardinality by replacing categorical labels with target-mean values computed through K-Fold cross-validation. Because the mean used for each row comes only from data outside that row's fold, the row's own target value never leaks into its encoding. This lowers the risk of overfitting, helping the model learn specific relationships within the data rather than overly general patterns that can hurt model performance.

K-Fold Target Encoding involves dividing the dataset into several equally sized subsets, known as “folds,” with “K” representing the number of subsets. By splitting the dataset into multiple groups, this technique calculates a cross-subset weighted mean for each categorical label, making the encoding more robust and reducing the risk of overfitting.
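One way to write the out-of-fold mean in symbols (an assumed notation, since the article describes it only in words): for row $i$ with label $x_i$, target $y_i$, and fold number $k(i)$,

$$
\hat{x}_i = \frac{\sum_{j \in S_i} y_j}{|S_i|}, \qquad S_i = \{\, j : k(j) \neq k(i),\ x_j = x_i \,\}
$$

Row $i$ itself is never in $S_i$, so its own target cannot leak into its encoding. This is the plain (unweighted) form; smoothed variants that blend it with the global mean also exist, and when $S_i$ is empty a common choice is to fall back to the overall mean of the other folds.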

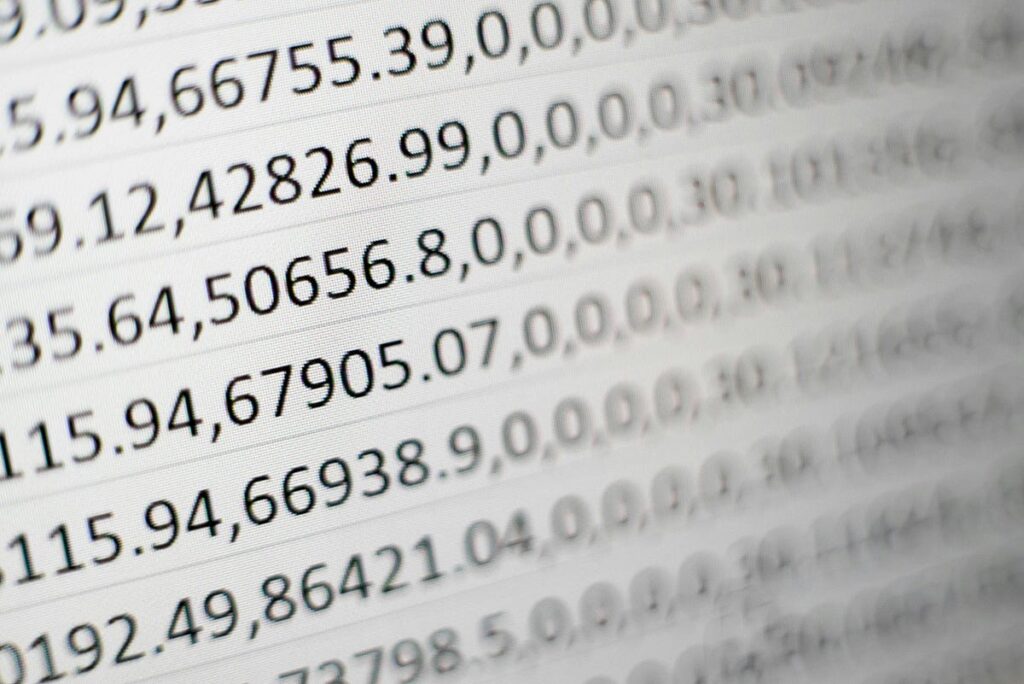

Using the sample dataset in Fig 1, which records Indonesian domestic flight emissions for each flight cycle, we can put this technique into practice. The basic question to ask of this dataset is: “What is the weighted mean of ‘HC Emission’ for each categorical label in ‘Airlines’?” However, you might have the same question people keep asking me: “But if you just calculate them from the target feature, couldn’t that result in another high-cardinality feature?” The simple answer is “Yes, it could.”
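For comparison, the direct approach the question refers to (one mean of the target per label, computed over the whole dataset with no folds) can be sketched with pandas `groupby`. The column names `airline` and `hc_emission` and all values are hypothetical, not taken from Fig 1.

```python
import pandas as pd

# Hypothetical toy data (values invented for illustration, not from Fig 1)
df = pd.DataFrame({
    "airline": ["AirAsia", "Airline B", "AirAsia", "Airline C", "Airline B", "AirAsia"],
    "hc_emission": [1.0, 2.0, 3.0, 8.0, 6.0, 5.0],
})

# Naive target encoding: each label becomes the mean target of ALL rows sharing that label
label_means = df.groupby("airline")["hc_emission"].mean()
df["airline_naive_te"] = df["airline"].map(label_means)

print(label_means.to_dict())  # {'AirAsia': 3.0, 'Airline B': 4.0, 'Airline C': 8.0}
```

Note that `Airline C`, seen only once, is encoded as exactly its own target value. That is the kind of leakage the out-of-fold version avoids.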

Why?

When a large dataset has a highly random target feature with no identifiable patterns, K-Fold Target Encoding may produce a wide variety of mean values for each categorical label, potentially preserving high cardinality rather than reducing it. However, the primary goal of K-Fold Target Encoding is to handle high cardinality, not necessarily to reduce it drastically. The technique works best when there is a meaningful correlation between the target feature and the segments of data within each categorical label.

How does K-Fold Target Encoding work? The simplest explanation is that, for each fold, you calculate the mean of the target feature using only the other folds. This gives each categorical label a weight, expressed as a numerical value, which is more informative than the raw label. Let’s walk through an example calculation with our dataset to make this clearer.
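A minimal sketch of the out-of-fold calculation in pandas, assuming a fold-number column already exists. The function name `kfold_target_encode`, the toy column names, and the global-mean fallback for labels missing from the other folds are all illustrative assumptions, not the author's code.

```python
import numpy as np
import pandas as pd

def kfold_target_encode(df, cat_col, target_col, fold_col):
    """Hypothetical helper: encode cat_col with the mean of target_col from the OTHER folds."""
    encoded = pd.Series(np.nan, index=df.index, dtype=float)
    for fold in df[fold_col].unique():
        in_fold = df[fold_col] == fold
        out_of_fold = df.loc[~in_fold]
        # Mean target per label, using only rows outside the current fold
        label_means = out_of_fold.groupby(cat_col)[target_col].mean()
        # Assumed fallback: labels absent from the other folds get their overall mean
        fallback = out_of_fold[target_col].mean()
        encoded.loc[in_fold] = (
            df.loc[in_fold, cat_col].map(label_means).fillna(fallback).to_numpy()
        )
    return encoded

# Hypothetical toy data with three folds (values invented for illustration)
df = pd.DataFrame({
    "airline": ["AirAsia", "Airline B", "AirAsia", "AirAsia", "Airline B", "AirAsia"],
    "hc_emission": [1.0, 2.0, 3.0, 5.0, 6.0, 7.0],
    "kfold": [1, 1, 2, 2, 3, 3],
})
df["airline_kfold_te"] = kfold_target_encode(df, "airline", "hc_emission", "kfold")
print(df["airline_kfold_te"].tolist())  # [5.0, 6.0, 4.0, 4.0, 2.0, 3.0]
```

For the first row (`AirAsia`, fold 1), the value 5.0 is the mean of the `AirAsia` targets in folds 2 and 3: (3 + 5 + 7) / 3. Notice that `AirAsia` receives a different value in each fold, which is why a noisy target can leave many distinct encoded values.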

To calculate the weight of the ‘AirAsia’ label for the first observation, start by splitting the data into several folds, as shown in Fig 2. You can assign folds manually to ensure an equal distribution, or automate the process with the following sample code:

```python
import numpy as np
import pandas as pd
import seaborn as sns
import matplotlib.pyplot as plt

# To split the data into equal parts, assign a K-Fold number to every row at random.
# `df` is the flight-emissions DataFrame from Fig 1.
n_folds = 8

# Shuffle first, so that fold membership does not depend on the original row order
fold_df = df.sample(frac=1, random_state=42).reset_index(drop=True)

# Cycle through fold numbers 1..8. Fold sizes differ by at most one row, so any
# remaining samples (when len(df) is not divisible by 8) are handled automatically.
fold_df['kfold'] = np.arange(len(fold_df)) % n_folds + 1
```
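If scikit-learn is available, its `KFold` splitter can produce the same kind of fold column; this is a swapped-in alternative, not the author's code. The 20-row frame below is a hypothetical stand-in for the flight dataset, chosen so that its length is not divisible by 8.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import KFold

# Hypothetical stand-in for the flight dataset: 20 rows (not divisible by 8)
df = pd.DataFrame({"hc_emission": np.arange(20, dtype=float)})

kf = KFold(n_splits=8, shuffle=True, random_state=42)
folds = np.zeros(len(df), dtype=int)
# Each split yields (train_idx, val_idx); the val_idx rows form fold number `fold`
for fold, (_, val_idx) in enumerate(kf.split(df), start=1):
    folds[val_idx] = fold
df["kfold"] = folds

print(df["kfold"].value_counts().sort_index().tolist())  # [3, 3, 3, 3, 2, 2, 2, 2]
```

`KFold` keeps the row order unchanged and places every row in exactly one validation fold, with the first `len(df) % 8` folds getting one extra row.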