The U.S. Justice Department has announced that it has taken action to take down a sophisticated, AI-powered information operation reportedly orchestrated by Russia. According to the department, the operation involved almost 1,000 accounts on the social platform X that assumed American identities.

The operation is alleged to be linked to Russia’s state-run RT News network and managed by the country’s Federal Security Service. Its aim was to “disseminate disinformation to sow discord in the US and elsewhere,” according to court documents.

The Justice Department today announced the seizure of two domains and the search of 968 social media accounts used by Russian actors to create an AI-enhanced social media bot farm that spread disinformation in the US and overseas. Learn more: https://t.co/ibmqaruf5U pic.twitter.com/apWv6rGRYL

— FBI (@FBI) July 9, 2024

These X accounts were allegedly set up to disseminate pro-Russian propaganda. However, they were not operated by people but were automated “bots.” RT, formerly known as Russia Today, broadcasts in English among other languages and is notably more influential online than through traditional broadcast methods.

The bot operation was reportedly traced back to RT’s deputy editor-in-chief in 2022 and received backing and funding from an official at the Federal Security Service, the primary successor to the KGB. The Justice Department also took control of two websites instrumental in managing the bot accounts and compelled X to surrender details on 968 accounts believed to be bots.

Russian bot farms using AI

The FBI, together with Dutch intelligence and Canadian cybersecurity officials, warned about “Meliorator,” a tool capable of creating “authentic appearing social media personas en masse.” It can also generate text and images, and echo disinformation from other bots.

Court documents revealed that AI was used to create the accounts to disseminate anti-Ukraine sentiment.

“Today’s actions represent a first in disrupting a Russian-sponsored generative AI-enhanced social media bot farm,” said FBI Director Christopher Wray.

“Russia intended to use this bot farm to disseminate AI-generated foreign disinformation, scaling their work with the assistance of AI to undermine our partners in Ukraine and influence geopolitical narratives favorable to the Russian government,” Wray added.

The accounts have since been removed by X, and screenshots provided by FBI investigators showed that they had attracted very few followers.

Faking American identities

The Washington Post reported a significant loophole that allowed bots to bypass X’s security measures. According to the news outlet, they “can copy-paste OTPs from their email accounts to log in.” The Justice Department reported that this operation’s use of U.S.-based domains constitutes a violation of the International Emergency Economic Powers Act. In addition, the financial transactions supporting these operations violate U.S. federal money laundering laws.

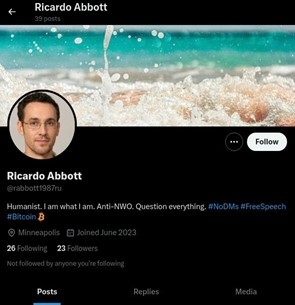

Many of these fabricated profiles copied American identities, using U.S.-sounding names and specifying locations within the U.S. on X. The Justice Department pointed out that these profiles often featured headshots against gray backgrounds, which appeared to have been created using AI.

For example, a profile under the name Ricardo Abbott, claiming to be from Minneapolis, circulated a video of Russian President Vladimir Putin defending Russia’s involvement in Ukraine. Another, named Sue Williamson, shared a video of Putin explaining that the conflict in Ukraine was not about territory but about “principles on which the New World Order will be based.” These posts were subsequently liked and shared by fellow bots within the network.

Further details from the Justice Department revealed that linked email accounts can be created if the user owns the web domain. For instance, control over the domain www.example.com allows email addresses at example.com to be created.

In this case, the perpetrators controlled and used the domains “mlrtr.com” and “otanmail.com,” both registered through a U.S.-based provider, to set up email servers that supported the creation of fake social media accounts through their bot farm technology.

Featured image: Canva / Ideogram