Software design principles for thoughtfully designing reliable, high-performing LLM applications. A framework to bridge the gap between potential and production-grade performance.

Large Language Models (LLMs) hold immense potential, but developing reliable production-grade applications remains challenging. After building dozens of LLM systems, I’ve distilled the formula for success into four fundamental principles that any team can apply.

“LLM-Native apps are 10% sophisticated model, and 90% experimenting data-driven engineering work.”

Building production-ready LLM applications requires careful engineering practices. When users can’t interact directly with the LLM, the prompt must be meticulously composed to cover all nuances, as iterative user feedback may be unavailable.

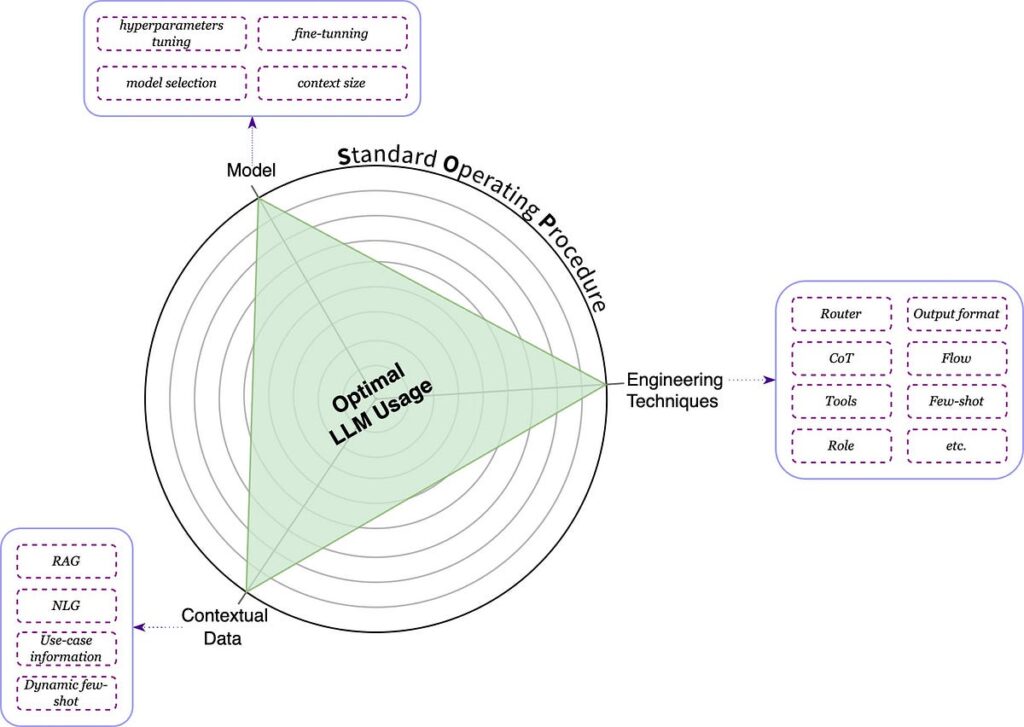

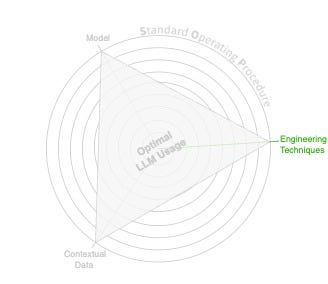

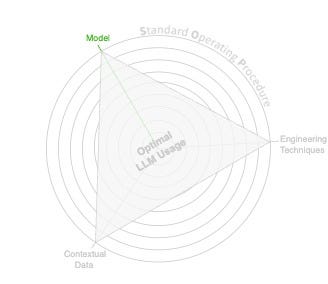

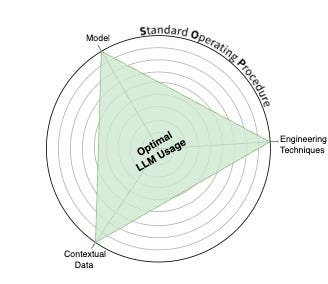

Introducing the LLM Triangle Principles

The LLM Triangle Principles encapsulate the essential guidelines for building effective LLM-native apps. They provide a solid conceptual framework, guide developers in constructing robust and reliable LLM-native applications, and offer direction and support.

The Key Apices

The LLM Triangle Principles introduce four programming principles to help you design and build LLM-Native apps.

The first principle is the Standard Operating Procedure (SOP). The SOP guides the three apices of our triangle: Model, Engineering Techniques, and Contextual Data.

Optimizing the three apices through the lens of the SOP is the key to ensuring a high-performing LLM-native app.

1. Standard Operating Procedure (SOP)

Standard Operating Procedure (SOP) is a well-known term in the industrial world. It’s a set of step-by-step instructions compiled by large organizations to help their workers carry out routine operations while maintaining high-quality and similar results each time. Detailed written instructions practically turn inexperienced or low-skilled workers into experts.

The LLM Triangle Principles borrow the SOP paradigm and encourage you to consider the model an inexperienced/unskilled worker. We can ensure higher-quality results by “teaching” the model how an expert would perform this task.

“Without an SOP, even the most powerful LLM will fail to deliver consistently high-quality results.”

When thinking about the SOP guiding principle, we should identify what techniques will help us implement the SOP most effectively.

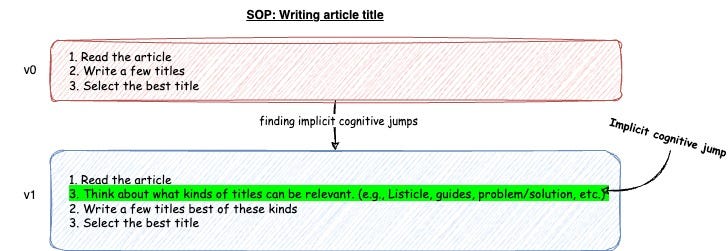

1.1. Cognitive modeling

To create an SOP, we need to take our best-performing workers (domain experts), model how they think and work to achieve the same results, and write down everything they do.

After editing and formalizing it, we’ll have detailed instructions to help every inexperienced or low-skilled worker succeed and yield excellent work.

As with humans, it’s essential to reduce the cognitive load of the task by simplifying or splitting it. Following a simple step-by-step instruction is more straightforward than a lengthy, complex procedure.

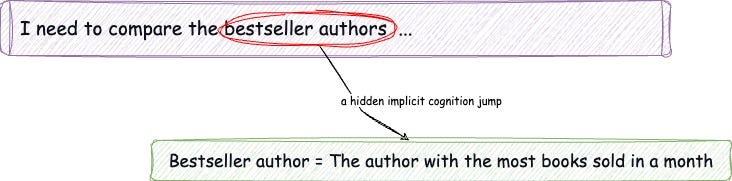

During this process, we identify the hidden implicit cognition “jumps”: the small, unconscious, often unspoken assumptions or decisions experts make that can substantially affect the final result.

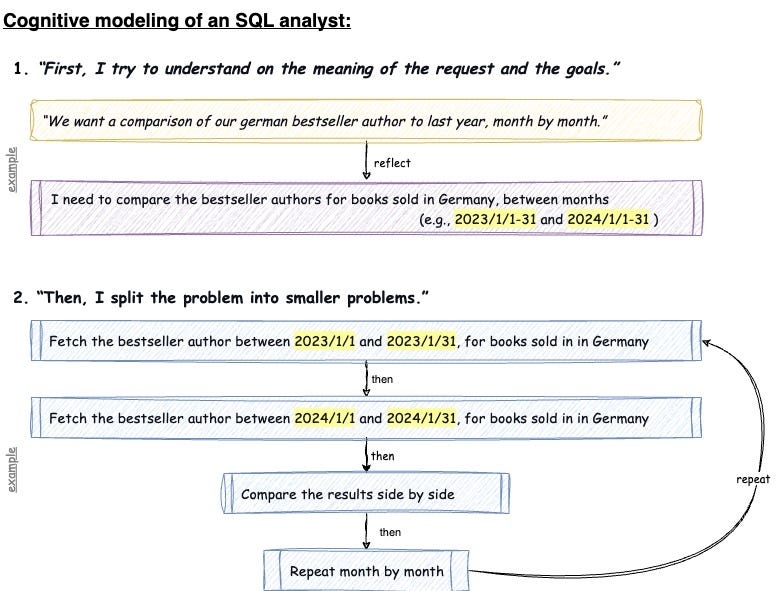

For example, let’s say we want to model an SQL analyst. We’ll start by interviewing them and asking a few questions, such as:

- What do you do when you’re asked to analyze a business problem?

- How do you make sure your solution meets the request?

- <reflecting the process, as we understand it, back to the interviewee>

- Does this accurately capture your process? <getting corrections>

- Etc.

The implicit cognition process takes many shapes and forms; a typical example is a “domain-specific definition.” For example, “bestseller” might be a prominent term for our domain expert, but not for everyone else.

Eventually, we’ll have a full SOP “recipe” that allows us to emulate our top-performing analyst.

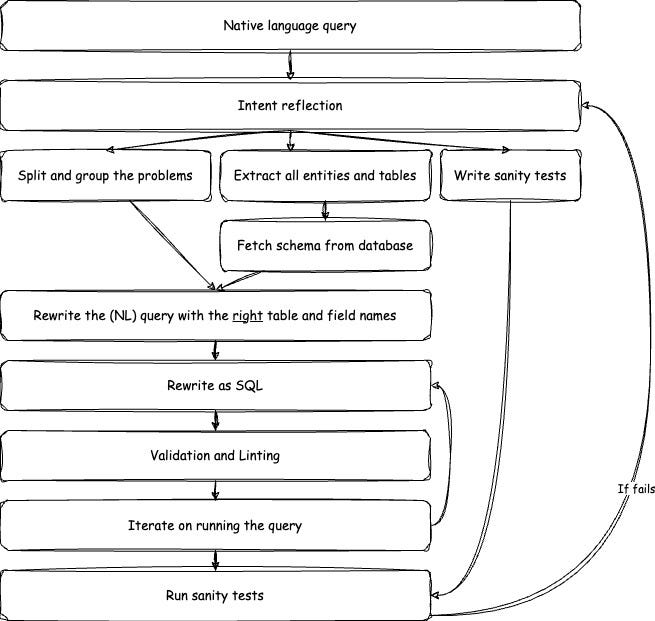

When mapping out these complex processes, it can be helpful to visualize them as a graph. This is especially helpful when the process is nuanced and involves many steps, conditions, and splits.
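One way to make such a graph concrete is a plain adjacency mapping. The sketch below is only illustrative: the step names (loosely based on the SQL-analyst example), the condition labels, and the `walk` helper are all hypothetical, not part of any real SOP.

```python
# Hypothetical SOP for the SQL-analyst example, written as a directed graph.
# Each key is a step; each value maps a condition label to the next step.
SOP_GRAPH = {
    "understand_request": {"always": "define_terms"},
    "define_terms": {"always": "write_query"},
    "write_query": {"always": "validate_result"},
    "validate_result": {"meets_request": "report", "does_not_meet": "write_query"},
    "report": {},  # terminal step
}


def walk(graph, start, decide):
    """Follow the graph from `start`, asking `decide(step, options)` at each split."""
    path = [start]
    step = start
    while graph[step]:
        options = graph[step]
        label = "always" if "always" in options else decide(step, sorted(options))
        step = options[label]
        path.append(step)
    return path


# Example run: validation fails once, then passes.
answers = iter(["does_not_meet", "meets_request"])
path = walk(SOP_GRAPH, "understand_request", lambda step, options: next(answers))
print(" -> ".join(path))
```

Writing the splits down this way makes loops (such as returning to `write_query`) explicit before any implementation decisions are made.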

Our final solution should mimic the steps defined in the SOP. At this stage, try to ignore the implementation; later, you can implement it across one or many steps/chains throughout the solution.

Unlike the rest of the principles, cognitive modeling (SOP writing) is the only standalone process. It’s highly recommended that you model your process before writing code. That said, while implementing it, you might go back and change it based on new insights or understanding you have gained.

Now that we understand the importance of creating a well-defined SOP that guides our business understanding of the problem, let’s explore how we can effectively implement it using various engineering techniques.

2. Engineering Techniques

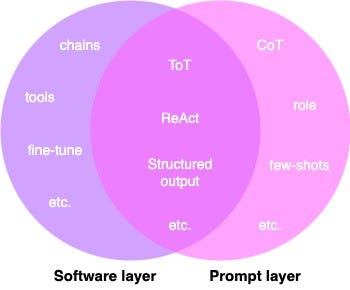

Engineering Techniques help you practically implement your SOP and get the most out of the model. When thinking about the Engineering Techniques principle, we should consider which tools (techniques) in our toolbox can help us implement and shape our SOP and assist the model in communicating well with us.

Some engineering techniques are implemented only in the prompt layer, while many require a software layer to be effective, and some combine both layers.

While many small nuances and techniques are discovered every day, I’ll cover two primary techniques: workflow/chains and agents.

2.1. LLM-Native architectures (aka flow engineering or chains)

The LLM-Native Architecture describes the agentic flow your app goes through to yield the task’s result.

Each step in our flow is a standalone process that must occur to achieve our task. Some steps will be performed simply by deterministic code; for others, we will use an LLM (agent).

To do that, we can reflect on the Standard Operating Procedure (SOP) we drew and think:

- Which SOP steps should we glue together into the same agent? And which steps should we split into different agents?

- Which SOP steps should be executed in a standalone manner (though they might be fed with information from previous steps)?

- Which SOP steps can we perform in deterministic code?

- Etc.

Before navigating to the next step in our architecture/graph, we should define its key properties:

- Inputs and outputs: What’s the signature of this step? What’s required before we can take an action? (This can also serve as an output format for an agent.)

- Quality assurances: What makes the response “good enough”? Are there cases that require human intervention in the loop? What kinds of assertions can we configure?

- Autonomous level: How much control do we need over the result’s quality? What range of use cases can this stage handle? In other words, how much can we trust the model to work independently at this point?

- Triggers: What is the next step? What defines the next step?

- Non-functional: What’s the required latency? Do we need special business monitoring here?

- Failover control: What kinds of failures (systematic and agentic) can occur? What are our fallbacks?

- State management: Do we need a special state management mechanism? How do we retrieve/save states (define the indexing key)? Do we need persistent storage? What are the different usages of this state (e.g., cache, logging, etc.)?

- Etc.
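Several of the properties above can be written down as data before any prompt exists. The following is a minimal sketch, assuming a hypothetical `Step` record and `execute` helper (neither comes from a real library); it covers required inputs, quality assertions, a fallback, and state keyed by step name.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional


@dataclass
class Step:
    """One node of the flow. All field names here are illustrative assumptions."""
    name: str
    run: Callable[[dict], Any]                        # state -> output (LLM call or plain code)
    required_inputs: tuple = ()                       # inputs that must exist before running
    assertions: list = field(default_factory=list)    # quality checks applied to the output
    fallback: Optional[Callable[[dict], Any]] = None  # failover used when run or checks fail


def execute(step: Step, state: dict) -> Any:
    """Run a step against shared state, applying its checks and fallback."""
    missing = [key for key in step.required_inputs if key not in state]
    if missing:
        raise KeyError(f"{step.name} is missing inputs: {missing}")
    try:
        output = step.run(state)
        if not all(check(output) for check in step.assertions):
            raise ValueError(f"{step.name} output failed a quality check")
    except Exception:
        if step.fallback is None:
            raise
        output = step.fallback(state)
    state[step.name] = output  # state management: results are indexed by step name
    return output


# A stand-in step: plain code plays the role of the LLM call.
summarize = Step(
    name="summarize",
    run=lambda state: state["text"][:20],
    required_inputs=("text",),
    assertions=[lambda out: len(out) > 0],
    fallback=lambda state: "(no summary)",
)
state = {"text": ""}
print(execute(summarize, state))  # the empty output fails the check, so the fallback is used
```

Keeping these properties outside the prompt means the same checks apply whether a step is backed by an LLM or by deterministic code.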

2.2. What are agents?

An LLM agent is a standalone component of an LLM-Native architecture that involves calling an LLM.

It’s an instance of LLM usage with the prompt containing the context. Not all agents are equal: some will use “tools,” some won’t; some might be used “just once” in the flow, while others can be called recursively or multiple times, carrying the previous inputs and outputs.

2.2.1. Agents with tools

Some LLM agents can use “tools”: predefined functions for tasks like calculations or web searches. The agent outputs instructions specifying the tool and input, which the application executes, returning the result to the agent.

To understand the concept, let’s look at a simple prompt implementation for tool calling. This can work even with models not natively trained to call tools:

You are an assistant with access to these tools:

- calculate(expression: str) -> str - calculate a mathematical expression

- search(query: str) -> str - search for an item in the inventory

Given an input, respond with YAML with keys: `func` (str) and `arguments` (map), or `message` (str). Given input:

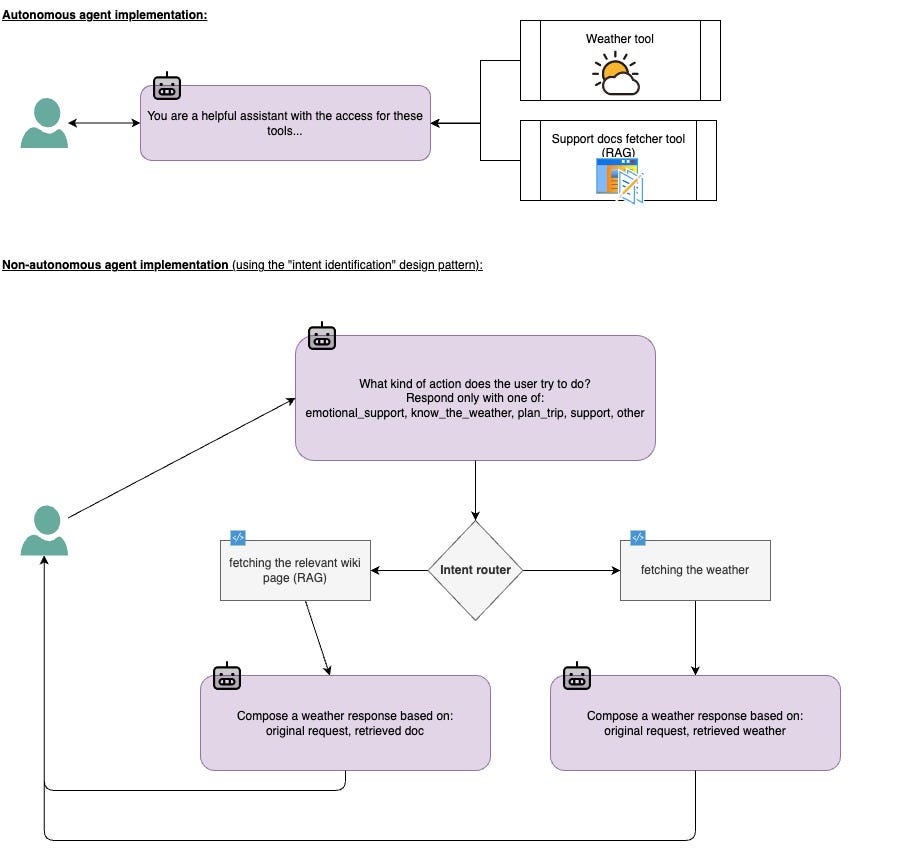
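On the application side, the reply to such a prompt still has to be parsed and executed. The sketch below assumes the YAML reply has already been parsed into a Python dict (for example with a YAML library) and shows only the dispatch step. The `handle_reply` name, the inventory contents, and the arithmetic-only `calculate` are illustrative assumptions, not the author's implementation.

```python
import ast
import operator

# Binary operators the hypothetical `calculate` tool accepts.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}


def calculate(expression: str) -> str:
    """Evaluate basic arithmetic without calling eval() on model output."""
    def ev(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        raise ValueError("unsupported expression")
    return str(ev(ast.parse(expression, mode="eval").body))


def search(query: str) -> str:
    """Stand-in inventory lookup; the inventory contents are made up."""
    inventory = {"widget": "12 in stock", "gadget": "out of stock"}
    return inventory.get(query.lower(), "not found")


TOOLS = {"calculate": calculate, "search": search}


def handle_reply(reply: dict) -> str:
    """Dispatch one parsed model reply: either a plain message or a tool call."""
    if "message" in reply:
        return reply["message"]
    func = TOOLS.get(reply.get("func"))
    if func is None:
        return f"error: unknown tool {reply.get('func')!r}"
    try:
        return func(**reply.get("arguments", {}))
    except Exception as exc:  # report failures back to the agent instead of crashing
        return f"error: {exc}"


print(handle_reply({"func": "calculate", "arguments": {"expression": "2 * (3 + 4)"}}))
print(handle_reply({"func": "search", "arguments": {"query": "Widget"}}))
```

Returning errors as strings lets the application feed them back to the agent as the tool result, so the agent can correct a malformed call on the next turn.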

It’s important to distinguish between agents with tools (hence autonomous agents) and agents whose output can lead to performing an action:

Autonomous agents are given the right to decide whether they should act and with what action. In contrast, a (nonautonomous) agent simply “processes” our request (e.g., classification); based on that result, our deterministic code performs an action, and the model has zero control over it.
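The nonautonomous case can be sketched in a few lines. In this illustration, `classify` is a placeholder where an LLM classification call would go (a keyword rule stands in for it), and the labels and actions are invented for the example.

```python
def classify(ticket: str) -> str:
    """Placeholder for an LLM classification call; a keyword rule stands in here."""
    return "refund" if "refund" in ticket.lower() else "other"


# Deterministic routing table owned by the application, not by the model.
ACTIONS = {
    "refund": lambda ticket: "opened refund case",
    "other": lambda ticket: "forwarded to support queue",
}


def handle(ticket: str) -> str:
    label = classify(ticket)
    # An unexpected label cannot trigger anything outside this table.
    action = ACTIONS.get(label, ACTIONS["other"])
    return action(ticket)


print(handle("I would like a refund for order 1234"))
```

The model only produces a label; which action runs, and with what arguments, is decided entirely by application code.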

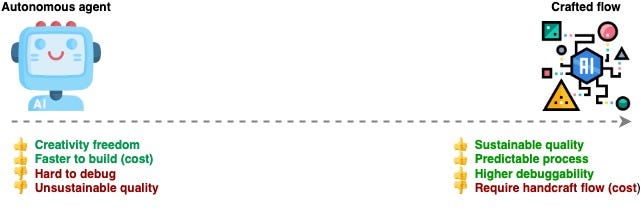

As we increase the agent’s autonomy in planning and executing tasks, we enhance its decision-making capabilities but potentially reduce control over output quality. Although this might look like a magical way to make the app more “smart” or “advanced,” it comes at the cost of losing control over quality.

Beware the allure of fully autonomous agents. While their architecture might look appealing and simpler, using it for everything (or as the initial PoC) can be very misleading about how the app will behave in “real production” cases. Autonomous agents are hard to debug and unpredictable (responses with unstable quality), which makes them unusable for production.

Currently, agents (without implicit guidance) are not very good at planning complex processes and usually skip essential steps. For example, in our “Wikipedia writer” use case, they’ll just start writing and skip the systematic process. This makes agents (and autonomous agents especially) only as good as the model, or more accurately, only as good as the data they were trained on relative to your task.

Instead of giving the agent (or a swarm of agents) the liberty to do everything end-to-end, try to confine their task to a specific region of your flow/SOP that requires this kind of agility or creativity. This can yield higher-quality results because you enjoy the best of both worlds.

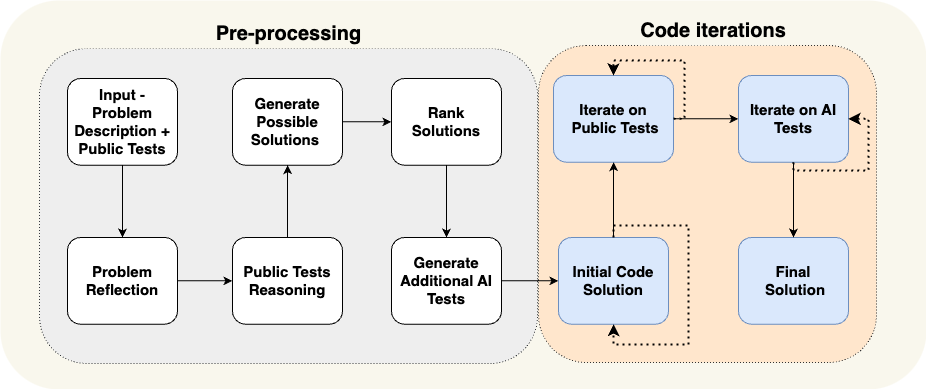

A great example is AlphaCodium: by combining a structured flow with different agents (including a novel agent that iteratively writes and tests code), they increased GPT-4 accuracy (pass@5) on CodeContests from 19% to 44%.
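The general shape of an “iteratively write and test” step is a bounded loop that feeds the failure back into the next attempt. The sketch below is not AlphaCodium’s implementation; it shows the same loop shape with JSON validation standing in for running code tests, and `generate` is a hypothetical callable where an LLM call would go.

```python
import json


def iterate_until_valid(generate, check, max_rounds=3):
    """Call `generate(feedback)` until `check(candidate)` reports no error."""
    feedback = None
    for round_no in range(1, max_rounds + 1):
        candidate = generate(feedback)
        error = check(candidate)
        if error is None:
            return candidate, round_no
        feedback = error  # the next attempt sees what went wrong
    return None, max_rounds


def check_json(candidate):
    """Return an error message, or None when the candidate is acceptable."""
    try:
        data = json.loads(candidate)
    except json.JSONDecodeError as exc:
        return f"invalid JSON: {exc.msg}"
    if not isinstance(data, dict) or "answer" not in data:
        return "missing key: answer"
    return None


# Canned drafts stand in for model outputs: the first is malformed.
drafts = iter(['{"answer": 42', '{"answer": 42}'])
result = iterate_until_valid(lambda feedback: next(drafts), check_json)
print(result)
```

The `max_rounds` cap keeps the creative part of the flow bounded, which is the point of confining the agent to one region of the SOP.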

While engineering techniques lay the groundwork for implementing our SOP and optimizing LLM-native applications, we must also carefully consider another critical component of the LLM Triangle: the model itself.

3. Model

The model we choose is a critical component of our project’s success. A large one (such as GPT-4 or Claude Opus) might yield better results but be quite costly at scale, while a smaller model might be less “smart” but help with the budget. When thinking about the Model principle, we should aim to identify our constraints and goals and what kind of model can help us fulfill them.

“Not all LLMs are created equal. Match the model to the mission.”

The truth is that we don’t always need the largest model; it depends on the task. To find the right match, we must have an experimental process and try multiple variations of our solution.

It helps to look at our “inexperienced worker” analogy: a very “smart” worker with many academic credentials will probably succeed in some tasks easily. Still, they might be overqualified for the job, and hiring a “cheaper” candidate will be much more cost-effective.

When considering a model, we should define and compare solutions based on the tradeoffs we are willing to take:

- Task complexity: Simpler tasks (such as summarization) are easier to complete with smaller models, while reasoning usually requires larger models.

- Inference infrastructure: Should it run in the cloud or on edge devices? Model size can be prohibitive on a small phone but tolerable for cloud serving.

- Pricing: What price can we tolerate? Is it cost-effective considering the business impact and predicted usage?

- Latency: As the model grows larger, the latency grows as well.

- Labeled data: Do we have data we can use right away to enrich the model with examples or relevant information it wasn’t trained on?
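Once candidate models have been measured on your own test set, the tradeoffs above can be applied mechanically. This is a minimal sketch under stated assumptions: the `Candidate` record, the thresholds, and every number below are made up for illustration and do not describe any real model.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str             # hypothetical model label
    accuracy: float       # measured on our own labeled test set
    cost_per_1k: float    # cost per 1,000 requests, in any consistent unit
    p95_latency_s: float  # 95th percentile latency in seconds


def pick_model(candidates, min_accuracy, max_latency_s):
    """Return the cheapest candidate meeting the quality and latency bars, or None."""
    viable = [c for c in candidates
              if c.accuracy >= min_accuracy and c.p95_latency_s <= max_latency_s]
    return min(viable, key=lambda c: c.cost_per_1k, default=None)


# Made-up measurements, for illustration only.
results = [
    Candidate("large", accuracy=0.93, cost_per_1k=30.0, p95_latency_s=4.0),
    Candidate("medium", accuracy=0.90, cost_per_1k=6.0, p95_latency_s=1.5),
    Candidate("small", accuracy=0.78, cost_per_1k=0.5, p95_latency_s=0.4),
]
choice = pick_model(results, min_accuracy=0.85, max_latency_s=2.0)
print(choice.name if choice else "no candidate fits")
```

Treating quality and latency as hard constraints and cost as the thing to minimize is one design choice among several; a weighted score would work too if the constraints are soft.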

In many cases, until you have the “in-house expertise,” it helps to pay a little extra for an experienced worker; the same applies to LLMs.

If you don’t have labeled data, start with a stronger (larger) model, collect data, and then use it to empower a model through few-shot examples or fine-tuning.

3.1. Fine-tuning a model

There are a few aspects you must consider before resorting to fine-tuning a model:

- Privacy: Your data might include pieces of private information that must be kept from the model. If your data contains private information, you must anonymize it to avoid legal liabilities.

- Laws, Compliance, and Data Rights: Training a model can raise legal questions. For example, the OpenAI terms-of-use policy restricts you from using generated responses to train a non-OpenAI model. Another typical example is complying with the GDPR, which requires a “right for revocation” (formally, the right to erasure), where a user can require the company to remove their information from the system. This raises legal questions about whether the model should be retrained.

- Updating latency: The update latency (knowledge cutoff) is much higher when training a model. Unlike embedding new information via the context (see the “4. Contextual Data” section below), which takes effect immediately, training the model is a long process. Because of that, models are retrained less often.

- Development and operation: Implementing a reproducible, scalable, and monitored fine-tuning pipeline is essential, as is continuously evaluating the results’ performance. This complex process requires constant maintenance.

- Cost: Retraining is considered expensive due to its complexity and the highly intensive resources (GPUs) required per training run.

The ability of LLMs to act as in-context learners and the fact that newer models support a much larger context window simplify our implementation dramatically and can provide excellent results even without fine-tuning. Due to the complexity of fine-tuning, using it as a last resort or skipping it entirely is recommended.

Conversely, fine-tuning models for specific tasks (e.g., structured JSON output) or domain-specific language can be highly efficient. A small, task-specific model can be highly effective and much cheaper in inference than large LLMs. Choose your solution wisely, and assess all the relevant considerations before escalating to LLM training.

“Even the most powerful model requires relevant and well-structured contextual data to shine.”

4. Contextual Data

LLMs are in-context learners. That means that by providing task-specific information, we can have the LLM agent perform a task without special training or fine-tuning. This enables us to “teach” new knowledge or skills easily. When thinking about the Contextual Data principle, we should aim to organize and model the available data and decide how to compose it within our prompt.

To compose our context, we include the relevant (contextual) information within the prompt we send to the LLM. There are two kinds of contexts we can use:

- Embedded contexts: information pieces embedded as part of the prompt.

You are the helpful assistant of <name>, a <role> at <company>

- Attachment contexts: a list of information pieces glued to the beginning/end of the prompt.

Summarize the provided emails while keeping a friendly tone.

---

<email_0>

<email_1>

Contexts are usually implemented using a “prompt template” (such as jinja2, mustache, or simply native formatted string literals); this way, we can compose them elegantly while keeping the essence of our prompt:

# Embedded context with an attachment context

prompt = f"""

You are the helpful assistant of {name}. {name} is a {role} at {company}.

Help me write a {tone} response to the attached email.

Always sign your email with:

{signature}

---

{email}

"""

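The template above assumes its variables already exist. A minimal runnable version, wrapped in a hypothetical `build_prompt` function with made-up values, could look like this:

```python
def build_prompt(name, role, company, tone, signature, email):
    """Fill the embedded fields and append the email as an attachment context."""
    return f"""
You are the helpful assistant of {name}. {name} is a {role} at {company}.
Help me write a {tone} response to the attached email.
Always sign your email with:
{signature}
---
{email}
"""


# All values below are invented for the example.
prompt = build_prompt(
    name="Dana",
    role="data analyst",
    company="Acme",
    tone="friendly",
    signature="Best,\nDana",
    email="Hi Dana, could you share last quarter's report?",
)
print(prompt)
```

Keeping the template in one function makes it easy to test that every field is filled before the prompt is sent.
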
4.1. Few-shot learning

Few-shot learning is a powerful way to “teach” LLMs by example without requiring extensive fine-tuning. Providing a few representative examples in the prompt can guide the model in understanding the desired format, style, or task.

For instance, if we want the LLM to generate email responses, we could include a few examples of well-written responses in the prompt. This helps the model learn the preferred structure and tone.

We can use diverse examples to help the model catch different corner cases or nuances and learn from them. Therefore, it’s essential to include a variety of examples that cover the range of scenarios your application might encounter.

As your application grows, you may consider implementing “dynamic few-shot,” which involves programmatically selecting the most relevant examples for each input. While it increases your implementation complexity, it ensures the model receives the most appropriate guidance for each case, which can significantly improve performance across a wide range of tasks without costly fine-tuning.
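A minimal sketch of dynamic few-shot selection, assuming a small in-memory pool of labeled examples. Word-overlap (Jaccard) similarity stands in for the embedding similarity a real system would more likely use; the `POOL` contents and all function names are illustrative.

```python
def tokens(text: str) -> set:
    return set(text.lower().split())


def similarity(a: str, b: str) -> float:
    """Jaccard overlap of word sets; a stand-in for embedding similarity."""
    ta, tb = tokens(a), tokens(b)
    return len(ta & tb) / len(ta | tb) if ta | tb else 0.0


def select_examples(user_input: str, pool: list, k: int = 2) -> list:
    """Pick the k pool examples whose input is most similar to the new input."""
    ranked = sorted(pool, key=lambda ex: similarity(user_input, ex["input"]), reverse=True)
    return ranked[:k]


def render_few_shot(user_input: str, pool: list, k: int = 2) -> str:
    """Render the selected examples followed by the new input."""
    shots = select_examples(user_input, pool, k)
    blocks = [f"Input: {ex['input']}\nOutput: {ex['output']}" for ex in shots]
    return "\n\n".join(blocks + [f"Input: {user_input}\nOutput:"])


# Invented examples for a ticket-routing task.
POOL = [
    {"input": "where is my order", "output": "shipping"},
    {"input": "i want a refund for my order", "output": "billing"},
    {"input": "the app crashes on login", "output": "technical"},
]
print(render_few_shot("app crashes when i login", POOL, k=1))
```

Only the selection function needs to change when moving from word overlap to embeddings; the rendering stays the same.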

4.2. Retrieval Augmented Generation

Retrieval Augmented Generation (RAG) is a technique for retrieving relevant documents for additional context before generating a response. It’s like giving the LLM a quick peek at specific reference material to help inform its answer. This keeps responses current and factual without needing to retrain the model.

For instance, in a support chatbot application, RAG could pull relevant help-desk wiki pages to inform the LLM’s answers.

This approach helps LLMs stay current and reduces hallucinations by grounding responses in retrieved facts. RAG is particularly helpful for tasks that require updated or specialized knowledge without retraining the entire model.

For example, suppose we’re building a support chat for our product. In that case, we can use RAG to retrieve a relevant document from our helpdesk wiki, then provide it to an LLM agent and ask it to compose an answer based on the question and the provided document.

There are three key pieces to look at while implementing RAG:

- Retrieval mechanism: While the traditional implementation of RAG involves retrieving a relevant document using a vector similarity search, sometimes it’s better or cheaper to use simpler methods such as keyword-based search (like BM25).

- Indexed data structure: Indexing the entire document naively, without preprocessing, may limit the effectiveness of the retrieval process. Sometimes, we want to add a data preparation step, such as preparing a list of questions and answers based on the document.

- Metadata: Storing relevant metadata allows for more efficient referencing and filtering of information (e.g., narrowing down wiki pages to only those related to the user’s specific product inquiry). This extra data layer streamlines the retrieval process.
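To illustrate the retrieval mechanism and metadata points together, here is a small keyword-based retriever: a from-scratch BM25 scorer over whitespace tokens with a metadata pre-filter. It is a sketch, not a production retriever; the document schema (`text` and `meta` keys), the `bm25_search` name, and the wiki snippets are assumptions made for the example.

```python
import math
from collections import Counter


def bm25_search(query, docs, k1=1.5, b=0.75, top_k=3, **filters):
    """Rank docs by BM25 over whitespace tokens, after a metadata pre-filter.

    `docs` is a list of dicts with "text" and "meta" keys (an assumed schema).
    """
    pool = [d for d in docs
            if all(d["meta"].get(key) == value for key, value in filters.items())]
    if not pool:
        return []
    tokenized = [d["text"].lower().split() for d in pool]
    avg_len = sum(len(t) for t in tokenized) / len(tokenized)
    doc_freq = Counter(term for t in tokenized for term in set(t))
    scored = []
    for doc, toks in zip(pool, tokenized):
        tf = Counter(toks)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            idf = math.log((len(pool) - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1)
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        if score > 0:
            scored.append((score, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


# Invented help-desk snippets tagged with the product they belong to.
WIKI = [
    {"text": "reset the router by holding the power button", "meta": {"product": "router"}},
    {"text": "reset your password from the account page", "meta": {"product": "app"}},
    {"text": "router firmware update steps", "meta": {"product": "router"}},
]
hits = bm25_search("reset router", WIKI, product="router")
for hit in hits:
    print(hit["text"])
```

Filtering on metadata before scoring keeps unrelated products out of the candidate set entirely, rather than hoping they rank low.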

4.3. Providing relevant context

The context information relevant to your agent can vary. Although it may seem beneficial, providing the model (like the “unskilled worker”) with too much information can be overwhelming and irrelevant to the task. Theoretically, this causes the model to learn irrelevant information (or token connections), which can lead to confusion and hallucinations.

When Gemini 1.5 was released and introduced as an LLM that could process up to 10M tokens, some practitioners questioned whether context was still an issue. While it’s a fantastic accomplishment, especially for some use cases (such as chat with PDFs), it’s still limited, especially when reasoning over various documents.

Compacting the prompt and providing the LLM agent with only relevant information is crucial. This reduces the processing power the model invests in irrelevant tokens, improves the quality, optimizes the latency, and reduces the cost.

There are many tricks to improve the relevancy of the provided context, most of which relate to how you store and catalog your data.

For RAG applications, it’s handy to add a data preparation step that shapes the information you store (e.g., questions and answers based on the document, then providing the LLM agent only with the answer; this way, the agent gets a summarized and shorter context), and to use re-ranking algorithms on top of the retrieved documents to refine the results.
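After re-ranking, one simple way to keep the prompt compact is to pack the best documents into a fixed budget. The sketch below assumes the documents arrive already ranked, best first, and uses word count as a rough stand-in for a real tokenizer; `pack_context` is a hypothetical helper name.

```python
def pack_context(ranked_docs, budget_tokens, count_tokens=lambda text: len(text.split())):
    """Keep the highest-ranked docs that fit the budget, preserving rank order.

    Word count is only a rough approximation of model tokens.
    """
    kept, used = [], 0
    for doc in ranked_docs:
        cost = count_tokens(doc)
        if used + cost > budget_tokens:
            continue  # skip docs that do not fit; a shorter one further down may still fit
        kept.append(doc)
        used += cost
    return kept


# Documents are assumed to be sorted by relevance already.
ranked = ["a b c", "d e f g h", "i j"]
print(pack_context(ranked, budget_tokens=5))
```

Skipping an oversized document instead of stopping at it favors filling the budget; stopping at the first miss would favor strict rank order.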

“Data fuels the engine of LLM-native applications. A strategic design of contextual data unlocks their true potential.”

Conclusion and Implications

The LLM Triangle Principles provide a structured approach to developing high-quality LLM-native applications, addressing the gap between LLMs’ enormous potential and real-world implementation challenges. Developers can create more reliable and effective LLM-powered solutions by focusing on three key principles (the Model, Engineering Techniques, and Contextual Data), all guided by a well-defined SOP.

Key takeaways

- Start with a clear SOP: Model your expert’s cognitive process to create a step-by-step guide for your LLM application. Use it as a guide while thinking about the other principles.

- Choose the right model: Balance capabilities with cost, and consider starting with larger models before potentially moving to smaller, fine-tuned ones.

- Leverage engineering techniques: Implement LLM-native architectures and use agents strategically to optimize performance and maintain control. Experiment with different prompt techniques to find the most effective prompt for your case.

- Provide relevant context: Use in-context learning, including RAG, when appropriate, but be wary of overwhelming the model with irrelevant information.

- Iterate and experiment: Finding the right solution often requires testing and refining your work. I recommend reading and implementing the tips in “Building LLM Apps: A Clear Step-By-Step Guide” for a detailed guide to the LLM-Native development process.

By applying the LLM Triangle Principles, organizations can move beyond a simple proof-of-concept and develop robust, production-ready LLM applications that truly harness the power of this transformative technology.

Special thanks to Gal Peretz, Gad Benram, Liron Izhaki Allerhand, Itamar Friedman, Lee Twito, Ofir Ziv, Philip Tannor, and Shai Alon for insights, feedback, and editing notes.

The LLM Triangle Principles to Architect Reliable AI Apps was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.