The world of artificial intelligence is witnessing a revolution, and at its forefront are large language models that seem to grow more powerful by the day. From BERT to GPT-3 to PaLM, these AI giants are pushing the boundaries of what’s possible in natural language processing. But have you ever wondered what fuels their meteoric rise in capabilities?

In this post, we’ll embark on a fascinating journey into the heart of language model scaling. We’ll uncover the secret sauce that makes these models tick, a potent blend of three crucial ingredients: model size, training data, and computational power. By understanding how these factors interact and scale, we’ll gain invaluable insights into the past, present, and future of AI language models.

So, let’s dive in and demystify the scaling laws that are propelling language models to new heights of performance and capability.

Table of contents: this post consists of the following sections:

- Introduction

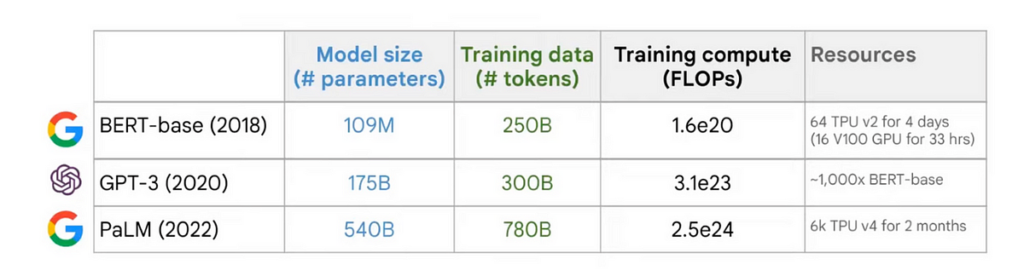

- Overview of recent language model developments

- Key factors in language model scaling