TL;DR

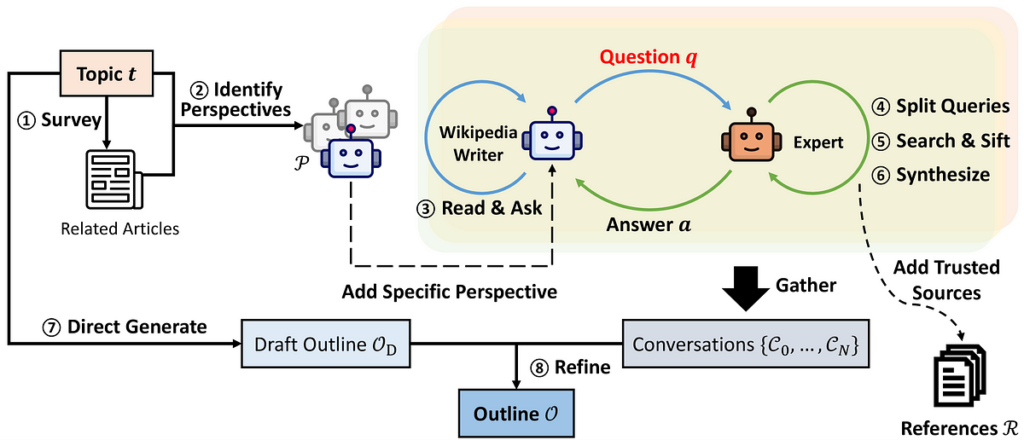

Using LLM agents is becoming more common for tackling multi-step, long-context research tasks where traditional RAG direct prompting methods can sometimes struggle. In this article, we’ll explore a new and promising technique developed by Stanford called Synthesis of Topic Outlines through Retrieval and Multi-perspective Question Asking (STORM), which uses LLM agents to simulate ‘perspective-guided conversations’ to reach complex research goals and generate rich research articles that humans can use in their pre-writing research. STORM was initially developed to gather information from web sources but also supports searching a local document vector store. In this article we’ll see how to implement STORM for AI-supported research on local PDFs, using US FEMA disaster preparedness and assistance documentation.

It’s been amazing to watch how using LLMs for knowledge retrieval has progressed in a relatively short period of time. Since the first paper on Retrieval Augmented Generation (RAG) in 2020, we have seen the ecosystem grow to include a cornucopia of available techniques. One of the more advanced is agentic RAG, where LLM agents iterate and refine document retrieval in order to solve more complex research tasks. It’s similar to how a human might carry out research: exploring a range of different search queries to build a better idea of the context, sometimes discussing the topic with other humans, and synthesizing everything into a final result. Single-turn RAG, even when employing techniques such as query expansion and reranking, can struggle with more complex multi-hop research tasks like this.

There are quite a few patterns for knowledge retrieval using agent frameworks such as Autogen, CrewAI, and LangGraph, as well as specific AI research assistants such as GPT Researcher. In this article, we’ll look at an LLM-powered research writing system from Stanford University, called Synthesis of Topic Outlines through Retrieval and Multi-perspective Question Asking (STORM).

STORM applies a clever technique where LLM agents simulate ‘perspective-guided conversations’ to reach a research goal, and extends ‘outline-driven RAG’ for richer article generation.

Configured to generate Wikipedia-style articles, it was tested with a cohort of 10 experienced Wikipedia editors.

Reception on the whole was positive: 70% of the editors felt that it would be a useful tool in their pre-writing stage when researching a topic. I hope future surveys can include more than 10 editors, but it should be noted that the authors also benchmarked traditional article generation methods using FreshWiki, a dataset of recent high-quality Wikipedia articles, where STORM was found to outperform previous techniques.

STORM is open source and available as a Python package, with additional implementations using frameworks such as LangGraph. More recently STORM has been enhanced to support human-AI collaborative knowledge curation, called Co-STORM, putting a human right in the center of the AI-assisted research loop.

Though it significantly outperforms baseline methods in both automatic and human evaluations, there are some caveats that the authors acknowledge. It isn’t yet multimodal and doesn’t produce experienced human-quality content (I feel it isn’t positioned for this yet, being targeted more at pre-writing research than final articles), and there are some nuances around references that require future work. That said, if you have a deep research task, it’s worth checking out.
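To make the ‘perspective-guided conversations’ idea a little more concrete, here is a toy sketch of the loop: for each perspective, a writer asks a question, a retriever finds sources, and the exchange is logged. This is not STORM’s implementation. The corpus, `ask_question`, `retrieve`, and `simulate_conversations` are all hypothetical stand-ins, and the “LLM” is a fixed string template.

```python
from dataclasses import dataclass, field

# Tiny stand-in corpus; STORM would use web search or a vector store here.
CORPUS = {
    "doc1": "Floods cause costly damage to homes and local businesses.",
    "doc2": "Wildfires force evacuations and destroy community infrastructure.",
    "doc3": "Insurance and federal assistance help households recover financially.",
}


@dataclass
class Turn:
    perspective: str
    question: str
    sources: list = field(default_factory=list)


def ask_question(topic, perspective, turn_number):
    """Hypothetical stand-in for the LLM writer that asks questions."""
    return f"As a {perspective}, what should I know about {topic}? (turn {turn_number})"


def retrieve(question, top_k=2):
    """Rank documents by naive word overlap with the question."""
    words = set(question.lower().split())
    ranked = sorted(
        CORPUS,
        key=lambda doc_id: len(words & set(CORPUS[doc_id].lower().split())),
        reverse=True,
    )
    return ranked[:top_k]


def simulate_conversations(topic, perspectives, max_turns):
    """Run one short question/retrieval conversation per perspective."""
    log = []
    for perspective in perspectives:
        for turn_number in range(1, max_turns + 1):
            question = ask_question(topic, perspective, turn_number)
            log.append(Turn(perspective, question, retrieve(question)))
    return log


conversation_log = simulate_conversations(
    topic="disasters",
    perspectives=["economist", "emergency planner"],
    max_turns=2,
)
for turn in conversation_log:
    print(turn.perspective, "->", turn.sources)
```

In the real system the questions, answers, and outline are all generated by LLMs, so the value comes from different perspectives asking genuinely different questions.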

You can try out STORM online (it’s fun!), configured to perform research using information on the web.

Many organizations will want to use AI research tools with their own internal data. The STORM authors have done a nice job of documenting various approaches to using STORM with different LLM providers and a local vector database, which means it’s possible to run STORM on your own documents.

So let’s try this out!

You can find the code for this article here, which includes environment setup instructions and how to collate some sample documents for this demo.

We will use 34 PDF documents created by the United States Federal Emergency Management Agency (FEMA) to help people prepare for and respond to disasters. These documents perhaps aren’t typically what people would use for writing deep research articles, but I’m interested in seeing how AI can help people prepare for disasters.

... and I have the code already written for processing FEMA reports from some earlier blog posts, which I’ve included in the linked repo above. 😊

Once we have our documents, we need to split them into smaller documents so that STORM can search for specific topics within the corpus. Given STORM is originally aimed at generating Wikipedia-style articles, I opted to try two approaches: (i) simply splitting the documents into sub-documents by page using LangChain’s PyPDFLoader, to create a crude simulation of a Wikipedia page which includes several sub-topics (many FEMA PDFs are single-page documents that don’t look too dissimilar to Wikipedia articles); (ii) further chunking the documents into smaller sections, more likely to cover a discrete sub-topic.

These are of course very basic approaches to parsing, but I wanted to see how results varied depending on the two techniques. Any serious use of STORM on local documents should invest in all the usual fun around parsing optimization.

    import os

    from langchain_community.document_loaders import PyPDFLoader
    from langchain_text_splitters import RecursiveCharacterTextSplitter

    # PDF_DIR is defined in the notebook setup (see repo)


    def parse_pdfs():
        """
        Parses all PDF files in the specified directory and loads their content.

        This function iterates through all files in the directory specified by PDF_DIR,
        checks if they have a .pdf extension, and loads their content using PyPDFLoader.
        The loaded content from each PDF is appended to a list which is then returned.

        Returns:
            list: A list containing the content of all loaded PDF documents.
        """
        docs = []
        pdfs = os.listdir(PDF_DIR)
        print(f"We have {len(pdfs)} pdfs")
        for pdf_file in pdfs:
            if not pdf_file.endswith(".pdf"):
                continue
            print(f"Loading PDF: {pdf_file}")
            file_path = f"{PDF_DIR}/{pdf_file}"
            loader = PyPDFLoader(file_path)
            # PyPDFLoader returns one LangChain document per page
            docs = docs + loader.load()
        print(f"Loaded {len(docs)} documents")
        return docs


    # Approach (i): one document per PDF page
    docs = parse_pdfs()

    # Approach (ii): split pages further into smaller overlapping chunks
    text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
    chunks = text_splitter.split_documents(docs)
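To illustrate what `chunk_size=1000` and `chunk_overlap=200` mean, here is a minimal sketch of fixed-window chunking with overlap. It is a simplified stand-in, not how `RecursiveCharacterTextSplitter` works internally (that splitter also tries to break on separators such as paragraphs and sentences); `split_with_overlap` is a hypothetical helper.

```python
def split_with_overlap(text, chunk_size=1000, chunk_overlap=200):
    """Cut text into fixed-size windows, each sharing chunk_overlap characters with the previous one."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window has reached the end of the text
        if start + chunk_size >= len(text):
            break
    return chunks


# A 2,500-character "page" made of repeating digits
page_text = "".join(str(i % 10) for i in range(2500))
pieces = split_with_overlap(page_text)
print([len(piece) for piece in pieces])
```

The overlap means a sentence that straddles a boundary still appears whole in at least one chunk, at the cost of storing some text twice.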

STORM’s example documentation requires that documents have metadata fields ‘URL’, ‘title’, and ‘description’, where ‘URL’ should be unique. Since we’re splitting up PDF documents, we don’t have titles and descriptions of individual pages and chunks, so I opted to generate these with a simple LLM call.

For URLs, we have them for individual PDF pages, but not for chunks within a page. Sophisticated knowledge retrieval systems can have metadata generated by layout detection models so the text chunk area can be highlighted in the corresponding PDF, but for this demo, I simply added an ‘id’ query parameter to the URL, which does nothing but ensure the URLs are unique for chunks.
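As a small illustration of the uniqueness trick, the sketch below appends a random `id` query parameter and a `#page=` fragment to a PDF URL. `make_unique_url` and the example URL are hypothetical, and the sketch assumes the base URL has no query string of its own.

```python
from urllib.parse import parse_qs, urlsplit
from uuid import uuid4


def make_unique_url(pdf_url, page_number):
    """Append a random id (for uniqueness) and a page fragment (for navigation)."""
    # Assumes pdf_url has no existing '?query' part
    return f"{pdf_url}?id={uuid4()}#page={page_number}"


base = "https://example.org/docs/sample.pdf"  # hypothetical URL
url_a = make_unique_url(base, 3)
url_b = make_unique_url(base, 3)

parts = urlsplit(url_a)
print(parts.path, parts.fragment, url_a != url_b)
```

Two chunks from the same page get different URLs, while the `#page=` fragment lets most PDF viewers open the right page.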

    # llm is a LangChain chat model defined in the notebook setup (see repo)


    def summarize_text(text, prompt):
        """
        Generate a summary of some text based on the user's prompt.

        Args:
            text (str) - the text to analyze
            prompt (str) - prompt instruction on how to summarize the text, eg 'generate a title'

        Returns:
            summary (str) - LLM-generated summary
        """
        messages = [
            (
                "system",
                "You are an assistant that gives very brief single sentence description of text.",
            ),
            ("human", f"{prompt} :: \n\n {text}"),
        ]
        ai_msg = llm.invoke(messages)
        summary = ai_msg.content
        return summary

    from uuid import uuid4

    # df is a DataFrame of FEMA documents with a 'Document' URL column (see repo)


    def enrich_metadata(docs):
        """
        Uses an LLM to populate 'title' and 'description' for text chunks.

        Args:
            docs (list) - list of LangChain documents

        Returns:
            docs (list) - list of LangChain documents with metadata fields populated
        """
        new_docs = []
        for doc in docs:
            # pdf name is last part of doc.metadata['source']
            pdf_name = doc.metadata["source"].split("/")[-1]
            # Find row in df where pdf_name is in URL (literal match, not a regex)
            row = df[df["Document"].str.contains(pdf_name, regex=False)]
            page = doc.metadata["page"] + 1
            # The random id keeps URLs unique when several chunks share a page
            url = f"{row['Document'].values[0]}?id={str(uuid4())}#page={page}"

            # We'll use an LLM to generate a summary and title of the text, used by STORM
            # This is just for the demo, a proper application would have better metadata
            summary = summarize_text(doc.page_content, prompt="Please describe this text:")
            title = summarize_text(
                doc.page_content, prompt="Please generate a 5 word title for this text:"
            )

            doc.metadata["description"] = summary
            doc.metadata["title"] = title
            doc.metadata["url"] = url
            doc.metadata["content"] = doc.page_content
            # print(json.dumps(doc.metadata, indent=2))
            new_docs.append(doc)

        print(f"There are {len(docs)} docs")
        return new_docs


    docs = enrich_metadata(docs)
    chunks = enrich_metadata(chunks)
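Before embedding anything, it can be worth checking that every record really has the fields populated above and that URLs are unique. The following is a minimal sketch operating on plain metadata dictionaries; `check_metadata` and `REQUIRED_FIELDS` are hypothetical names, and the field list simply mirrors the keys set in the demo code.

```python
REQUIRED_FIELDS = ("url", "title", "description", "content")


def check_metadata(records):
    """Return a list of human-readable problems found in metadata dicts."""
    problems = []
    seen_urls = set()
    for index, metadata in enumerate(records):
        # Flag fields that are missing or empty
        for name in REQUIRED_FIELDS:
            if not metadata.get(name):
                problems.append(f"record {index}: missing '{name}'")
        # Flag URLs that have already been used by an earlier record
        url = metadata.get("url")
        if url in seen_urls:
            problems.append(f"record {index}: duplicate url {url}")
        elif url:
            seen_urls.add(url)
    return problems


sample = [
    {"url": "u1", "title": "Flood basics", "description": "d", "content": "c"},
    {"url": "u1", "title": "", "description": "d", "content": "c"},
]
for problem in check_metadata(sample):
    print(problem)
```

With LangChain documents, this could be called as `check_metadata([doc.metadata for doc in chunks])`.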

STORM already supports the Qdrant vector store. I like to use frameworks such as LangChain and LlamaIndex where possible to make it easier to change providers down the road, so I opted to use LangChain to build a local Qdrant vector database persisted to the local file system rather than STORM’s automatic vector database management. I felt this offers more control and is more recognizable to those who already have pipelines for populating document vector stores.

    from langchain_qdrant import QdrantVectorStore
    from qdrant_client import QdrantClient
    from qdrant_client.models import Distance, VectorParams

    # DB_DIR, DB_COLLECTION_NAME, num_vectors and embeddings are defined in the notebook setup (see repo)


    def build_vector_store(doc_type, docs):
        """
        Given a list of LangChain docs, will embed and create a file-system Qdrant vector database.
        The folder includes doc_type in its name to avoid overwriting.

        Args:
            doc_type (str) - String to indicate level of document split, eg 'pages',
                             'chunks'. Used to name the database save folder
            docs (list) - List of LangChain documents to embed and store in vector database

        Returns:
            Nothing returned by function, but db saved to f"{DB_DIR}_{doc_type}".
        """
        print(f"There are {len(docs)} docs")
        save_dir = f"{DB_DIR}_{doc_type}"
        print(f"Saving vectors to directory {save_dir}")

        # A path (rather than a URL) makes Qdrant persist to the local file system
        client = QdrantClient(path=save_dir)
        client.create_collection(
            collection_name=DB_COLLECTION_NAME,
            vectors_config=VectorParams(size=num_vectors, distance=Distance.COSINE),
        )
        vector_store = QdrantVectorStore(
            client=client,
            collection_name=DB_COLLECTION_NAME,
            embedding=embeddings,
        )
        uuids = [str(uuid4()) for _ in range(len(docs))]
        vector_store.add_documents(documents=docs, ids=uuids)


    build_vector_store("pages", docs)
    # Pass the chunked documents here, not the page-level docs
    build_vector_store("chunks", chunks)
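For intuition about what `Distance.COSINE` does at query time, here is a brute-force sketch of cosine-similarity retrieval over a handful of made-up three-dimensional vectors. Qdrant uses approximate nearest-neighbour indexes and real embedding vectors, so this is only an illustration; the vectors and names are invented for the example.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vector, stored_vectors, k=2):
    """Return the ids of the k stored vectors most similar to the query."""
    scored = [
        (cosine_similarity(query_vector, vector), doc_id)
        for doc_id, vector in stored_vectors.items()
    ]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


# Made-up embeddings; real ones have hundreds of dimensions
stored = {
    "flood_page": [0.9, 0.1, 0.0],
    "wildfire_page": [0.1, 0.9, 0.0],
    "insurance_chunk": [0.7, 0.0, 0.7],
}
print(top_k([1.0, 0.0, 0.0], stored, k=2))
```

The `k` argument plays the same role as `search_top_k` in the STORM configuration further down: it caps how many documents are handed back per search.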

The STORM repo has some great examples of different search engines and LLMs, as well as using a Qdrant vector store. I decided to combine various features from these, plus some extra post-processing as follows:

- Added the ability to run with OpenAI or Ollama

- Added support for passing in the vector database directory

- Added a function to parse the references metadata file to add references to the generated polished article. STORM generated these references in a JSON file but didn’t add them to the output article automatically. I’m not sure if this was due to some setting I missed, but references are key to evaluating any AI research technique, so I added this custom post-processing step.

- Finally, I noticed that the examples for open models include more guidance in templates and personas, because open models follow instructions less accurately than commercial models. I liked the transparency of these controls and left them in for OpenAI so that I could adjust them in future work.

Here is everything (see repo notebook for full code) ...

    from dspy import Example


    def set_instructions(runner):
        """
        Adjusts templates and personas for the STORM AI Research algorithm.

        Args:
            runner - STORM runner object

        Returns:
            runner - STORM runner object with extra prompting
        """
        # Open LMs are generally weaker in following output format.
        # One way for mitigation is to add one-shot example to the prompt to exemplify the desired output format.
        # For example, we can add the following examples to the two prompts used in StormPersonaGenerator.
        # Note that the example should be an object of dspy.Example with fields matching the InputField
        # and OutputField in the prompt (i.e., dspy.Signature).
        find_related_topic_example = Example(
            topic="Knowledge Curation",
            related_topics="https://en.wikipedia.org/wiki/Knowledge_management\n"
            "https://en.wikipedia.org/wiki/Information_science\n"
            "https://en.wikipedia.org/wiki/Library_science\n",
        )
        gen_persona_example = Example(
            topic="Knowledge Curation",
            examples="Title: Knowledge management\n"
            "Table of Contents: History\nResearch\n  Dimensions\n  Strategies\n  Motivations\nKM technologies"
            "\nKnowledge barriers\nKnowledge retention\nKnowledge audit\nKnowledge protection\n"
            "  Knowledge protection methods\n    Formal methods\n    Informal methods\n"
            "  Balancing knowledge protection and knowledge sharing\n  Knowledge protection risks",
            personas="1. Historian of Knowledge Systems: This editor will focus on the history and evolution of knowledge curation. They will provide context on how knowledge curation has changed over time and its impact on modern practices.\n"
            "2. Information Science Professional: With insights from 'Information science', this editor will explore the foundational theories, definitions, and philosophy that underpin knowledge curation\n"
            "3. Digital Librarian: This editor will delve into the specifics of how digital libraries operate, including software, metadata, digital preservation.\n"
            "4. Technical expert: This editor will focus on the technical aspects of knowledge curation, such as common features of content management systems.\n"
            "5. Museum Curator: The museum curator will contribute expertise on the curation of physical items and the transition of these practices into the digital realm.",
        )
        runner.storm_knowledge_curation_module.persona_generator.create_writer_with_persona.find_related_topic.demos = [
            find_related_topic_example
        ]
        runner.storm_knowledge_curation_module.persona_generator.create_writer_with_persona.gen_persona.demos = [
            gen_persona_example
        ]

        # A trade-off of adding one-shot example is that it will increase the input length of the prompt. Also, some
        # examples may be very long (e.g., an example for writing a section based on the given information), which may
        # confuse the model. For these cases, you can create a pseudo-example that is short and easy to understand to steer
        # the model's output format.
        # For example, we can add the following pseudo-examples to the prompt used in WritePageOutlineFromConv and
        # ConvToSection.
        write_page_outline_example = Example(
            topic="Example Topic",
            conv="Wikipedia Writer: ...\nExpert: ...\nWikipedia Writer: ...\nExpert: ...",
            old_outline="# Section 1\n## Subsection 1\n## Subsection 2\n"
            "# Section 2\n## Subsection 1\n## Subsection 2\n"
            "# Section 3",
            outline="# New Section 1\n## New Subsection 1\n## New Subsection 2\n"
            "# New Section 2\n"
            "# New Section 3\n## New Subsection 1\n## New Subsection 2\n## New Subsection 3",
        )
        runner.storm_outline_generation_module.write_outline.write_page_outline.demos = [
            write_page_outline_example
        ]
        write_section_example = Example(
            info="[1]\nInformation in document 1\n[2]\nInformation in document 2\n[3]\nInformation in document 3",
            topic="Example Topic",
            section="Example Section",
            output="# Example Topic\n## Subsection 1\n"
            "This is an example sentence [1]. This is another example sentence [2][3].\n"
            "## Subsection 2\nThis is one more example sentence [1].",
        )
        runner.storm_article_generation.section_gen.write_section.demos = [
            write_section_example
        ]

        return runner

    def latest_dir(parent_folder):
        """
        Find the most recent folder (by modified date) in the specified parent folder.

        Args:
            parent_folder (str): The path to the parent folder where the search for the
                most recent folder will be conducted, eg f"{DATA_DIR}/storm_output".

        Returns:
            str: The path to the most recently modified folder within the parent folder.
        """
        # Find most recent folder (by modified date) in parent_folder
        # TODO, find out how exactly storm passes back its output directory to avoid this hack
        folders = [f.path for f in os.scandir(parent_folder) if f.is_dir()]
        folder = max(folders, key=os.path.getmtime)
        return folder

    import json


    def generate_footnotes(folder):
        """
        Generates footnotes from a JSON file containing URL information.

        Args:
            folder (str): The directory path where the 'url_to_info.json' file is located.

        Returns:
            tuple: (footer, refs), where footer is a formatted string containing footnotes
                with URLs and their corresponding titles, and refs maps each reference
                number to its footnote line.
        """
        file = f"{folder}/url_to_info.json"

        with open(file) as f:
            data = json.load(f)

        refs = {}
        for rec in data["url_to_unified_index"]:
            val = data["url_to_unified_index"][rec]
            title = data["url_to_info"][rec]["title"].replace('"', "")
            refs[val] = f"- {val} [{title}]({rec})"

        # Emit footnotes in reference-number order
        keys = list(refs.keys())
        keys.sort()

        footer = ""
        for key in keys:
            footer += f"{refs[key]}\n"

        return footer, refs

    def generate_markdown_article(output_dir):
        """
        Generates a markdown article by reading a text file, appending footnotes,
        and saving the result as a markdown file.

        The function performs the following steps:
        1. Retrieves the latest directory using the `latest_dir` function.
        2. Generates footnotes for the article using the `generate_footnotes` function.
        3. Reads the content of a text file named 'storm_gen_article_polished.txt'
           located in the latest directory.
        4. Appends the generated footnotes to the end of the article content.
        5. Writes the modified content to a new markdown file named
           STORM_OUTPUT_MARKDOWN_ARTICLE in the same directory.

        Args:
            output_dir (str) - The directory where the STORM output is stored.
        """
        folder = latest_dir(output_dir)
        footnotes, refs = generate_footnotes(folder)

        with open(f"{folder}/storm_gen_article_polished.txt") as f:
            text = f.read()

        # Update text references like [10] to link to URLs
        for ref in refs:
            print(f"Ref: {ref}, Ref_num: {refs[ref]}")
            # Footnote lines look like "- N [title](url)"; split on the last "](" so
            # parentheses inside an LLM-generated title don't break URL extraction
            url = refs[ref].rsplit("](", 1)[1][:-1]
            text = text.replace(f"[{ref}]", f"[[{ref}]({url})]")

        text += f"\n\n## References\n\n{footnotes}"

        with open(f"{folder}/{STORM_OUTPUT_MARKDOWN_ARTICLE}", "w") as f:
            f.write(text)
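As an alternative to looping over references with string replacement, the same linking step can be sketched as a single regex pass. This is a hedged variant, not what the demo uses: `link_citations` is a hypothetical helper, the URLs are invented, and it assumes the reference numbers are integers.

```python
import re


def link_citations(text, index_to_url):
    """Turn markers like [10] into markdown links, leaving unknown numbers untouched."""

    def replace(match):
        number = int(match.group(1))
        url = index_to_url.get(number)
        if url is None:
            return match.group(0)  # no URL known for this marker
        return f"[[{number}]({url})]"

    return re.sub(r"\[(\d+)\]", replace, text)


urls = {
    1: "https://example.org/a.pdf#page=1",  # hypothetical URLs
    10: "https://example.org/b.pdf#page=2",
}
linked = link_citations("Floods are costly [1][10]. Unknown source [7].", urls)
print(linked)
```

Because `re.sub` scans the original text once, an already-inserted link is never rewritten a second time, and `[1]` can never be confused with `[10]`.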

    import sys

    from knowledge_storm import (
        STORMWikiLMConfigs,
        STORMWikiRunner,
        STORMWikiRunnerArguments,
    )
    from knowledge_storm.lm import AzureOpenAIModel, OllamaClient, OpenAIModel
    from knowledge_storm.rm import VectorRM


    def run_storm(topic, model_type, db_dir):
        """
        This function runs the STORM AI Research algorithm using data
        in a Qdrant local database.

        Args:
            topic (str) - The research topic to generate the article for
            model_type (str) - One of 'openai' and 'ollama' to control LLM used
            db_dir (str) - Directory where the Qdrant vector database is
        """
        if model_type not in ["openai", "ollama"]:
            print("Unsupported model_type")
            sys.exit()

        # Clear lock so can be read
        if os.path.exists(f"{db_dir}/.lock"):
            print(f"Removing lock file {db_dir}/.lock")
            os.remove(f"{db_dir}/.lock")

        print(f"Loading Qdrant vector store from {db_dir}")

        engine_lm_configs = STORMWikiLMConfigs()

        if model_type == "openai":
            print("Using OpenAI models")

            # Initialize the language model configurations
            openai_kwargs = {
                "api_key": os.getenv("OPENAI_API_KEY"),
                "temperature": 1.0,
                "top_p": 0.9,
            }

            ModelClass = (
                OpenAIModel
                if os.getenv("OPENAI_API_TYPE") == "openai"
                else AzureOpenAIModel
            )
            # If you are using Azure service, make sure the model name matches your own deployed model name.
            # The default name here is only used for demonstration and may not match your case.
            gpt_35_model_name = (
                "gpt-4o-mini"
                if os.getenv("OPENAI_API_TYPE") == "openai"
                else "gpt-35-turbo"
            )
            gpt_4_model_name = "gpt-4o"
            if os.getenv("OPENAI_API_TYPE") == "azure":
                openai_kwargs["api_base"] = os.getenv("AZURE_API_BASE")
                openai_kwargs["api_version"] = os.getenv("AZURE_API_VERSION")

            # STORM is a LM system so different components can be powered by different models.
            # For a good balance between cost and quality, you can choose a cheaper/faster model for conv_simulator_lm
            # which is used to split queries, synthesize answers in the conversation. We recommend using stronger models
            # for outline_gen_lm which is responsible for organizing the collected information, and article_gen_lm
            # which is responsible for generating sections with citations.
            conv_simulator_lm = ModelClass(
                model=gpt_35_model_name, max_tokens=10000, **openai_kwargs
            )
            question_asker_lm = ModelClass(
                model=gpt_35_model_name, max_tokens=10000, **openai_kwargs
            )
            outline_gen_lm = ModelClass(
                model=gpt_4_model_name, max_tokens=10000, **openai_kwargs
            )
            article_gen_lm = ModelClass(
                model=gpt_4_model_name, max_tokens=10000, **openai_kwargs
            )
            article_polish_lm = ModelClass(
                model=gpt_4_model_name, max_tokens=10000, **openai_kwargs
            )

        elif model_type == "ollama":
            print("Using Ollama models")

            ollama_kwargs = {
                # "model": "llama3.2:3b",
                "model": "llama3.1:latest",
                # "model": "qwen2.5:14b",
                "port": "11434",
                "url": "http://localhost",
                "stop": (
                    "\n\n---",
                ),  # dspy uses "\n\n---" to separate examples. Open models sometimes generate this.
            }

            conv_simulator_lm = OllamaClient(max_tokens=500, **ollama_kwargs)
            question_asker_lm = OllamaClient(max_tokens=500, **ollama_kwargs)
            outline_gen_lm = OllamaClient(max_tokens=400, **ollama_kwargs)
            article_gen_lm = OllamaClient(max_tokens=700, **ollama_kwargs)
            article_polish_lm = OllamaClient(max_tokens=4000, **ollama_kwargs)

        engine_lm_configs.set_conv_simulator_lm(conv_simulator_lm)
        engine_lm_configs.set_question_asker_lm(question_asker_lm)
        engine_lm_configs.set_outline_gen_lm(outline_gen_lm)
        engine_lm_configs.set_article_gen_lm(article_gen_lm)
        engine_lm_configs.set_article_polish_lm(article_polish_lm)

        max_conv_turn = 4
        max_perspective = 3
        search_top_k = 10
        max_thread_num = 1
        device = "cpu"
        vector_db_mode = "offline"

        do_research = True
        do_generate_outline = True
        do_generate_article = True
        do_polish_article = True

        # Initialize the engine arguments
        output_dir = f"{STORM_OUTPUT_DIR}/{db_dir.split('db_')[1]}"
        print(f"Output directory: {output_dir}")

        engine_args = STORMWikiRunnerArguments(
            output_dir=output_dir,
            max_conv_turn=max_conv_turn,
            max_perspective=max_perspective,
            search_top_k=search_top_k,
            max_thread_num=max_thread_num,
        )

        # Setup VectorRM to retrieve information from your own data
        rm = VectorRM(
            collection_name=DB_COLLECTION_NAME,
            embedding_model=EMBEDDING_MODEL,
            device=device,
            k=search_top_k,
        )

        # initialize the vector store, either online (store the db on Qdrant server) or offline (store the db locally):
        if vector_db_mode == "offline":
            rm.init_offline_vector_db(vector_store_path=db_dir)

        # Initialize the STORM Wiki Runner
        runner = STORMWikiRunner(engine_args, engine_lm_configs, rm)

        # Set instructions for the STORM AI Research algorithm
        runner = set_instructions(runner)

        # run the pipeline
        runner.run(
            topic=topic,
            do_research=do_research,
            do_generate_outline=do_generate_outline,
            do_generate_article=do_generate_article,
            do_polish_article=do_polish_article,
        )
        runner.post_run()
        runner.summary()

        generate_markdown_article(output_dir)

We’re ready to run STORM!

For the research topic, I picked something that would be challenging to answer with a typical RAG system and which wasn’t well covered in the PDF data, so we can see how well attribution works ...

“Examine the financial impact of different types of disasters and how these impact communities”

Running this for both databases ...

    query = "Examine the financial impact of different types of disasters and how these impact communities"

    for doc_type in ["pages", "chunks"]:
        db_dir = f"{DB_DIR}_{doc_type}"
        # The research topic is the first positional argument of run_storm
        run_storm(query, model_type="openai", db_dir=db_dir)

Using OpenAI, the process took about 6 minutes on my MacBook Pro M2 (16GB memory). I would note that other, simpler queries where we have more supporting content in the underlying documents were much faster (< 30 seconds in some cases).

STORM generates a set of output files ...

It’s interesting to review the conversation_log.json and llm_call_history.json to see the perspective-guided conversations component.

For our research topic ...

“Examine the financial impact of different types of disasters and how these impact communities”

You can find the generated articles here ...

Some quick observations

This demo doesn’t get into a formal evaluation, which can be more involved than for single-hop RAG systems, but here are some subjective observations which may or may not be useful ...

- Parsing by page or by smaller chunks produces reasonable pre-reading reports that a human could use for researching areas related to the financial impact of disasters

- Both parsing approaches provided citations throughout, but using smaller chunks seemed to result in fewer. See for example the Summary sections in both of the above articles. The more references to ground the analysis, the better!

- Parsing by smaller chunks seemed to sometimes create citations that were not relevant, one of the citation challenges mentioned in the STORM paper. See for example the citation for source ‘10’ in the summary section, which doesn’t correspond with the reference sentence.

- Overall, as expected for an algorithm developed on Wiki articles, splitting text by PDF page seemed to produce a more cohesive and grounded article (to me!)
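The “fewer citations” observation above is subjective, but a rough count is easy to get. The sketch below tallies `[n]` style markers in an article’s text; `citation_counts` is a hypothetical helper and the sample text is invented, so treat it as a starting point for comparing the two generated articles rather than a proper evaluation.

```python
import re
from collections import Counter


def citation_counts(article_text):
    """Count how often each numbered citation marker, like [3], appears."""
    return Counter(int(number) for number in re.findall(r"\[(\d+)\]", article_text))


sample_article = "Floods are costly [1]. Recovery is slow [1][2]. Insurance helps [3]."
counts = citation_counts(sample_article)
print(f"{len(counts)} distinct sources, {sum(counts.values())} citations in total")
```

A count says nothing about whether each citation actually supports its sentence, which still needs a human read.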

Even though the input research topic wasn’t covered in great depth in the underlying documents, the generated report was a great starting point for further human analysis.

We didn’t get into Co-STORM in this article, which brings a human into the loop. This seems a great direction for AI-empowered research and something I’m investigating.

Future work could also look at adjusting the system prompts and personas to the business case. Currently, these prompts are targeted at a Wikipedia-like process ...

Another possible direction is to extend STORM’s connectors beyond Qdrant, for example to include other vector stores or, better still, generic support for LangChain and LlamaIndex vector stores. The authors encourage this type of thing; a PR involving this file may be in my future.

Running STORM without an internet connection would be an amazing thing, as it opens up possibilities for AI assistance in the field. As you can see from the demo code, I added the ability to run STORM with locally hosted Ollama models, but the token throughput rate was too low for the LLM agent discussion phase, so the system didn’t complete on my laptop with small quantized models. A topic for a future blog post perhaps!

Finally, though the online user interface is very nice, the demo UI that comes with the repo is very basic and not something that could be used in production. Perhaps the Stanford team might release the advanced interface (maybe it’s already somewhere?); if not, then work would be needed here.

This is a quick demo to hopefully help people get started with using STORM on their own documents. I haven’t gone into systematic evaluation, something that would obviously need to be done if using STORM in a live environment. That said, I was impressed at how it seems to be able to take a relatively nuanced research topic and generate well-cited pre-writing research content that would help me in my own research.

Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks, Lewis et al., 2020

Retrieval-Augmented Generation for Large Language Models: A Survey, Gao et al., 2024

Assisting in Writing Wikipedia-like Articles From Scratch with Large Language Models, Shao et al., 2024

Into the Unknown Unknowns: Engaged Human Learning through Participation in Language Model Agent Conversations, Jiang et al., 2024

MultiHop-RAG: Benchmarking Retrieval-Augmented Generation for Multi-Hop Queries, Tang et al., 2024

You can find the code for this article here
