AI models, notably Large Language Models (LLMs), need large amounts of GPU memory. For example, in the case of the LLaMA 3.1 model, released in July 2024, the memory requirements are:

- The 8 billion parameter model needs 16 GB of memory with 16-bit floating point weights

- The larger 405 billion parameter model needs 810 GB using 16-bit floats
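These figures follow from multiplying the parameter count by the number of bytes per weight. The sketch below shows the arithmetic; the function name is illustrative, and it counts only the weights, ignoring any other memory used at run time.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Memory needed to hold the weights alone, in GB (1 GB = 10**9 bytes)."""
    bytes_per_weight = bits_per_weight / 8
    return num_params * bytes_per_weight / 1e9

# Parameter counts quoted above for LLaMA 3.1, stored as 16-bit floats.
for name, params in [("8B", 8e9), ("405B", 405e9)]:
    print(f"{name}: {weight_memory_gb(params, 16):.0f} GB at 16 bits")
```

Passing a smaller `bits_per_weight` shows directly how much memory a lower-precision representation would save.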

In a full-sized machine learning model, the weights are represented as 32-bit floating point numbers. Modern models have hundreds of millions to tens (or even hundreds) of billions of weights. Training and running such large models is very resource-intensive:

- It takes a lot of compute (processing power).

- It requires large amounts of GPU memory.

- It consumes large amounts of energy. In particular, the biggest contributors to this energy consumption are:

– Performing a large number of computations (matrix multiplications) using 32-bit floats

– Data transfer: copying the model data from memory to the processing units.

Being highly resource-intensive has two main drawbacks:

- Training: Models with large GPU requirements are expensive and slow to train. This limits new research and development to groups with big budgets.

- Inference: Large models need specialized (and expensive) hardware (dedicated GPU servers) to run. They cannot be run on consumer devices like regular laptops and mobile phones.

Thus, end-users and personal devices must necessarily access AI models via a paid API service. This leads to a suboptimal user experience for both consumer apps and their developers:

- It introduces latency due to network access and server load.

- It also introduces budget constraints on developers building AI-based software.

Being able to run AI models locally, on consumer devices, would mitigate these problems.

Reducing the size of AI models is therefore an active area of research and development. This is the first of a series of articles discussing ways of reducing model size, in particular by a method called quantization. These articles are based on studying the original research papers. Throughout the series, you will find links to the PDFs of the reference papers.

- The current introductory article gives an overview of different approaches to reducing model size. It introduces quantization as the most promising method and as a subject of current research.

- Quantizing the Weights of AI Models illustrates the arithmetic of quantization using numerical examples.

- Quantizing Neural Network Models discusses the architecture and process of applying quantization to neural network models, including the basic mathematical principles. In particular, it focuses on how to train models to perform well during inference with quantized weights.

- Different Approaches to Quantization explains different types of quantization, such as quantizing to different precisions, the granularity of quantization, deterministic and stochastic quantization, and different quantization methods used while training models.

- Extreme Quantization: 1-bit AI Models is about binary quantization, which involves reducing the model weights from 32-bit floats to binary numbers. It shows the mathematical principles of binary quantization and summarizes the approach adopted by the first researchers who implemented binary quantization of transformer-based models (BERT).

- Understanding 1-bit Large Language Models presents recent work on quantizing large language models (LLMs) to use 1-bit (binary) weights, i.e. {-1, 1}. In particular, the focus is on BitNet, which was the first successful attempt to redesign the transformer architecture to use 1-bit weights.

- Understanding 1.58-bit Language Models discusses the quantization of neural network models, in particular LLMs, to use ternary weights ({-1, 0, +1}). This is also referred to as 1.58-bit quantization, and it has delivered very promising results. This topic attracted much attention in the tech press in the first half of 2024. The background explained in the previous articles helps to get a deeper understanding of how and why LLMs are quantized to 1.58 bits.

Not relying on expensive hardware would make AI applications more accessible and accelerate the development and adoption of new models. Various methods have been proposed and tried to tackle this challenge of building high-performing yet small-sized models.

Low-rank decomposition

Neural networks express their weights in the form of high-dimensional tensors. It is mathematically possible to decompose a high-ranked tensor into a set of smaller-dimensional tensors. This makes the computations more efficient. This is known as tensor rank decomposition. For example, in computer vision models, weights are typically 4D tensors.

Lebedev et al., in their 2014 paper titled Speeding-Up Convolutional Neural Networks Using Fine-Tuned CP-Decomposition, demonstrate that using a common decomposition technique, Canonical Polyadic Decomposition (CP Decomposition), convolutions with 4D weight tensors (which are common in computer vision models) can be reduced to a series of four convolutions with smaller 2D tensors. Low Rank Adaptation (LoRA) is a modern technique (proposed in 2021) based on a similar approach applied to Large Language Models.
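To make the idea concrete, here is a minimal sketch using an ordinary 2D matrix rather than the 4D tensors and CP decomposition used in the paper. The factors `U` and `V` are made-up values chosen so that their product has rank 1. It shows that applying the two small factors in sequence gives the same result as applying the full matrix, with fewer stored values and fewer multiplications.

```python
def matvec(m, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in m]

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(p * q for p, q in zip(row, col)) for col in cols] for row in a]

# Hypothetical factors: U is 4x1 and V is 1x4, so W = U V is a 4x4 matrix of rank 1.
U = [[1.0], [2.0], [3.0], [4.0]]
V = [[0.5, -1.0, 2.0, 0.0]]
W = matmul(U, V)

x = [1.0, 2.0, 3.0, 4.0]
full = matvec(W, x)                 # 4 * 4 = 16 multiplications
factored = matvec(U, matvec(V, x))  # 4 + 4 = 8 multiplications

params_full = len(W) * len(W[0])                           # values stored for W
params_factored = len(U) * len(U[0]) + len(V) * len(V[0])  # values stored for U and V
print(full == factored, params_full, params_factored)
```

Real weight matrices are rarely exactly low-rank, so in practice the factors only approximate the original weights, and the model is typically fine-tuned afterwards.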

Pruning

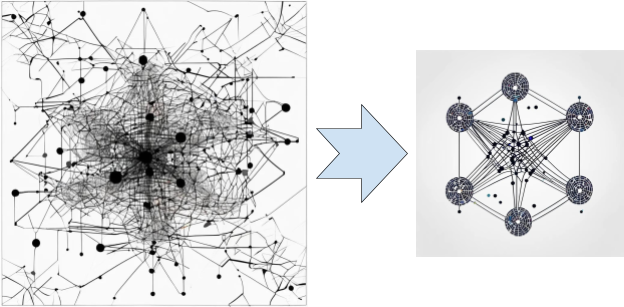

Another way to reduce network size and complexity is by eliminating connections from a network. In a 1989 paper titled Optimal Brain Damage, Le Cun et al. propose deleting connections with small magnitudes and retraining the model. Applied iteratively, this approach removes half or more of the weights of a neural network. The full paper is available on the website of Le Cun, who (as of 2024) is the chief AI scientist at Meta (Facebook).

In the context of large language models, pruning is especially challenging. SparseGPT, first shared by Frantar et al. in a 2023 paper titled SparseGPT: Massive Language Models Can be Accurately Pruned in One-Shot, is a well-known pruning method that successfully reduces the size of LLMs by half without losing much accuracy. Pruning LLMs to a fraction of their original size has not yet been feasible. The article, Pruning for Neural Networks, by Lei Mao, gives an introduction to this technique.
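The simplest form of this idea, zeroing the weights with the smallest magnitudes, can be sketched as follows. This is a single pruning step on a toy list of made-up weights, without the retraining described above, and it is not the SparseGPT algorithm.

```python
def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest absolute value."""
    num_to_prune = int(len(weights) * fraction)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:num_to_prune]:
        pruned[i] = 0.0
    return pruned

weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = prune_by_magnitude(weights, 0.5)
print(pruned)
```

The zeroed weights only save memory and compute if the model is then stored and executed in a sparse format.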

Knowledge Distillation

Knowledge distillation is a way of training a smaller (student) neural network to replicate the behavior of a larger and more complex (teacher) neural network. In many cases, the student is trained based on the final prediction layer of the teacher network. In other approaches, the student is also trained based on the intermediate hidden layers of the teacher. Knowledge distillation has been used successfully in some cases, but in general, the student networks are unable to generalize to new unseen data. They tend to be overfitted to replicate the teacher's behavior within the training dataset.
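As a rough illustration of training on the teacher's final prediction layer, the sketch below computes a loss that is small when the student's output distribution is close to the teacher's. The temperature parameter and the example logits are assumptions taken from common practice, not from this article, and a real training loop would minimize this loss with gradient descent.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Cross-entropy of the student's soft predictions against the teacher's."""
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    return -sum(t * math.log(s) for t, s in zip(teacher_probs, student_probs))

# Hypothetical logits for a three-class problem.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]
far_student = [0.5, 4.0, 1.0]

print(distillation_loss(close_student, teacher))
print(distillation_loss(far_student, teacher))
```

The student whose logits resemble the teacher's gets the lower loss, which is the signal the student is trained on.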

Quantization

In a nutshell, quantization involves starting with a model with 32-bit or 16-bit floating point weights and applying various techniques to reduce the precision of the weights, to 8-bit integers or even binary (1-bit), without sacrificing model accuracy. Lower precision weights have lower memory and computational needs.

The rest of this article, from the next section onwards, and the rest of this series give an in-depth understanding of quantization.

Hybrid

It is also possible to apply different compression techniques in sequence. Han et al., in their 2016 paper titled Compressing Deep Neural Networks with Pruning, Trained Quantization, and Huffman Coding, apply pruning followed by quantization followed by Huffman coding to compress the AlexNet model by a factor of 35X, reducing the model size from 240 MB to 6.9 MB without significant loss of accuracy. As of July 2024, such approaches have yet to be tried on low-bit LLMs.

The “size” of a model is mainly determined by two factors:

- The number of weights (or parameters)

- The size (length in bits) of each parameter.

It is well-established that the number of parameters in a model is crucial to its performance; hence, reducing the number of parameters is not a viable approach. Thus, attempting to reduce the length of each weight is a more promising angle to explore.

Traditionally, LLMs are trained with 32-bit weights. Models with 32-bit weights are often referred to as full-sized models. Reducing the length (or precision) of model parameters is called quantization. 16-bit and 8-bit quantization are common approaches. More radical approaches involve quantizing to 4 bits, 2 bits, and even 1 bit. To understand how higher precision numbers are quantized to lower precision numbers, refer to Quantizing the Weights of AI Models, with examples of quantizing model weights.

Quantization helps with reducing the memory requirements and reducing the computational cost of running the model. Typically, model weights are quantized. It is also common to quantize the activations (in addition to quantizing the weights). The function that maps the floating point weights to their lower precision integer versions is called the quantizer, or quantization function.
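A quantizer can be as simple as a scale-and-round function. The sketch below uses symmetric "absmax" scaling to 8-bit integers, which is one common choice and not necessarily the scheme used in the later articles; the function names and sample weights are illustrative.

```python
def quantize_absmax(weights, num_bits=8):
    """Map floats to signed integers in [-(2**(b-1) - 1), 2**(b-1) - 1]."""
    q_max = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / q_max if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate floating point values from the integers."""
    return [q * scale for q in quantized]

weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_absmax(weights)
restored = dequantize(q, scale)
print(q, scale)
print(restored)
```

The restored values differ from the originals by at most half a quantization step (`scale / 2`). That difference is the information lost to quantization.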

Quantization in Neural Networks

Simplistically, the linear and non-linear transformation applied by a neural network layer can be expressed as:

$$z = \sigma(W a + B)$$

In the above expression:

- z denotes the output of the non-linear function. It is also referred to as the activation.

- Sigma (σ) is the non-linear activation function. It is often the sigmoid function or the tanh function.

- W is the weight matrix of that layer

- a is the input vector

- B is the bias vector

- The matrix multiplication of the weight and the input is referred to as convolution. Adding the bias to the product matrix is called accumulation.

- The term passed to the sigma (activation) function is called a Multiply-Accumulate (MAC) operation.
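The terms above can be traced in a small pure-Python sketch of one layer. The sigmoid is used as the activation, and the weights, inputs, and biases are made-up values. The accumulator is initialized with the bias, which gives the same result as adding the bias after the products are summed.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def layer_forward(W, a, B):
    """Compute z = sigma(W a + B) one output element at a time."""
    z = []
    for row, bias in zip(W, B):
        acc = bias            # the accumulator starts at the bias
        for w, x in zip(row, a):
            acc += w * x      # one multiply-accumulate step
        z.append(sigmoid(acc))
    return z

W = [[0.5, -1.0], [2.0, 1.0]]
a = [1.0, 2.0]
B = [1.5, -4.0]
z = layer_forward(W, a, B)
print(z)
```

The inner loop runs once per weight, which is why the number of multiplications grows with the number of weights in the model.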

Much of the computational workload in running neural networks comes from the convolution operation, which involves the multiplication of many floating point numbers. Large models with many weights have a very large number of convolution operations.

This computational cost could potentially be reduced by doing the multiplication in lower-precision integers instead of floating-point numbers. In an extreme case, as discussed in Understanding 1.58-bit Language Models, the 32-bit weights could potentially be represented by ternary numbers {-1, 0, +1} and the multiplication operations would be replaced by much simpler addition and subtraction operations. This is the intuition behind quantization.
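The ternary case is easy to see in code. In the sketch below (function names and values are illustrative), the dot product with weights restricted to {-1, 0, +1} uses only additions and subtractions, and gives the same result as the ordinary multiply-based version.

```python
def dot_float(weights, inputs):
    """Ordinary dot product: one multiplication per weight."""
    return sum(w * x for w, x in zip(weights, inputs))

def dot_ternary(weights, inputs):
    """Dot product where each weight is -1, 0, or +1: no multiplications needed."""
    total = 0.0
    for w, x in zip(weights, inputs):
        if w == 1:
            total += x
        elif w == -1:
            total -= x
        # w == 0: the input is skipped entirely
    return total

ternary_weights = [1, 0, -1, 1]
inputs = [0.5, 2.0, 1.5, 3.0]
print(dot_float(ternary_weights, inputs), dot_ternary(ternary_weights, inputs))
```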

The computational cost of digital arithmetic is quadratically related to the number of bits. As studied by Siddegowda et al. in their paper on Neural Network Quantization (Section 2.1), using 8-bit integers instead of 32-bit floats leads to 16x higher performance in terms of energy consumption: the bit width shrinks by a factor of 4, and 4 squared is 16. When there are billions of weights, the cost savings are very significant.

The quantizer function maps the high-precision weights (typically 32-bit floating point) to lower-precision integer weights.

The “knowledge” the model has acquired via training is represented by the value of its weights. When these weights are quantized to lower precision, a portion of their information is also lost. The challenge of quantization is to reduce the precision of the weights while maintaining the accuracy of the model.

One of the main reasons some quantization techniques are effective is that the relative values of the weights and the statistical properties of the weights are more important than their actual values. This is especially true for large models with millions or billions of weights. Later articles on quantized BERT models (BinaryBERT and BiBERT), on BitNet (a transformer LLM quantized down to binary weights), and on BitNet b1.58 (which quantizes transformers to use ternary weights) illustrate the use of successful quantization techniques. A Visual Guide to Quantization, by Maarten Grootendorst, has many illustrations and graphic depictions of quantization.

Inference means using an AI model to generate predictions, such as the classification of an image, or the completion of a text string. When using a full-precision model, the entire data flow through the model is in 32-bit floating point numbers. When running inference through a quantized model, many parts, but not all, of the data flow are in lower precision.

The bias is typically not quantized because the number of bias terms is much smaller than the number of weights in a model. So, the cost savings are not enough to justify the overhead of quantization. The accumulator's output is in high precision. The output of the activation is also in higher precision.
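The mixed-precision data flow can be sketched for the linear part of a single layer. The scales, weights, and function names below are assumptions for illustration. Python integers stand in for the 8-bit operands and the wider integer accumulator, and the bias stays in floating point.

```python
def quantize(values, scale):
    """Round floats to integers using a given scale, clamped to the 8-bit range [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]

def quantized_layer(W_q, w_scale, a, a_scale, B):
    """Linear part of a layer with integer weights and inputs and a float bias."""
    a_q = quantize(a, a_scale)  # low-precision inputs
    out = []
    for row, bias in zip(W_q, B):
        acc = 0                 # integer accumulator, wider than 8 bits
        for w, x in zip(row, a_q):
            acc += w * x        # integer multiply-accumulate
        # Rescale to floating point, then add the unquantized bias.
        out.append(acc * w_scale * a_scale + bias)
    return out

# Hypothetical float weights [[1.0, -0.5], [0.25, 2.0]] quantized with scale 0.25.
w_scale, a_scale = 0.25, 0.5
W_q = quantize([1.0, -0.5], w_scale), quantize([0.25, 2.0], w_scale)
a = [1.0, 2.0]
B = [0.5, -0.25]
out = quantized_layer(W_q, w_scale, a, a_scale, B)
print(out)
```

With these particular values the quantization is exact, so the output matches the floating point result. With arbitrary weights, the two would differ by a small rounding error.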

This article discussed the need to reduce the size of AI models and gave a high-level overview of ways to achieve reduced model sizes. It then introduced the basics of quantization, a method that is currently the most successful in reducing model sizes while managing to maintain an acceptable level of accuracy.

The goal of this series is to give you enough background to appreciate the extreme quantization of language models, starting from simpler models like BERT before finally discussing 1-bit LLMs and the recent work on 1.58-bit LLMs. To this end, the next few articles in this series present a semi-technical deep dive into the different subtopics like the mathematical operations behind quantization and the process of training quantized models. It is important to understand that because this is an active area of research and development, there are few standard procedures, and different researchers adopt innovative methods to achieve better results.