Time series, and more specifically time series forecasting, is a well-known data science problem among professionals and business users alike.

Many forecasting methods exist. For easier comprehension and a better overview, they can be grouped into statistical and machine learning methods. The demand for forecasting is so high that the available options are abundant.

Machine learning methods are considered a state-of-the-art approach in time series forecasting and are increasingly popular. They can capture complex non-linear relationships within the data and generally yield higher forecasting accuracy [1]. One popular area of machine learning is neural networks. For time series analysis specifically, recurrent neural networks have been developed and applied to solve forecasting problems [2].

Data science enthusiasts might find the complexity behind such models intimidating, and as one of you, I share that feeling. However, this article aims to show that despite the latest developments in machine learning, it is not necessarily worth reaching for the most complex tool when looking for a solution to a particular problem. Well-established methods enhanced with powerful feature engineering techniques can still provide satisfactory results.

More specifically, I apply a Multi-Layer Perceptron model and share the code and results, so you can get hands-on experience with engineering time series features and forecasting effectively.

What I aim to provide for fellow self-taught professionals can be summarized in the following points:

- forecasting based on a real-world problem and real-world data

- how to engineer time series features that capture temporal patterns

- building an MLP model that uses mixed variables: floats and integers (treated as categoricals via embedding)

- using the MLP for point forecasting

- using the MLP for multi-step forecasting

- assessing feature importance with the permutation feature importance method

- retraining the model on subsets of grouped features (several groups, one model trained per group) to refine the feature importance of grouped features

- evaluating the model by comparing it to an UnobservedComponents model
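To make the feature engineering and mixed-variable points above more concrete, here is a minimal pandas sketch. It assumes an hourly series with a DatetimeIndex and builds float lag features alongside integer calendar features (hour, day of week, month), the kind of integer columns that could later be fed to an embedding layer. The helper name make_time_features and the chosen lags (1, 24 and 168 hours) are hypothetical illustrations, not the features engineered later in this article.

```python
import numpy as np
import pandas as pd


def make_time_features(series: pd.Series, lags=(1, 24, 168)) -> pd.DataFrame:
    """Hypothetical helper: float lag features plus integer calendar features.

    Assumes `series` has an hourly DatetimeIndex.
    """
    feats = pd.DataFrame(index=series.index)
    # Float features: the target shifted back by an hour, a day and a week
    for lag in lags:
        feats[f"lag_{lag}"] = series.shift(lag)
    # Integer calendar features, candidates for embedding as categoricals
    feats["hour"] = series.index.hour
    feats["dayofweek"] = series.index.dayofweek
    feats["month"] = series.index.month
    # The first max(lags) rows have no full lag history, so drop them
    return feats.dropna()


# Toy usage: two weeks of hourly values 0, 1, 2, ...
idx = pd.date_range("2018-01-01", periods=24 * 14, freq="h")
y = pd.Series(np.arange(len(idx), dtype=float), index=idx)
X = make_time_features(y)
```

On two weeks of hourly data this returns one week of rows, because the first week lacks a complete 168-hour lag.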

Please note that this article assumes prior knowledge of some key technical terms and does not explain them in detail. These terms are listed below, with references that can be consulted for clarity:

- Time Series [3]

- Prediction [4]: in this context, used to denote model outputs in the training period

- Forecast [4]: in this context, used to denote model outputs in the test period

- Feature Engineering [5]

- Autocorrelation [6]

- Partial Autocorrelation [6]

- MLP (Multi-Layer Perceptron) [7]

- Input Layer [7]

- Hidden Layer [7]

- Output Layer [7]

- Embedding [8]

- State Space Models [9]

- Unobserved Components Model [9]

- RMSE (Root Mean Squared Error) [10]

- Feature Importance [11]

- Permutation Feature Importance [11]
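Two of the terms above, RMSE and permutation feature importance, fit together naturally: shuffle one input column at a time and measure how much the RMSE grows. The following is a minimal plain NumPy sketch of that idea. It assumes the fitted model is available as a predict callable on a 2-D array. The helper names rmse and permutation_importance_rmse are hypothetical, and this is not scikit-learn's permutation_importance (imported below), which offers more options.

```python
import numpy as np


def rmse(y_true, y_pred) -> float:
    """Root mean squared error."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))


def permutation_importance_rmse(predict, X, y, n_repeats=5, seed=0):
    """Hypothetical helper: mean RMSE increase when each column of X is shuffled.

    `predict` is assumed to map a 2-D array to 1-D predictions.
    """
    rng = np.random.default_rng(seed)
    baseline = rmse(y, predict(X))
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        scores = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Shuffling column j breaks its link with the target
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            scores.append(rmse(y, predict(X_perm)))
        # A larger error increase means the model relies more on feature j
        importances[j] = np.mean(scores) - baseline
    return importances


# Toy usage: the third feature is ignored by the predictor
rng = np.random.default_rng(42)
X = rng.normal(size=(500, 3))
y = 3.0 * X[:, 0] + 0.5 * X[:, 1]
predict = lambda A: 3.0 * A[:, 0] + 0.5 * A[:, 1]
imp = permutation_importance_rmse(predict, X, y)
```

For this toy linear predictor, the third importance is exactly zero, while the first two scale with their coefficients.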

The essential packages used in the analysis are numpy and pandas for data manipulation, plotly for interactive charts, statsmodels for statistics and state space modeling, scikit-learn for preprocessing and permutation feature importance, and finally tensorflow for the MLP architecture.

Note: due to technical limitations, I provide the code snippets for interactive plotting, but the figures shown here are static.

```python
import opendatasets as od
import numpy as np
import pandas as pd
import plotly.graph_objects as go
from plotly.subplots import make_subplots
import tensorflow as tf
from sklearn.preprocessing import StandardScaler
from sklearn.inspection import permutation_importance
import statsmodels.api as sm
from statsmodels.tsa.stattools import acf, pacf
import datetime
import warnings

warnings.filterwarnings('ignore')
```

The data is loaded automatically using opendatasets.

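```python
dataset_url = "https://www.kaggle.com/datasets/robikscube/hourly-energy-consumption/"

# Downloads into ./hourly-energy-consumption/ (may prompt for Kaggle credentials)
od.download(dataset_url)

# Parse the Datetime column, used as the index, into timestamps
df = pd.read_csv("./hourly-energy-consumption/" + "AEP_hourly.csv", index_col=0, parse_dates=True)
df.sort_index(inplace=True)
```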

Keep in mind that data cleaning was an essential first step before the analysis could proceed. If you are interested in the details, and also in state space modeling, please refer to my previous article here. ☚📰 In a nutshell, the following steps were performed:

- Identifying gaps where specific timestamps are missing (only single-step gaps were found)

- Performing imputation (using the mean of the previous and next records)

- Identifying and dropping duplicates

- Setting the timestamp column as the dataframe index

- Setting the dataframe index frequency to hourly, since this is required for further processing
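The cleaning steps above can be sketched roughly as follows. This is a minimal pandas sketch assuming the raw CSV layout (a Datetime column plus a value column such as AEP_MW), not the code from the previous article, and clean_hourly is a hypothetical helper. Duplicates are dropped before reindexing because asfreq requires a unique index. Averaging the forward-filled and back-filled values reproduces the "mean of previous and next record" rule exactly only for single-step gaps, which is the case described above.

```python
import pandas as pd


def clean_hourly(raw: pd.DataFrame, time_col: str = "Datetime"):
    """Hypothetical helper: return (cleaned hourly frame, timestamps that were missing)."""
    df = raw.copy()
    df[time_col] = pd.to_datetime(df[time_col])
    # Drop duplicated timestamps first, because asfreq() needs a unique index
    df = df.drop_duplicates(subset=time_col, keep="first")
    # Use the timestamp column as a sorted index
    df = df.set_index(time_col).sort_index()
    # Reindex onto a regular hourly grid; missing timestamps appear as NaN rows
    df = df.asfreq("h")
    gaps = df.index[df.isna().any(axis=1).to_numpy()]
    # Fill gaps with the mean of the previous and next records
    # (this matches that rule exactly only for single-step gaps)
    df = df.fillna((df.ffill() + df.bfill()) / 2)
    return df, gaps


# Toy usage: a duplicated 01:00 record and a missing 02:00 record
raw = pd.DataFrame({
    "Datetime": ["2018-01-01 00:00", "2018-01-01 01:00", "2018-01-01 01:00",
                 "2018-01-01 03:00", "2018-01-01 04:00"],
    "AEP_MW": [10.0, 20.0, 99.0, 40.0, 50.0],
})
clean, gaps = clean_hourly(raw)
```

Here the duplicate at 01:00 is dropped (the first value is kept) and the missing 02:00 hour is filled with the mean of its neighbours, 20.0 and 40.0.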

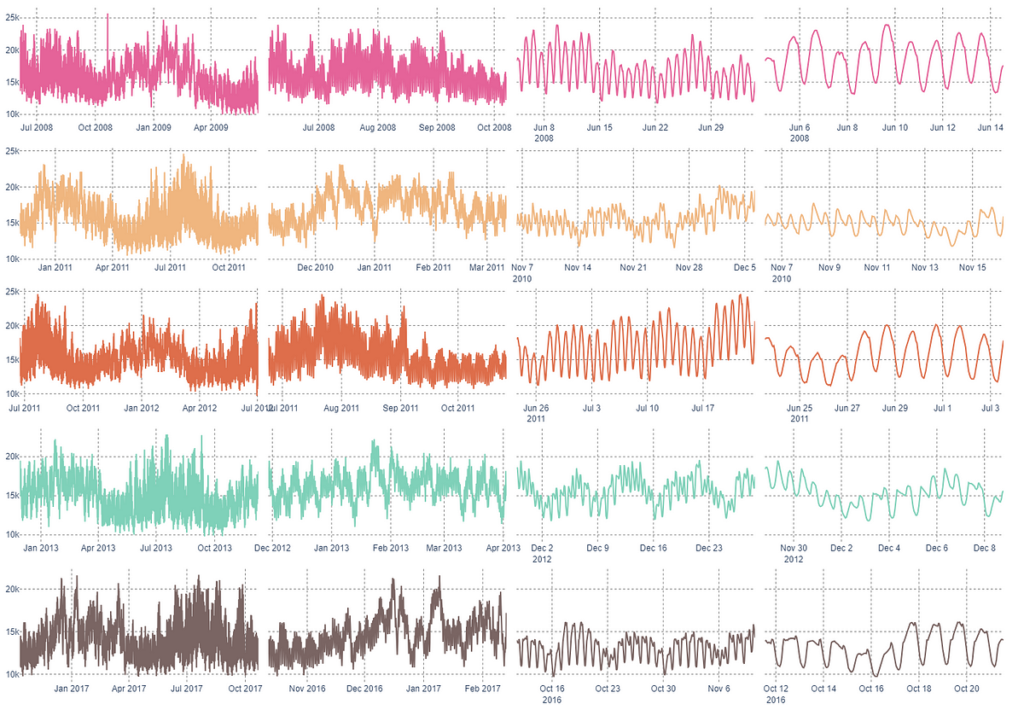

After preparing the data, let's explore it by drawing 5 random timestamp samples and comparing the time series at different scales.

```python
fig = make_subplots(rows=5, cols=4, shared_yaxes=True, horizontal_spacing=0.01, vertical_spacing=0.04)

# drawing a random sample of 5 indices without repetition
sample = sorted(np.random.choice(len(df), 5, replace=False))

# zoom x scales for plotting (number of hourly steps per column)
periods = [9000, 3000, 720, 240]

colors = ["#E56399", "#F0B67F", "#DE6E4B", "#7FD1B9", "#7A6563"]

# s for sample datetime start
for si, s in enumerate(sample):
    # p for period length
    for pi, p in enumerate(periods):
        cdf = df.iloc[s:(s + p + 1), :].copy()
        fig.add_trace(go.Scatter(x=cdf.index,
                                 y=cdf.AEP_MW.values,
                                 marker=dict(color=colors[si])),
                      row=si + 1, col=pi + 1)

fig.update_layout(
    font=dict(family="Arial"),
    margin=dict(b=8, l=8, r=8, t=8),
    showlegend=False,
    height=1000,
    paper_bgcolor="#FFFFFF",
    plot_bgcolor="#FFFFFF")

fig.update_xaxes(griddash="dot", gridcolor="#808080")
fig.update_yaxes(griddash="dot", gridcolor="#808080")
fig.show()
```