Enterprise documents, such as complex reports, product catalogs, design files, financial statements, technical manuals, and market analysis reports, usually contain multimodal data (text as well as visual content such as graphs, charts, maps, photos, infographics, diagrams, and blueprints). Finding the right information in these documents requires a semantic search of text and related images for a given query posed by a customer or a company employee. For instance, a company's product might be described through its title, textual description, and images. Similarly, a project proposal might include a combination of text, charts illustrating budget allocations, maps showing geographical coverage, and photos of past projects.

Accurate and quick search of multimodal information is important for improving business productivity. Business data is often spread across various sources in text and image formats, which makes retrieving all relevant information efficiently difficult. While generative AI methods, particularly those leveraging LLMs, enhance knowledge management in business (e.g., retrieval augmented generation (RAG) and graph RAG, among others), they face limitations in accessing multimodal, scattered data. Methods that unify different data types allow users to query diverse formats with natural language prompts. This capability can benefit employees and management within a company and improve customer experience. It has many use cases, such as clustering similar topics and discovering thematic trends, building recommendation engines, engaging customers with more relevant content, giving faster access to information for better decision-making, delivering user-specific search results, making user interactions feel more intuitive and natural, and reducing time spent finding information, to name a few.

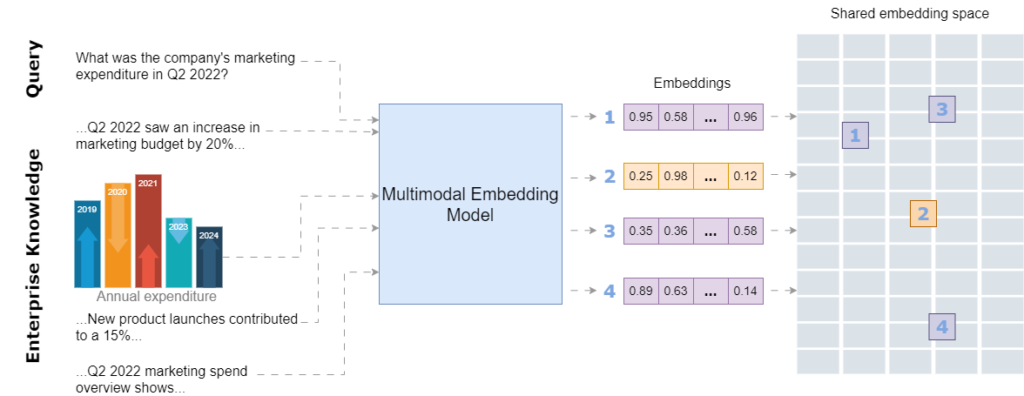

In modern AI models, data is processed as numerical vectors known as embeddings. Specialized AI models, called embedding models, transform data into numerical representations that can be used to capture and compare similarities in meaning or features efficiently. Embeddings are extremely useful for semantic search and knowledge mapping and serve as the foundational backbone of today's sophisticated LLMs.

This article explores the potential of embedding models (especially the multimodal embedding models introduced later) for enhancing semantic search across multiple data types in enterprise applications. The article begins by explaining the concept of embeddings for readers unfamiliar with how embeddings work in AI. It then discusses multimodal embeddings, explaining how data from multiple formats can be combined into unified embeddings that capture cross-modal relationships and can be immensely useful for business-related information search tasks. Finally, the article explores a recently introduced multimodal embedding model for multimodal semantic search in enterprise applications.

Understanding Embedding Space & Semantic Search

Embeddings are stored in a vector space where similar concepts are located close to each other. Think of the embedding space as a library where books on related topics are shelved together. For example, in an embedding space, the embeddings for words like "desk" and "chair" would be near each other, while "airplane" and "baseball" would be farther apart. This spatial arrangement enables models to identify and retrieve related items effectively and improves tasks such as recommendation, search, and clustering.
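To make "close to each other" concrete, here is a minimal sketch that measures closeness with cosine similarity, one common choice for comparing embeddings. The 3-dimensional vectors are invented for illustration (real embedding models produce vectors with hundreds or thousands of dimensions), and `cosine` and `most_similar` are hypothetical helper names, not part of any library used in this article.

```python
import math

def cosine(a, b):
    """Cosine similarity: 1.0 for vectors pointing the same way, 0.0 for orthogonal ones."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hand-made 3-D vectors for illustration only; real embeddings are much longer.
toy_embeddings = {
    "desk":     [0.90, 0.80, 0.10],
    "chair":    [0.85, 0.75, 0.20],
    "airplane": [0.10, 0.20, 0.95],
    "baseball": [0.20, 0.90, 0.10],
}

def most_similar(word, embeddings):
    """Return the other word whose vector is most similar to the given word's vector."""
    others = [w for w in embeddings if w != word]
    return max(others, key=lambda w: cosine(embeddings[word], embeddings[w]))

print(most_similar("desk", toy_embeddings))  # chair
print(round(cosine(toy_embeddings["airplane"], toy_embeddings["baseball"]), 2))  # 0.33
```

The same comparison, applied to vectors produced by a real embedding model, is what lets a search system decide which stored items are "near" a query.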

To demonstrate how embeddings are computed and visualized, let's create some categories of different concepts. The complete code is available on GitHub.

categories = {
    "Fruits": ["Apple", "Banana", "Orange", "Grape", "Mango", "Peach", "Pineapple"],
    "Animals": ["Dog", "Cat", "Elephant", "Tiger", "Lion", "Monkey", "Rabbit"],
    "Countries": ["Canada", "France", "India", "Japan", "Brazil", "Germany", "Australia"],
    "Sports": ["Soccer", "Basketball", "Tennis", "Baseball", "Cricket", "Swimming", "Running"],
    "Music Genres": ["Rock", "Jazz", "Classical", "Hip Hop", "Pop", "Blues"],
    "Professions": ["Doctor", "Engineer", "Teacher", "Artist", "Chef", "Lawyer", "Pilot"],
    "Vehicles": ["Car", "Bicycle", "Motorcycle", "Airplane", "Train", "Boat", "Bus"],
    "Furniture": ["Chair", "Table", "Sofa", "Bed", "Desk", "Bookshelf", "Cabinet"],
    "Emotions": ["Happiness", "Sadness", "Anger", "Fear", "Surprise", "Disgust", "Calm"],
    "Weather": ["Hurricane", "Tornado", "Blizzard", "Heatwave", "Thunderstorm", "Fog"],
    "Cooking": ["Grilling", "Boiling", "Frying", "Baking", "Steaming", "Roasting", "Poaching"]
}

I'll now use an embedding model (Cohere's embed-english-v3.0, which is the focus of this article and will be discussed in detail after this example) to compute the embeddings of these concepts, as shown in the following code snippet. The following libraries need to be installed to run this code.

!pip install cohere umap-learn seaborn matplotlib numpy pandas regex altair scikit-learn ipython faiss-cpu

This code computes the text embeddings of the above-mentioned concepts and stores them in a NumPy array.

import os
import cohere
import umap
import seaborn as sns
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

# Initialize the Cohere client
co = cohere.Client(api_key=os.getenv("COHERE_API_KEY_2"))

# Flatten categories and concepts into two parallel lists
labels = []
concepts = []
for category, items in categories.items():
    labels.extend([category] * len(items))
    concepts.extend(items)

# Generate text embeddings for all concepts
embeddings = co.embed(
    texts=concepts,
    model="embed-english-v3.0",
    input_type="search_document"  # input type for texts that will be stored and searched
).embeddings

# Convert to a NumPy array
embeddings = np.array(embeddings)

Embeddings can have hundreds or thousands of dimensions, which cannot be visualized directly. Hence, we reduce the dimensionality of the embeddings to make the high-dimensional data visually interpretable. After computing the embeddings, the following code maps them to a 2-dimensional space using the UMAP (Uniform Manifold Approximation and Projection) dimensionality reduction method so that we can plot and analyze how similar concepts cluster together.

# Dimensionality reduction using UMAP
reducer = umap.UMAP(n_neighbors=20, random_state=42)
reduced_embeddings = reducer.fit_transform(embeddings)

# Create a DataFrame for visualization
df = pd.DataFrame({
    "x": reduced_embeddings[:, 0],
    "y": reduced_embeddings[:, 1],
    "Category": labels,
    "Concept": concepts
})

# Plot using Seaborn
plt.figure(figsize=(12, 8))
sns.scatterplot(data=df, x="x", y="y", hue="Category", style="Category", palette="Set2", s=100)

# Add a text label next to each point
for i in range(df.shape[0]):
    plt.text(df["x"][i] + 0.02, df["y"][i] + 0.02, df["Concept"][i], fontsize=9)

plt.legend(loc="lower right")
plt.title("Visualization of Embeddings by Category")
plt.xlabel("UMAP Dimension 1")
plt.ylabel("UMAP Dimension 2")
plt.savefig("embeddings.png", dpi=600)
plt.show()

Here is the visualization of the embeddings of these concepts in a 2D space.

Semantically similar items are grouped together in the embedding space, while concepts with distant meanings are located farther apart (e.g., countries are clustered farther from other categories).
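UMAP is a non-linear method, and umap-learn is the practical way to use it. To show what "mapping embeddings to 2D" means without any dependencies, the sketch below swaps in a different, simpler technique: principal component analysis (PCA), computed with power iteration. It finds the two directions of largest variance and projects every vector onto them. The function name `pca_2d` is hypothetical, and this is an illustration rather than a replacement for UMAP.

```python
import random

def pca_2d(vectors, iterations=200, seed=0):
    """Project row vectors onto their top-2 principal components.

    Uses power iteration on the covariance matrix with deflation; fine for
    small toy inputs, far slower than a library implementation.
    """
    n, d = len(vectors), len(vectors[0])
    mean = [sum(v[j] for v in vectors) / n for j in range(d)]
    centered = [[v[j] - mean[j] for j in range(d)] for v in vectors]
    # d x d covariance matrix of the centered data
    cov = [[sum(row[i] * row[j] for row in centered) / n for j in range(d)]
           for i in range(d)]
    rng = random.Random(seed)
    components = []
    for _ in range(2):
        # Start from a random unit vector
        vec = [rng.random() + 0.1 for _ in range(d)]
        start_norm = sum(x * x for x in vec) ** 0.5
        vec = [x / start_norm for x in vec]
        for _ in range(iterations):
            nxt = [sum(cov[i][j] * vec[j] for j in range(d)) for i in range(d)]
            norm = sum(x * x for x in nxt) ** 0.5
            if norm == 0.0:
                break  # no variance left; keep the current unit vector
            vec = [x / norm for x in nxt]
        # Rayleigh quotient = variance captured along this direction
        eigval = sum(vec[i] * sum(cov[i][j] * vec[j] for j in range(d)) for i in range(d))
        components.append(vec)
        # Deflate so the next pass converges to the next-largest direction
        cov = [[cov[i][j] - eigval * vec[i] * vec[j] for j in range(d)]
               for i in range(d)]
    # 2-D coordinates: projection of each centered vector onto both components
    return [[sum(row[j] * comp[j] for j in range(d)) for comp in components]
            for row in centered]
```

Unlike UMAP, PCA is linear, so it can blur clusters that UMAP separates by preserving local neighborhoods. For real embeddings, scikit-learn's `PCA` would be the practical choice if a linear projection is wanted.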

To illustrate how a search query maps to its matching concept within this space, we first store the embeddings in a vector database (a FAISS vector store). Next, we compute the query's embedding in the same way and identify a "neighborhood" in the embedding space where the stored embeddings closely match the query's semantics. This proximity is measured using Euclidean distance or cosine similarity between the query embedding and the embeddings stored in the vector database.

import re
import warnings

import faiss
import numpy as np
import pandas as pd
from IPython.display import display, Markdown
from sklearn.preprocessing import normalize

warnings.filterwarnings('ignore')
pd.set_option('display.max_colwidth', None)

# Normalize embeddings to unit length (optional, but recommended for cosine similarity).
# FAISS works with float32 arrays.
embeddings = normalize(np.array(embeddings)).astype("float32")

# Create a FAISS index
dimension = embeddings.shape[1]
index = faiss.IndexFlatL2(dimension)  # L2 distance; IndexFlatIP (inner product) equals cosine similarity on normalized vectors
index.add(embeddings)  # Add embeddings to the FAISS index

# Embed the query (Cohere's v3 models expect input_type="search_query" for queries)
query = "Which is the largest European country?"
query_embedding = co.embed(texts=[query], model="embed-english-v3.0", input_type="search_query").embeddings[0]
query_embedding = normalize(np.array([query_embedding])).astype("float32")  # Normalize the query embedding

# Search for nearest neighbors
k = 5  # Number of nearest neighbors
distances, indices = index.search(query_embedding, k)

# Format and display results
results = pd.DataFrame({
    'texts': [concepts[i] for i in indices[0]],
    'distance': distances[0]
})
display(Markdown(f"Query: {query}"))

# Convert the DataFrame to a markdown table
def print_markdown_results(df):
    markdown_text = "Nearest neighbors:\n\n"
    markdown_text += "| Texts | Distance |\n"
    markdown_text += "|-------|----------|\n"
    for _, row in df.iterrows():
        markdown_text += f"| {row['texts']} | {row['distance']:.4f} |\n"
    display(Markdown(markdown_text))

# Display the results in markdown
print_markdown_results(results)

Here are the top-5 closest matches to the query, ranked by their distance from the query's embedding among the stored concepts (smallest first).

As shown, France is the correct match for this query among the given concepts. In the visualized embedding space, the query's position falls within the 'country' group.

The whole process of semantic search is depicted in the following figure.

Multimodal Embeddings

Text embeddings are successfully used for semantic search and retrieval augmented generation (RAG). Several embedding models are used for this purpose, such as those from OpenAI, Google, Cohere, and others. Similarly, several open-source models are available on the Hugging Face platform, such as all-MiniLM-L6-v2. While these models are very useful for text-to-text semantic search, they cannot handle image data, which is an important source of information in enterprise documents. Moreover, businesses often need to quickly search for relevant images, either within documents or in vast image repositories that lack proper metadata.

This problem is partially addressed by some multimodal embedding models, such as OpenAI's CLIP, which connects text and images and can be used to recognize a wide variety of visual concepts in images and associate them with their names. However, it has very limited text input capacity (its text encoder accepts at most 77 tokens) and shows low performance for text-only or even text-to-image retrieval tasks.

A combination of separate text and image embedding models can also be used, but it places text and image data in separate spaces; this leads to weak search results that are biased toward text-only data. In multimodal RAG, a combination of a text embedding model and a multimodal LLM is used to answer from both text and images. For details on developing a multimodal RAG, please read my following article.

A multimodal embedding model should be able to include both image and text data within a single database, which reduces complexity compared to maintaining two separate databases. This way, the model prioritizes the meaning behind the data instead of being biased toward a specific modality.

By storing all modalities in a single embedding space, the model can connect text with relevant images and retrieve and compare information across different formats. This unified approach improves search relevance and allows for a more intuitive exploration of interconnected information within the shared embedding space.
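A minimal sketch of the single-space idea, assuming a multimodal model has already embedded a product description and a product photo into the same space: text and image entries sit in one index, and a query is ranked against all of them without regard to modality. The vectors, item IDs, and the `search` helper are invented for illustration.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# One index holding both modalities. The vectors are invented for illustration;
# a multimodal model is what would make text and image vectors comparable.
shared_index = [
    {"id": "desc_oak_table",   "modality": "text",  "vector": [0.9, 0.1, 0.0]},
    {"id": "img_oak_table",    "modality": "image", "vector": [0.8, 0.2, 0.1]},
    {"id": "desc_steel_chair", "modality": "text",  "vector": [0.1, 0.9, 0.2]},
    {"id": "img_steel_chair",  "modality": "image", "vector": [0.2, 0.8, 0.3]},
]

def search(query_vector, index, k=2):
    """Rank all items by similarity, ignoring which modality they came from."""
    ranked = sorted(index, key=lambda item: cosine(query_vector, item["vector"]), reverse=True)
    return [(item["id"], item["modality"]) for item in ranked[:k]]

# Hypothetical embedding of a text query such as "oak dining table"
print(search([0.85, 0.15, 0.05], shared_index))
# [('desc_oak_table', 'text'), ('img_oak_table', 'image')]
```

The query returns the matching description and the matching photo together, which is the behavior a single shared space is meant to provide.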

Exploring a Multimodal Embedding Model for a Business Use-Case

Cohere recently introduced a multimodal embedding model, Embed 3, which can generate embeddings from both text and images and store them in a unified embedding space. According to Cohere's blog, the model shows impressive performance on a variety of multimodal tasks, such as zero-shot, text-to-image, graphs and charts, eCommerce catalogs, and design files, among others.

In this article, I explore Cohere's multimodal embedding model for text-to-image, text-to-text, and image-to-image retrieval in a business scenario in which customers search for products in an online product catalog using either text queries or images. Using these three kinds of retrieval in an online product catalog brings several advantages to businesses as well as customers. It lets customers search for products flexibly, either by typing a query or by uploading an image. For instance, a customer who sees an item they like can upload a photo, and the model will retrieve visually similar products from the catalog along with all the details about each product. Similarly, customers can search for specific products by describing their characteristics rather than using the exact product name.

The following steps are involved in this use case:

- Demonstrating how multimodal data (text and images) can be parsed from a product catalog using LlamaParse.

- Creating a multimodal index using Cohere's multimodal model embed-english-v3.0.

- Creating a multimodal retriever and testing it for a given query.

- Creating a multimodal query engine with a prompt template to query the multimodal vector database for text-to-text and text-to-image tasks (combined).

- Retrieving relevant images and text from the vector database and sending them to an LLM to generate the final response.

- Testing image-to-image retrieval.

I generated an example furniture catalog for a fictitious company using OpenAI's DALL-E image generator. The catalog comprises 4 categories with a total of 36 product images and their descriptions. Here is a snapshot of the first page of the product catalog.

The complete code and the sample data are available on GitHub. Let's discuss it step by step.

Cohere's embedding model is used in the following way.

import cohere

model_name = "embed-english-v3.0"
api_key = "COHERE_API_KEY"  # replace with your Cohere API key
input_type_embed = "search_document"  # for image embeddings, input_type_embed = "image"

# Create a Cohere client
co = cohere.Client(api_key)

text = ['apple', 'chair', 'mango']
embeddings = co.embed(texts=list(text),
                      model=model_name,
                      input_type=input_type_embed).embeddings

The model can be tested with Cohere's trial API keys by creating a free account on their website.
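The comment in the snippet above notes that images use a different input type. Per Cohere's API documentation at the time of writing, image inputs are passed to `co.embed` as base64 data URIs through an `images` parameter together with `input_type="image"`; treat that call as an assumption and check the current API reference. The hypothetical helper `image_to_data_uri` below only builds the data URI, using Python's standard library.

```python
import base64
import mimetypes
from pathlib import Path

def image_to_data_uri(path):
    """Encode an image file as a data URI of the form data:<mime>;base64,<payload>."""
    mime, _ = mimetypes.guess_type(path)  # e.g. "image/png" or "image/jpeg"
    if mime is None or not mime.startswith("image/"):
        raise ValueError(f"Not a recognized image file: {path}")
    payload = base64.b64encode(Path(path).read_bytes()).decode("ascii")
    return f"data:{mime};base64,{payload}"

# Assumed usage (verify against Cohere's current API reference; file name is hypothetical):
# uri = image_to_data_uri("parsed_data/chair.png")
# response = co.embed(model="embed-english-v3.0", input_type="image",
#                     embedding_types=["float"], images=[uri])
```

Checking the extension before reading the file means a non-image path fails fast with a clear error instead of producing a data URI the API would reject.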

To demonstrate how multimodal data can be extracted, I used LlamaParse to extract product images and text from the catalog. This process is detailed in my previous article. To use LlamaParse, create an account on the Llama Cloud website to get an API key. The free API key allows a credit limit of 1,000 pages per day.

The following libraries need to be installed to run the code in this article.

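!pip install nest-asyncio python-dotenv llama-parse qdrant-client llama-index llama-index-embeddings-cohere llama-index-multi-modal-llms-openai llama-index-vector-stores-qdrant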

The following code loads the Llama Cloud, Cohere, and OpenAI API keys from an environment file (.env). OpenAI's multimodal LLM, GPT-4o, is used to generate the final response.

import os
import time
from typing import List

import nest_asyncio
from dotenv import load_dotenv
from llama_parse import LlamaParse
from llama_index.core.schema import ImageDocument, TextNode
from llama_index.embeddings.cohere import CohereEmbedding
from llama_index.multi_modal_llms.openai import OpenAIMultiModal
from llama_index.core import Settings
from llama_index.core.indices import MultiModalVectorStoreIndex
from llama_index.vector_stores.qdrant import QdrantVectorStore
from llama_index.core import StorageContext
import qdrant_client
from llama_index.core import SimpleDirectoryReader

# Load environment variables
load_dotenv()
nest_asyncio.apply()

# Set API keys
COHERE_API_KEY = os.getenv("COHERE_API_KEY")
LLAMA_CLOUD_API_KEY = os.getenv("LLAMA_CLOUD_API_KEY")
OPENAI_API_KEY = os.getenv("OPENAI_API_KEY")

The following code extracts the text and image nodes from the catalog using LlamaParse. The extracted text and images are saved to a specified path.

# Extract text nodes (one per parsed page)
def get_text_nodes(json_list: List[dict]) -> List[TextNode]:
    return [TextNode(text=page["text"], metadata={"page": page["page"]}) for page in json_list]

# Extract image nodes (downloads the images to download_path)
def get_image_nodes(json_objs: List[dict], download_path: str) -> List[ImageDocument]:
    image_dicts = parser.get_images(json_objs, download_path=download_path)
    return [ImageDocument(image_path=image_dict["path"]) for image_dict in image_dicts]

# Save the text in the text nodes to a file
def save_texts_to_file(text_nodes, file_path):
    texts = [node.text for node in text_nodes]
    all_text = "\n\n".join(texts)
    with open(file_path, "w", encoding="utf-8") as file:
        file.write(all_text)

# Define file paths
FILE_NAME = "furniture.docx"
IMAGES_DOWNLOAD_PATH = "parsed_data"

# Initialize the LlamaParse parser
parser = LlamaParse(
    api_key=LLAMA_CLOUD_API_KEY,
    result_type="markdown",
)

# Parse the document and extract JSON data
json_objs = parser.get_json_result(FILE_NAME)
json_list = json_objs[0]["pages"]

# Get text nodes
text_nodes = get_text_nodes(json_list)

# Extract the images to the specified path
image_documents = get_image_nodes(json_objs, IMAGES_DOWNLOAD_PATH)

# Save the extracted text to a .txt file
file_path = "parsed_data/extracted_texts.txt"
save_texts_to_file(text_nodes, file_path)

Here is a snapshot showing the extracted text and metadata of one of the nodes.

I saved the text data to a .txt file. Here is what the text in the .txt file looks like.

Here's the structure of the parsed data within a folder.

Note that the textual descriptions have no reference to their respective images. The goal is to demonstrate that the embedding model can retrieve the text as well as the relevant images in response to a query, thanks to the shared embedding space in which text and its related images are stored close to each other.

Cohere's trial API has a limited rate (5 API calls per minute). To embed all the images in the catalog, I created the following custom class, which sends the extracted images to the embedding model with a delay after each call (30 seconds; smaller delays can also be tested).

delay = 30  # seconds to wait after each image embedding

# Define a custom embedding class with a fixed delay after each embedding
class DelayCohereEmbedding(CohereEmbedding):
    def get_image_embedding_batch(self, img_file_paths, show_progress=False):
        embeddings = []
        for img_file_path in img_file_paths:
            embedding = self.get_image_embedding(img_file_path)
            embeddings.append(embedding)
            print(f"sleeping for {delay} seconds")
            time.sleep(delay)  # fixed delay after each embedding to respect the rate limit
        return embeddings

# Set the custom embedding model in the settings
Settings.embed_model = DelayCohereEmbedding(
    api_key=COHERE_API_KEY,
    model_name="embed-english-v3.0"
)
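`DelayCohereEmbedding` sleeps a fixed 30 seconds after every call. An alternative, sketched below under the assumption of the 5-calls-per-minute trial limit mentioned above, is to space calls at least 60 / 5 = 12 seconds apart and sleep only for whatever part of that interval has not already passed while the previous request was running. `embed_with_rate_limit` is a hypothetical helper, not part of LlamaIndex or the Cohere SDK; `sleep` and `clock` are injectable so the timing logic can be tested without waiting.

```python
import time

def embed_with_rate_limit(items, embed_fn, calls_per_minute,
                          sleep=time.sleep, clock=time.monotonic):
    """Call embed_fn on each item, starting calls at least 60/calls_per_minute seconds apart.

    Unlike a fixed sleep after every call, this waits only for the part of the
    interval that has not already elapsed (e.g., while the request was running).
    """
    min_interval = 60.0 / calls_per_minute  # 5 calls/min -> 12 s between call starts
    results = []
    last_call = None
    for item in items:
        if last_call is not None:
            wait = min_interval - (clock() - last_call)
            if wait > 0:
                sleep(wait)
        last_call = clock()
        results.append(embed_fn(item))
    return results
```

Inside the custom class, `get_image_embedding_batch` could, for example, return `embed_with_rate_limit(img_file_paths, self.get_image_embedding, calls_per_minute=5)`; this variant has not been tested against the live API.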

The following code loads the parsed documents from the directory and creates a multimodal Qdrant vector database and an index (adapted from the LlamaIndex implementation).

# Load documents from the directory
documents = SimpleDirectoryReader("parsed_data",
                                  required_exts=[".jpg", ".png", ".txt"],
                                  exclude_hidden=False).load_data()

# Set up the Qdrant vector store (one collection for text, one for images)
client = qdrant_client.QdrantClient(path="furniture_db")
text_store = QdrantVectorStore(client=client, collection_name="text_collection")
image_store = QdrantVectorStore(client=client, collection_name="image_collection")
storage_context = StorageContext.from_defaults(vector_store=text_store, image_store=image_store)

# Create the multimodal vector index
index = MultiModalVectorStoreIndex.from_documents(
    documents,
    storage_context=storage_context,
    image_embed_model=Settings.embed_model,
)

Finally, a multimodal retriever is created to retrieve the matching text and image nodes from the multimodal vector database. The numbers of retrieved text nodes and images are set by similarity_top_k and image_similarity_top_k, respectively.

retriever_engine = index.as_retriever(similarity_top_k=4, image_similarity_top_k=4)
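The index stores text and images in the two Qdrant collections created earlier (`text_collection` and `image_collection`), and `similarity_top_k` and `image_similarity_top_k` set how many hits come back from each. The sketch below illustrates that idea in plain Python; it is a simplified model, not LlamaIndex's retriever code. Comparing one query vector against both collections is meaningful here because, in this setup, `Settings.embed_model` (the same Cohere model) embeds both text and images. All names and vectors are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def top_k(query_vec, collection, k):
    """Return the names of the k items in one collection closest to the query."""
    ranked = sorted(collection, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [name for name, _ in ranked[:k]]

def retrieve(query_vec, text_collection, image_collection,
             similarity_top_k=4, image_similarity_top_k=4):
    """Search the text and image collections separately, each with its own k."""
    return (top_k(query_vec, text_collection, similarity_top_k),
            top_k(query_vec, image_collection, image_similarity_top_k))

# Toy collections of (name, vector) pairs; names and vectors are made up
text_collection = [("page_1_text", [1.0, 0.0]), ("page_2_text", [0.0, 1.0]),
                   ("page_3_text", [0.9, 0.1])]
image_collection = [("chair_a.png", [0.8, 0.2]), ("sofa_b.png", [0.1, 0.9])]

print(retrieve([1.0, 0.0], text_collection, image_collection, 2, 1))
# (['page_1_text', 'page_3_text'], ['chair_a.png'])
```

Keeping separate limits means a query always gets some image candidates even when text nodes score higher overall, which suits the LLM refinement step that follows.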

Let's test the retriever with the query "Find me a chair with metal stands". A helper function, display_images, plots the retrieved images.

### Test the retriever
import os

import matplotlib.pyplot as plt
from PIL import Image
from llama_index.core.response.notebook_utils import display_source_node
from llama_index.core.schema import ImageNode

def display_images(file_list, grid_rows=2, grid_cols=3, limit=9):
    """
    Display images from a list of file paths in a grid.

    Parameters:
    - file_list: List of image file paths.
    - grid_rows: Number of rows in the grid.
    - grid_cols: Number of columns in the grid.
    - limit: Maximum number of images to display.
    """
    limit = min(limit, grid_rows * grid_cols)  # never request more subplots than the grid holds
    plt.figure(figsize=(16, 9))
    count = 0
    for file_path in file_list:
        if os.path.isfile(file_path) and count < limit:
            img = Image.open(file_path)
            plt.subplot(grid_rows, grid_cols, count + 1)
            plt.imshow(img)
            plt.axis('off')
            count += 1
    plt.tight_layout()
    plt.show()

query = "Find me a chair with metal stands"
retrieval_results = retriever_engine.retrieve(query)

# Show text nodes inline and collect image paths for plotting
retrieved_image = []
for res_node in retrieval_results:
    if isinstance(res_node.node, ImageNode):
        retrieved_image.append(res_node.node.metadata["file_path"])
    else:
        display_source_node(res_node, source_length=200)

display_images(retrieved_image)

The text node and the images retrieved by the retriever are shown below.

The text nodes and images retrieved here are close to the query embedding, but not all of them may be relevant. The next step is to send these text nodes and images to a multimodal LLM to refine the selection and generate the final response. The prompt template qa_tmpl_str guides the LLM's behavior in this selection and response generation process.

import logging

from llama_index.core import PromptTemplate
from llama_index.core.schema import NodeWithScore, ImageNode, MetadataMode

# Define the template with explicit instructions
qa_tmpl_str = (
    "Context information is below.\n"
    "---------------------\n"
    "{context_str}\n"
    "---------------------\n"
    "Using the provided context and images (not prior knowledge), "
    "answer the query. Include only the image paths of images that directly relate to the answer.\n"
    "Your response should be formatted as follows:\n"
    "Result: [Provide answer based on context]\n"
    "Related Picture Paths: array of image paths of relevant images only, separated by comma\n"
    "Query: {query_str}\n"
    "Answer: "
)
qa_tmpl = PromptTemplate(qa_tmpl_str)

# Initialize the multimodal LLM
multimodal_llm = OpenAIMultiModal(model="gpt-4o", temperature=0.0, max_tokens=1024)

# Set up the query engine with the LLM and prompt template
# (as_query_engine() builds its own retriever from the index)
query_engine = index.as_query_engine(
    llm=multimodal_llm,
    text_qa_template=qa_tmpl,
)

The following code builds the context string ctx_str for the prompt template qa_tmpl_str from the retrieved nodes and prepares image nodes with valid paths and MIME types. It then inserts the context and the query string into the prompt template and sends the formatted prompt, along with the image nodes, to the LLM to generate the final response.

# Extract the underlying nodes
nodes = [node.node for node in retrieval_results]

# Create ImageNode instances with valid paths and metadata
image_nodes = []
for n in nodes:
    if "file_path" in n.metadata and n.metadata["file_path"].lower().endswith(('.png', '.jpg')):
        # Add an ImageNode with only the path and MIME type, as expected by the LLM
        image_node = ImageNode(
            image_path=n.metadata["file_path"],
            image_mimetype="image/jpeg" if n.metadata["file_path"].lower().endswith('.jpg') else "image/png"
        )
        image_nodes.append(NodeWithScore(node=image_node))
        logging.info(f"ImageNode created for path: {n.metadata['file_path']}")

logging.info(f"Total ImageNodes prepared for LLM: {len(image_nodes)}")

# Create the context string for the prompt
ctx_str = "\n\n".join(
    [n.get_content(metadata_mode=MetadataMode.LLM).strip() for n in nodes]
)

# Format the prompt
fmt_prompt = qa_tmpl.format(context_str=ctx_str, query_str=query)

# Use the multimodal LLM to generate a response
llm_response = multimodal_llm.complete(
    prompt=fmt_prompt,
    image_documents=[image_node.node for image_node in image_nodes],  # pass only ImageNodes with paths
    max_tokens=300
)

# Extract the text content from the LLM response
response_text = llm_response.text

# Extract the image paths after "Related Picture Paths:"
image_paths = re.findall(r'Related Picture Paths:\s*(.*)', response_text)
if image_paths:
    # Split the paths by comma and strip any extra whitespace
    image_paths = [path.strip() for path in image_paths[0].split(",")]

# Remove the "Related Picture Paths" part from the displayed response
filtered_response = re.sub(r'Related Picture Paths:.*', '', response_text).strip()

display(Markdown(f"**Query**: {query}"))

# Show the filtered response without image paths
display(Markdown(f"{filtered_response}"))

if image_paths and image_paths != ['']:
    # Plot the images whose paths the LLM selected
    display_images(image_paths)

The final (filtered) response generated by the LLM for the above query is shown below.

This shows that the embedding model successfully connects the text embeddings with the image embeddings and retrieves relevant results, which are then further refined by the LLM.
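The filtering step in the code above depends on the LLM following the reply format requested in `qa_tmpl_str`. A small, hypothetical helper such as `parse_llm_reply` could make that step easier to test and somewhat more forgiving, for instance of a Python-style list in the reply or an empty path list. It is a sketch that assumes the same "Related Picture Paths:" label as the prompt template.

```python
import re

def parse_llm_reply(reply):
    """Split a reply in the qa_tmpl_str format into (answer_text, image_paths).

    Returns an empty path list when the label is missing or has no paths,
    and strips brackets/quotes in case the model replies with a list literal.
    """
    match = re.search(r"Related Picture Paths:\s*(.*)", reply)
    paths = []
    if match:
        pieces = [p.strip().strip("[]'\"") for p in match.group(1).split(",")]
        paths = [p for p in pieces if p]
    # Drop the label line so only the answer is shown to the user
    answer = re.sub(r"Related Picture Paths:.*", "", reply).strip()
    return answer, paths

reply = ("Result: One chair in the catalog has metal legs.\n"
         "Related Picture Paths: ['parsed_data/a.png', 'parsed_data/b.jpg']")
print(parse_llm_reply(reply))
# ('Result: One chair in the catalog has metal legs.', ['parsed_data/a.png', 'parsed_data/b.jpg'])
```

Returning an empty list (rather than `['']`) lets the caller simply test `if paths:` before plotting.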

The results of a few more test queries are shown below.

Now let's test the multimodal embedding model on an image-to-image task. We use a different product image (not in the catalog) and use the retriever to find the matching product images. The following code retrieves the matching product images and displays them with a modified helper function, display_images.

import os

import matplotlib.pyplot as plt
from PIL import Image

def display_images(input_image_path, matched_image_paths):
    """
    Plot the input image alongside the matching images with appropriate labels.
    """
    # Total images to show (input + matches)
    total_images = 1 + len(matched_image_paths)

    # Define the figure size
    plt.figure(figsize=(7, 7))

    # Display the input image
    plt.subplot(1, total_images, 1)
    if os.path.isfile(input_image_path):
        input_image = Image.open(input_image_path)
        plt.imshow(input_image)
        plt.title("Given Image")
        plt.axis("off")

    # Display the matching images
    for idx, img_path in enumerate(matched_image_paths):
        if os.path.isfile(img_path):
            matched_image = Image.open(img_path)
            plt.subplot(1, total_images, idx + 2)
            plt.imshow(matched_image)
            plt.title("Match Found")
            plt.axis("off")

    plt.tight_layout()
    plt.show()

# Sample usage: path to a query image that is not part of the catalog
input_image_path = 'trial2.png'
retrieval_results = retriever_engine.image_to_image_retrieve(input_image_path)
retrieved_images = []
for res in retrieval_results:
    retrieved_images.append(res.node.metadata["file_path"])

# Display the input image and the top two matches side by side
display_images(input_image_path, retrieved_images[:2])

Some results showing the input and output (matching) images are shown below.

These results show that this multimodal embedding model performs impressively across text-to-text, text-to-image, and image-to-image tasks. The model can be explored further for multimodal RAG over large documents to improve retrieval across diverse data types.

In addition, multimodal embedding models hold great potential for various business applications, including personalized recommendations, content moderation, cross-modal search engines, and customer service automation. These models can enable companies to build richer user experiences and more efficient knowledge retrieval systems.