Undoubtedly, DAU, WAU, and MAU (daily, weekly, and monthly active users) are critical business metrics. An article “How Duolingo reignited user growth” by Jorge Mazal, former CPO of Duolingo, is #1 in the Growth section of Lenny’s Newsletter blog. In this article, Jorge paid special attention to the methodology Duolingo used to model the DAU metric (see another article “Meaningful metrics: how data sharpened the focus of product teams” by Erin Gustafson). This methodology has multiple strengths, but I’d like to focus on how one can use this approach for DAU forecasting.

The new year is coming soon, so many companies are planning their budgets for the next year these days. Cost estimations often require DAU forecasts. In this article, I’ll show how you can get this prediction using Duolingo’s growth model. I’ll explain why this approach is better than standard time-series forecasting methods and how you can adjust the prediction according to your teams’ plans (e.g., marketing, activation, and product teams).

The article text goes along with the code, and a simulated dataset is attached, so the research is fully reproducible. The Jupyter notebook version is available here. At the end, I’ll share a DAU “calculator” designed in Google Spreadsheet format.

I’ll be narrating on behalf of the collective “we”, as if we’re working through this together.

A quick recap of how Duolingo’s growth model works. On day d (d = 1, 2, …) of a user’s lifetime, the user can be in one of the following 7 (mutually exclusive) states: new, current, reactivated, resurrected, at_risk_wau, at_risk_mau, dormant. The states are defined according to indicators of whether a user was active today, in the last 7 days, or in the last 30 days. The definitions are summarized in the table below:

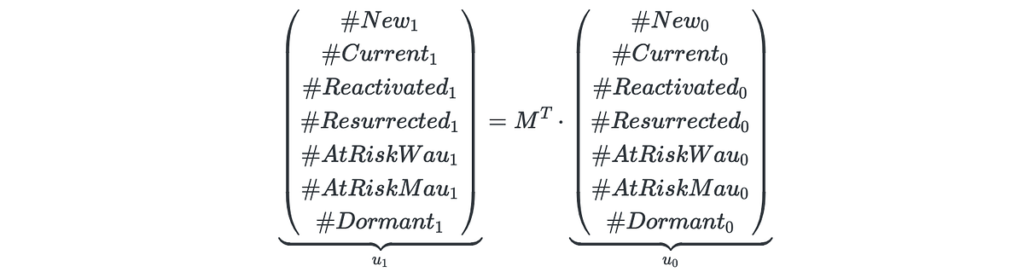
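Since the definition table is an image, here is a sketch of the same rules as a plain Python function. It is a hypothetical helper (the name `classify_state` and its arguments are not part of the original code) that mirrors the CASE expression in the SQL query of Section 3.3. The two counts cover the 6 and 29 days *before* the current day, so together with today they span the last 7 and 30 days.

```python
def classify_state(is_new, is_active, active_days_back_6d, active_days_back_29d):
    """Hypothetical helper mirroring the state definitions.

    is_new: the day is the user's registration date.
    is_active: the user was active on this day.
    active_days_back_6d: number of active days among the 6 days before today.
    active_days_back_29d: number of active days among the 29 days before today.
    """
    if is_new:
        return 'new'
    if is_active:
        if active_days_back_6d > 0:      # also active earlier this week
            return 'current'
        if active_days_back_29d > 0:     # inactive this week, active earlier this month
            return 'reactivated'
        return 'resurrected'             # inactive for the whole previous month
    if active_days_back_6d > 0:          # inactive today, active within the week
        return 'at_risk_wau'
    if active_days_back_29d > 0:         # inactive this week, active within the month
        return 'at_risk_mau'
    return 'dormant'
```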

With these states defined (as a set S), we can treat user behavior as a Markov chain. Here’s an example of a user’s trajectory: new → current → current → at_risk_wau → … → at_risk_mau → … → dormant. Let M be the transition matrix of this Markov process: m_{i, j} = P(s_j | s_i) is the probability that a user moves to state s_j right after being in state s_i, where s_i, s_j ∈ S. This matrix is inferred from historical data.

If we assume that user behavior is stationary (independent of time), the matrix M fully describes the states of all users in the future. Suppose that the vector u_0 of length 7 contains the counts of users in each state on a given day, denoted as day 0. According to the Markov model, on the next day (day 1) we expect the following numbers of users in each state:

u_1 = M^T * u_0

Applying this formula recursively, we derive the number of users in each state on any future day t > 0.

Besides the initial distribution u_0, we need to provide the number of new users that will appear in the product each day in the future. We’ll treat this as a standard time-series forecasting problem.
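To make the recursion concrete, here is a minimal NumPy sketch. It uses a toy 3-state chain (new, current, dormant) with made-up probabilities instead of the 7 states and the matrix estimated later. Each step applies u_{t+1} = M^T * u_t and then overwrites the new count with an externally forecast number, which is how new users enter the chain. The name `forecast_states` is hypothetical. Unlike the `predict_dau` function in Section 3.6, which truncates counts to integers at each step, the sketch keeps floats.

```python
import numpy as np

# Toy chain with hypothetical probabilities; state order: new, current, dormant.
# Row i holds the probabilities of moving from state i to each state (rows sum to 1).
M_toy = np.array([
    [0.0, 0.60, 0.40],  # from new
    [0.0, 0.80, 0.20],  # from current
    [0.0, 0.05, 0.95],  # from dormant
])


def forecast_states(M, u0, new_users):
    """Iterate u_{t+1} = M^T u_t and inject the forecast number of new users each day."""
    u = np.asarray(u0, dtype=float)
    history = []
    for n_new in new_users:
        u = M.T @ u      # expected state counts after one day of transitions
        u[0] = n_new     # the 'new' count comes from the new-user forecast, not from transitions
        history.append(u.copy())
    return np.array(history)


u = forecast_states(M_toy, u0=[10, 100, 1000], new_users=[12, 15])
toy_dau = u[:, 0] + u[:, 1]  # in this toy, DAU = new + current
```

Because every row of the toy matrix sums to 1, transitions only redistribute existing users between states. The total grows only through the injected new users.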

Now, having calculated u_t, we can determine the DAU value on day t:

DAU_t = #New_t + #Current_t + #Reactivated_t + #Resurrected_t

Moreover, we can easily calculate the WAU and MAU metrics:

WAU_t = DAU_t + #AtRiskWau_t,

MAU_t = DAU_t + #AtRiskWau_t + #AtRiskMau_t.
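The three formulas translate directly into code. The sketch below uses a hypothetical function name, `active_user_metrics`, and plugs in the day-0 state counts reported in Section 3.5 for 2023-10-31. Dormant users count toward none of the metrics.

```python
def active_user_metrics(counts):
    """DAU, WAU, and MAU from per-state user counts (keys are the 7 state names)."""
    dau = counts['new'] + counts['current'] + counts['reactivated'] + counts['resurrected']
    wau = dau + counts['at_risk_wau']   # active today or within the last 7 days
    mau = wau + counts['at_risk_mau']   # active within the last 30 days
    return {'dau': dau, 'wau': wau, 'mau': mau}


# day-0 counts as reported in Section 3.5
example_counts = {'new': 20, 'current': 475, 'reactivated': 15, 'resurrected': 19,
                  'at_risk_wau': 404, 'at_risk_mau': 1024, 'dormant': 49523}
print(active_user_metrics(example_counts))  # {'dau': 529, 'wau': 933, 'mau': 1957}
```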

Finally, here’s the algorithm outline:

- For each prediction day t = 1, …, T, calculate the expected number of new users #New_1, …, #New_T.

- For each lifetime day of each user, assign one of the 7 states.

- Calculate the transition matrix M from the historical data.

- Calculate the initial state counts u_0 corresponding to day t = 0.

- Recursively calculate u_{t+1} = M^T * u_t.

- Calculate DAU, WAU, and MAU for each prediction day t = 1, …, T.

This section is devoted to the technical aspects of the implementation. If you’re interested in the model’s properties rather than the code, you may skip this section and go to Section 4.

3.1 Dataset

We use a simulated dataset based on historical data from a SaaS app. The data is stored in the dau_data.csv.gz file and contains three columns: user_id, date, and registration_date. Each record indicates a day when a user was active. The dataset includes activity indicators for 51480 users from 2020-11-01 to 2023-10-31. Additionally, data from October 2020 is included to calculate user states properly, since the at_risk_mau and dormant states require data from one month prior.

```python
import pandas as pd

df = pd.read_csv('dau_data.csv.gz', compression='gzip')
df['date'] = pd.to_datetime(df['date'])
df['registration_date'] = pd.to_datetime(df['registration_date'])

print(f'Shape: {df.shape}')
print(f'Total users: {df["user_id"].nunique()}')
print(f'Data range: [{df["date"].min()}, {df["date"].max()}]')
df.head()
```

```
Shape: (667236, 3)
Total users: 51480
Data range: [2020-10-01 00:00:00, 2023-10-31 00:00:00]
```

This is what the DAU time series looks like.

```python
df.groupby('date').size().plot(title='DAU, historical');
```

Suppose that today is 2023-10-31 and we want to predict the DAU metric through the end of 2024. We define a couple of global constants, PREDICTION_START and PREDICTION_END, which bound the prediction period.

```python
PREDICTION_START = '2023-11-01'
PREDICTION_END = '2024-12-31'
```

3.2 Predicting the number of new users

Let’s start with the new users prediction. We use the prophet library, one of the easiest ways to forecast time-series data. The new_users Series contains this data. We extract it from the original df dataset by selecting the rows where the registration date equals the activity date.

```python
new_users = df[df['date'] == df['registration_date']].groupby('date').size()
new_users.head()
```

```
date
2020-10-01    4
2020-10-02    4
2020-10-03    3
2020-10-04    4
2020-10-05    8
dtype: int64
```

prophet requires a time series as a DataFrame with two columns, ds and y, so we reformat the new_users Series into the new_users_prophet DataFrame. We also need to create the future variable containing the dates to predict, from prediction_start to prediction_end. This logic is implemented in the predict_new_users function. The plot below shows predictions for both past and future periods.

```python
import logging

import matplotlib.pyplot as plt
from prophet import Prophet

# suppress prophet logs
logging.getLogger('prophet').setLevel(logging.WARNING)
logging.getLogger('cmdstanpy').disabled = True


def predict_new_users(prediction_start, prediction_end, new_users_train, show_plot=True):
    """
    Forecasts a time series of new users.

    Parameters
    ----------
    prediction_start : str
        Date in YYYY-MM-DD format.
    prediction_end : str
        Date in YYYY-MM-DD format.
    new_users_train : pandas.Series
        Historical data for the time series preceding the prediction period.
    show_plot : boolean, default=True
        If True, a chart with the train and predicted time-series values is displayed.

    Returns
    -------
    pandas.Series
        Series containing the predicted values.
    """
    m = Prophet()

    # keep only the history that precedes the prediction period
    new_users_train = new_users_train.loc[new_users_train.index < prediction_start]
    new_users_prophet = pd.DataFrame({
        'ds': new_users_train.index,
        'y': new_users_train.values
    })
    m.fit(new_users_prophet)

    periods = len(pd.date_range(prediction_start, prediction_end))
    future = m.make_future_dataframe(periods=periods)
    new_users_pred = m.predict(future)
    if show_plot:
        m.plot(new_users_pred)
        plt.title('New users prediction');

    # integer counts, clipped at zero, indexed by date
    new_users_pred = (
        new_users_pred
        .assign(yhat=lambda _df: _df['yhat'].astype(int))
        .rename(columns={'ds': 'date', 'yhat': 'count'})
        .set_index('date')
        .clip(lower=0)
        ['count']
    )
    return new_users_pred


new_users_pred = predict_new_users(PREDICTION_START, PREDICTION_END, new_users)
```

The new_users_pred Series stores the predicted number of new users.

```python
new_users_pred.tail(5)
```

```
date
2024-12-27    52
2024-12-28    56
2024-12-29    71
2024-12-30    79
2024-12-31    74
Name: count, dtype: int64
```

3.3 Getting the states

In practice, most of these calculations are best executed as SQL queries against the database where the data is stored. Hereafter, we simulate such querying with the duckdb library.

We want to assign one of the 7 states to each day of a user’s lifetime in the app. According to the definition, for each day we need to consider at least the past 30 days. This is where SQL window functions come in. However, since the df data contains only records of active days, we need to extend it explicitly with the days when a user was not active. In other words, instead of this list of records:

| user_id | date | registration_date |
|---|---|---|
| 1234567 | 2023-01-01 | 2023-01-01 |
| 1234567 | 2023-01-03 | 2023-01-01 |

we’d like to get a list like this:

| user_id | date | is_active | registration_date |
|---|---|---|---|
| 1234567 | 2023-01-01 | TRUE | 2023-01-01 |
| 1234567 | 2023-01-02 | FALSE | 2023-01-01 |
| 1234567 | 2023-01-03 | TRUE | 2023-01-01 |
| 1234567 | 2023-01-04 | FALSE | 2023-01-01 |
| 1234567 | 2023-01-05 | FALSE | 2023-01-01 |
| ... | ... | ... | ... |
| 1234567 | 2023-10-31 | FALSE | 2023-01-01 |
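For readers who prefer pandas, here is a rough equivalent of this expansion, applied to the two-record example above. It is a sketch rather than the approach the article uses (the SQL query below does the real work). `densify_activity` is a hypothetical helper, and for simplicity it starts each user’s range at the registration date instead of at greatest(registration_date, OBSERVATION_START). The per-user loop is fine for illustration but slow on large data, which is one reason to push this step into the database.

```python
import pandas as pd


def densify_activity(df, end_date):
    """Hypothetical helper: one row per user per day, with an is_active flag."""
    frames = []
    for (user_id, reg_date), g in df.groupby(['user_id', 'registration_date']):
        dates = pd.date_range(reg_date, end_date, freq='D')
        frames.append(pd.DataFrame({
            'user_id': user_id,
            'date': dates,
            'is_active': dates.isin(g['date']),  # True only on recorded active days
            'registration_date': reg_date,
        }))
    return pd.concat(frames, ignore_index=True)


raw = pd.DataFrame({
    'user_id': [1234567, 1234567],
    'date': pd.to_datetime(['2023-01-01', '2023-01-03']),
    'registration_date': pd.to_datetime(['2023-01-01', '2023-01-01']),
})
full = densify_activity(raw, '2023-01-05')
```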

For readability, we split the following SQL query into several subqueries:

- full_range: create a full sequence of dates for each user.

- dau_full: get the full list of both active and inactive records.

- states: assign one of the 7 states to each day of a user’s lifetime.

```python
import duckdb

DATASET_START = '2020-11-01'
DATASET_END = '2023-10-31'
OBSERVATION_START = '2020-10-01'

query = f"""
WITH
full_range AS (
    SELECT
        user_id,
        UNNEST(generate_series(greatest(registration_date, '{OBSERVATION_START}'), date '{DATASET_END}', INTERVAL 1 DAY))::date AS date
    FROM (
        SELECT DISTINCT user_id, registration_date FROM df
    )
),
dau_full AS (
    SELECT
        fr.user_id,
        fr.date,
        df.date IS NOT NULL AS is_active,
        registration_date
    FROM full_range AS fr
    LEFT JOIN df USING(user_id, date)
),
states AS (
    SELECT
        user_id,
        date,
        is_active,
        first_value(registration_date IGNORE NULLS) OVER (PARTITION BY user_id ORDER BY date) AS registration_date,
        SUM(is_active::int) OVER (PARTITION BY user_id ORDER BY date ROWS BETWEEN 6 PRECEDING AND 1 PRECEDING) AS active_days_back_6d,
        SUM(is_active::int) OVER (PARTITION BY user_id ORDER BY date ROWS BETWEEN 29 PRECEDING AND 1 PRECEDING) AS active_days_back_29d,
        CASE
            WHEN date = registration_date THEN 'new'
            WHEN is_active = TRUE AND active_days_back_6d BETWEEN 1 AND 6 THEN 'current'
            WHEN is_active = TRUE AND active_days_back_6d = 0 AND IFNULL(active_days_back_29d, 0) > 0 THEN 'reactivated'
            WHEN is_active = TRUE AND active_days_back_6d = 0 AND IFNULL(active_days_back_29d, 0) = 0 THEN 'resurrected'
            WHEN is_active = FALSE AND active_days_back_6d > 0 THEN 'at_risk_wau'
            WHEN is_active = FALSE AND active_days_back_6d = 0 AND IFNULL(active_days_back_29d, 0) > 0 THEN 'at_risk_mau'
            ELSE 'dormant'
        END AS state
    FROM dau_full
)
SELECT user_id, date, state FROM states
WHERE date BETWEEN '{DATASET_START}' AND '{DATASET_END}'
ORDER BY user_id, date
"""
states = duckdb.sql(query).df()
```
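The window sums are the subtle part of the query: ROWS BETWEEN 6 PRECEDING AND 1 PRECEDING excludes the current day. A pandas analogue, assuming one row per user per consecutive day as produced by dau_full, is to shift the activity flag by one row and then take a rolling sum. The helper name `add_window_counts` is hypothetical. As in SQL, each user’s first day gets a missing value (NaN instead of NULL) because its window is empty.

```python
import pandas as pd


def add_window_counts(dau_full):
    """Pandas analogue of the active_days_back_6d / active_days_back_29d window sums."""
    dau_full = dau_full.sort_values(['user_id', 'date']).copy()
    active = dau_full['is_active'].astype(int)
    grouped = active.groupby(dau_full['user_id'])
    # shift(1) drops today from the window; rolling(k) then sums the k preceding rows
    dau_full['active_days_back_6d'] = grouped.transform(
        lambda s: s.shift(1).rolling(6, min_periods=1).sum())
    dau_full['active_days_back_29d'] = grouped.transform(
        lambda s: s.shift(1).rolling(29, min_periods=1).sum())
    return dau_full


toy = pd.DataFrame({
    'user_id': [1] * 5,
    'date': pd.date_range('2023-01-01', periods=5),
    'is_active': [True, False, True, False, False],
})
windows = add_window_counts(toy)
```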

The query results are stored in the states DataFrame.

3.4 Calculating the transition matrix

With these states in hand, we can calculate state transition frequencies. In Section 4.3 we’ll study how the prediction depends on the period over which transitions are considered, so it makes sense to pre-aggregate this data daily. The resulting transitions DataFrame contains the date, state_from, state_to, and cnt columns.
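The code that builds transitions is not shown here. Below is a minimal pandas sketch of one way to produce it from the states DataFrame: pair each user’s state with their state on the previous day, then count the pairs per date. The function name `count_transitions` is hypothetical, and so is the convention of dating each transition by the day on which state_to is observed.

```python
import pandas as pd


def count_transitions(states):
    """Hypothetical sketch: daily counts of state_from -> state_to pairs.

    Assumes one row per user per consecutive day with columns user_id, date, state.
    Each transition is dated by the day on which state_to is observed.
    """
    states = states.sort_values(['user_id', 'date'])
    # state of the same user on the previous day (NaN for the user's first row)
    state_from = states.groupby('user_id')['state'].shift(1)
    return (
        states.assign(state_from=state_from, state_to=states['state'])
        .dropna(subset=['state_from'])
        .groupby(['date', 'state_from', 'state_to'])
        .size()
        .reset_index(name='cnt')
    )


# usage with the states DataFrame from Section 3.3:
# transitions = count_transitions(states)
```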

Now we can calculate the transition matrix M. We implement the get_transition_matrix function, which accepts the transitions DataFrame and a pair of dates bounding the period of transitions to consider.
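The body of get_transition_matrix is not reproduced in this text. Here is a hypothetical sketch with the described signature, not the author’s implementation. It sums cnt over the period, arranges the counts into a 7×7 table ordered by an assumed `states_order` list (the article’s code uses that name, but its definition is not shown), and divides each row by its total. It assumes the date column has a datetime dtype. Rows with no observed transitions are left as zeros.

```python
import pandas as pd

# Assumed ordering of the 7 states; any fixed order works if it is used consistently.
states_order = ['new', 'current', 'reactivated', 'resurrected',
                'at_risk_wau', 'at_risk_mau', 'dormant']


def get_transition_matrix(transitions, date1, date2):
    """Sketch: row-normalized transition frequencies between date1 and date2 (inclusive)."""
    mask = transitions['date'].between(pd.to_datetime(date1), pd.to_datetime(date2))
    counts = (
        transitions[mask]
        .pivot_table(index='state_from', columns='state_to', values='cnt',
                     aggfunc='sum', fill_value=0)
        .reindex(index=states_order, columns=states_order, fill_value=0)
    )
    # divide each row by its total so that entry (i, j) estimates P(s_j | s_i)
    return counts.div(counts.sum(axis=1), axis=0).fillna(0)
```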

As a baseline, let’s calculate the transition matrix for the whole year from 2022-11-01 to 2023-10-31.

```python
M = get_transition_matrix(transitions, '2022-11-01', '2023-10-31')
M
```

Each row of a transition matrix sums to 1, since it holds the probabilities of moving from one state to every possible state (including staying in the same one).

3.5 Getting the initial state counts

The initial state is retrieved from the states DataFrame by the get_state0 function and its SQL query. The function’s only argument is the date for which we want the initial state. We assign the result to the state0 variable.

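```python
def get_state0(date):
    query = f"""
    SELECT state, count(*) AS cnt
    FROM states
    WHERE date = '{date}'
    GROUP BY state
    """
    state0 = duckdb.sql(query).df()
    # states_order is the ordered list of the 7 state names;
    # a state with no users on this date gets a count of 0 instead of NaN
    state0 = state0.set_index('state').reindex(states_order)['cnt'].fillna(0).astype(int)
    return state0


state0 = get_state0(DATASET_END)
state0
```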

```
state
new               20
current          475
reactivated       15
resurrected       19
at_risk_wau      404
at_risk_mau     1024
dormant        49523
Name: cnt, dtype: int64
```

3.6 Predicting DAU

The predict_dau function below accepts all the previously computed variables required for the DAU prediction and makes the prediction for the date range defined by the start_date and end_date arguments.

```python
def predict_dau(M, state0, start_date, end_date, new_users):
    """
    Predicts DAU over a given date range.

    Parameters
    ----------
    M : pandas.DataFrame
        Transition matrix representing user state changes.
    state0 : pandas.Series
        Counts of users in each state on the day before start_date.
    start_date : str
        Start date of the prediction period in 'YYYY-MM-DD' format.
    end_date : str
        End date of the prediction period in 'YYYY-MM-DD' format.
    new_users : int or pandas.Series
        The expected number of new users for each day between `start_date` and `end_date`.
        If a Series, it should have dates as the index.
        If an int, the same number is used for each day.

    Returns
    -------
    pandas.DataFrame
        DataFrame containing the predicted DAU, WAU, and MAU for each day in the date range,
        with columns for the individual user states and the totals.
    """
    dates = pd.date_range(start_date, end_date)
    dates.name = 'date'
    dau_pred = []
    new_dau = state0.copy()
    for date in dates:
        # one Markov step, u_{t+1} = M^T * u_t (counts are truncated to integers)
        new_dau = (M.transpose() @ new_dau).astype(int)
        if isinstance(new_users, int):
            new_users_today = new_users
        else:
            new_users_today = new_users.astype(int).loc[date]
        # the 'new' count comes from the new-users forecast rather than from transitions
        new_dau.loc['new'] = new_users_today
        dau_pred.append(new_dau.tolist())

    dau_pred = pd.DataFrame(dau_pred, index=dates, columns=states_order)
    dau_pred['dau'] = dau_pred['new'] + dau_pred['current'] + dau_pred['reactivated'] + dau_pred['resurrected']
    dau_pred['wau'] = dau_pred['dau'] + dau_pred['at_risk_wau']
    dau_pred['mau'] = dau_pred['dau'] + dau_pred['at_risk_wau'] + dau_pred['at_risk_mau']
    return dau_pred


dau_pred = predict_dau(M, state0, PREDICTION_START, PREDICTION_END, new_users_pred)
dau_pred
```

This is what the DAU prediction dau_pred looks like for the PREDICTION_START to PREDICTION_END period. Besides the predicted dau, wau, and mau columns, the output contains the number of users in each state for each prediction date.

Finally, we calculate the ground-truth values of DAU, WAU, and MAU (along with the user state counts), store them in the dau_true DataFrame, and plot the predicted and true values together.

```python
query = """
SELECT date, state, COUNT(*) AS cnt
FROM states
GROUP BY date, state
ORDER BY date, state;
"""
dau_true = duckdb.sql(query).df()
dau_true['date'] = pd.to_datetime(dau_true['date'])
dau_true = dau_true.pivot(index='date', columns='state', values='cnt')
dau_true['dau'] = dau_true['new'] + dau_true['current'] + dau_true['reactivated'] + dau_true['resurrected']
dau_true['wau'] = dau_true['dau'] + dau_true['at_risk_wau']
dau_true['mau'] = dau_true['dau'] + dau_true['at_risk_wau'] + dau_true['at_risk_mau']
dau_true.head()

(
    pd.concat([dau_true['dau'], dau_pred['dau']])
    .plot(title='DAU, historical & predicted')
);
plt.axvline(PREDICTION_START, color='k', linestyle='--');
```

We’ve obtained the prediction, but so far it’s not clear whether it is accurate. In the next section, we’ll evaluate the model.

4.1 Baseline model

First of all, let’s check whether we really need a complex model to predict DAU. Wouldn’t it be better to predict DAU as a standard time series using the aforementioned prophet library? The predict_dau_prophet function below implements this. We use several tweaks available in the library to make the prediction more accurate. In particular:

- we use a logistic growth model instead of a linear one to avoid negative values;

- we explicitly add monthly and yearly seasonality;

- we remove the outliers;

- we explicitly define a peak period in January and February as “holidays”.

```python
def predict_dau_prophet(prediction_start, prediction_end, dau_true, show_plot=True):
    # assigning peak days for the new year
    holidays = pd.DataFrame({
        'holiday': 'january_spike',
        'ds': pd.date_range('2022-01-01', '2022-01-31', freq='D').tolist() +
              pd.date_range('2023-01-01', '2023-01-31', freq='D').tolist(),
        'lower_window': 0,
        'upper_window': 40
    })
    m = Prophet(growth='logistic', holidays=holidays)
    m.add_seasonality(name='monthly', period=30.5, fourier_order=3)
    m.add_seasonality(name='yearly', period=365, fourier_order=3)

    train = dau_true.loc[(dau_true.index < prediction_start) & (dau_true.index >= '2021-08-01')]
    train_prophet = pd.DataFrame({'ds': train.index, 'y': train.values})
    # removing outliers
    train_prophet.loc[train_prophet['ds'].between('2022-06-07', '2022-06-09'), 'y'] = None
    train_prophet['new_year_peak'] = (
        (train_prophet['ds'] >= '2022-01-01') & (train_prophet['ds'] <= '2022-02-14')
    )
    m.add_regressor('new_year_peak')
    # setting logistic upper and lower bounds
    train_prophet['cap'] = dau_true.max() * 1.1
    train_prophet['floor'] = 0

    m.fit(train_prophet)

    periods = len(pd.date_range(prediction_start, prediction_end))
    future = m.make_future_dataframe(periods=periods)
    future['new_year_peak'] = (future['ds'] >= '2022-01-01') & (future['ds'] <= '2022-02-14')
    future['cap'] = dau_true.max() * 1.1
    future['floor'] = 0
    pred = m.predict(future)

    if show_plot:
        m.plot(pred);

    # converting the predictions to an appropriate format
    pred = (
        pred
        .assign(yhat=lambda _df: _df['yhat'].astype(int))
        .rename(columns={'ds': 'date', 'yhat': 'count'})
        .set_index('date')
        .clip(lower=0)
        ['count']
        .loc[lambda s: (s.index >= prediction_start) & (s.index <= prediction_end)]
    )
    return pred
```

The fact that the code is this involved shows that one can’t simply apply prophet to the DAU time series out of the box.

Hereafter we test the predictions for several prediction horizons: 3, 6, and 12 months. As a result, we get 3 test sets:

- 3-month horizon: 2023-08-01 to 2023-10-31;

- 6-month horizon: 2023-05-01 to 2023-10-31;

- 1-year horizon: 2022-11-01 to 2023-10-31.

For each test set we calculate the MAPE loss function.
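For reference, MAPE averages the absolute errors relative to the true values: MAPE = (1/n) Σ |ŷ_t - y_t| / |y_t|. The sketch below follows the formula used by scikit-learn’s mean_absolute_percentage_error, including a tiny epsilon in the denominator to avoid division by zero. The name `mape_score` is hypothetical. Note that the result is a fraction, so 0.18 corresponds to 18%.

```python
import numpy as np


def mape_score(y_true, y_pred):
    """Mean absolute percentage error as a fraction (0.18 means 18%)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    # like scikit-learn, floor the denominator at machine epsilon to avoid division by zero
    eps = np.finfo(np.float64).eps
    return float(np.mean(np.abs(y_pred - y_true) / np.maximum(np.abs(y_true), eps)))


print(mape_score([100, 200], [110, 180]))  # both errors are 10%, so the MAPE is 0.1
```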

```python
from sklearn.metrics import mean_absolute_percentage_error

mapes = []
prediction_end = '2023-10-31'
prediction_horizon = [3, 6, 12]

for offset in prediction_horizon:
    # the prediction starts on the first day of the month, offset - 1 months before the end
    prediction_start = pd.to_datetime(prediction_end) - pd.DateOffset(months=offset - 1)
    prediction_start = prediction_start.replace(day=1)
    pred = predict_dau_prophet(prediction_start, prediction_end, dau_true['dau'], show_plot=False)
    mape = mean_absolute_percentage_error(dau_true['dau'].reindex(pred.index), pred)
    mapes.append(mape)

mapes = pd.DataFrame({'horizon': prediction_horizon, 'MAPE': mapes})
mapes
```

The MAPE turns out to be high: from 18% to 35%. The fact that the shortest horizon has the highest error means that the model is tuned for long-term predictions. This is another inconvenience of such an approach: we have to tune the model for each prediction horizon. Anyway, this is our baseline. In the next section we’ll compare it with more advanced models.

4.2 General evaluation

In this section we evaluate the model implemented in Section 3.6. For now, we set the transition period to the year preceding the prediction start; we’ll study how the prediction depends on the transition period in Section 4.3. For new users, we run the model with two options: the real values and the predicted ones. We reuse the same 3 prediction horizons as above.

The make_prediction helper function below implements these options. It accepts the prediction_start and prediction_end arguments, which define the prediction period for a given horizon; new_users_mode, which can be either 'true' or 'predict'; and transition_period. The options of the latter argument will be explained later.

```python
import re


def make_prediction(prediction_start, prediction_end, new_users_mode='predict', transition_period='last_30d'):
    prediction_start_minus_1d = pd.to_datetime(prediction_start) - pd.Timedelta('1d')
    state0 = get_state0(prediction_start_minus_1d)

    if new_users_mode == 'predict':
        new_users_pred = predict_new_users(prediction_start, prediction_end, new_users, show_plot=False)
    elif new_users_mode == 'true':
        new_users_pred = new_users.copy()

    if transition_period.startswith('last_'):
        # 'last_<N>d': a single matrix estimated on the N days before the prediction start
        shift = int(re.search(r'last_(\d+)d', transition_period).group(1))
        transitions_start = pd.to_datetime(prediction_start) - pd.Timedelta(shift, 'd')
        M = get_transition_matrix(transitions, transitions_start, prediction_start_minus_1d)
        dau_pred = predict_dau(M, state0, prediction_start, prediction_end, new_users_pred)
    else:
        # base matrix on the last 240 days, mixed month by month with a seasonal matrix
        transitions_start = pd.to_datetime(prediction_start) - pd.Timedelta(240, 'd')
        M_base = get_transition_matrix(transitions, transitions_start, prediction_start_minus_1d)
        dau_pred = pd.DataFrame()

        month_starts = pd.date_range(prediction_start, prediction_end, freq='1MS')
        N = len(month_starts)

        for i, prediction_month_start in enumerate(month_starts):
            prediction_month_end = pd.offsets.MonthEnd().rollforward(prediction_month_start)
            # the same month one year earlier
            transitions_month_start = prediction_month_start - pd.Timedelta('365D')
            transitions_month_end = prediction_month_end - pd.Timedelta('365D')
            M_seasonal = get_transition_matrix(transitions, transitions_month_start, transitions_month_end)

            if transition_period == 'smoothing':
                i = min(i, 12)
                M = M_seasonal * i / (N - 1) + (1 - i / (N - 1)) * M_base
            elif transition_period.startswith('seasonal_'):
                seasonal_coef = float(re.search(r'seasonal_(0\.\d+)', transition_period).group(1))
                M = seasonal_coef * M_seasonal + (1 - seasonal_coef) * M_base

            dau_tmp = predict_dau(M, state0, prediction_month_start, prediction_month_end, new_users_pred)
            dau_pred = pd.concat([dau_pred, dau_tmp])
            # the last predicted day of this month seeds the next month
            state0 = dau_tmp.loc[prediction_month_end][states_order]

    return dau_pred


def prediction_details(dau_true, dau_pred, show_plot=True, ax=None):
    y_true = dau_true.reindex(dau_pred.index)['dau']
    y_pred = dau_pred['dau']
    mape = mean_absolute_percentage_error(y_true, y_pred)

    if show_plot:
        prediction_start = str(y_true.index.min().date())
        prediction_end = str(y_true.index.max().date())
        if ax is None:
            y_true.plot(label='DAU true')
            y_pred.plot(label='DAU pred')
            plt.title(f'DAU prediction, {prediction_start} - {prediction_end}')
            plt.legend()
        else:
            y_true.plot(label='DAU true', ax=ax)
            y_pred.plot(label='DAU pred', ax=ax)
            ax.set_title(f'DAU prediction, {prediction_start} - {prediction_end}')
            ax.legend()
    return mape
```

In total, we have 6 prediction scenarios: 2 options for new users times 3 prediction horizons. The diagram below illustrates the results. The charts on the left correspond to the new_users_mode = 'predict' option, and the ones on the right to the new_users_mode = 'true' option.

```python
fig, axs = plt.subplots(3, 2, figsize=(15, 6))
mapes = []
prediction_end = '2023-10-31'
prediction_horizon = [3, 6, 12]

for i, offset in enumerate(prediction_horizon):
    prediction_start = pd.to_datetime(prediction_end) - pd.DateOffset(months=offset - 1)
    prediction_start = prediction_start.replace(day=1)
    args = {
        'prediction_start': prediction_start,
        'prediction_end': prediction_end,
        'transition_period': 'last_365d'
    }
    for j, new_users_mode in enumerate(['predict', 'true']):
        args['new_users_mode'] = new_users_mode
        dau_pred = make_prediction(**args)
        mape = prediction_details(dau_true, dau_pred, ax=axs[i, j])
        mapes.append([offset, new_users_mode, mape])

mapes = pd.DataFrame(mapes, columns=['horizon', 'new_users', 'MAPE'])
plt.tight_layout()
```

And here are the MAPE values summarizing the prediction quality:

```python
mapes.pivot(index='horizon', columns='new_users', values='MAPE')
```

We notice several things.

- In general, the model performs much better than the baseline. Indeed, the baseline relies on the historical DAU data only, while the model uses the user state information.

- However, for the 1-year horizon with new_users_mode='predict', the MAPE is huge: 65%. This is 3 times higher than the corresponding baseline error (21%). On the other hand, the new_users_mode='true' option gives a much better result: 8%. This means the new users prediction has a huge impact on the model, especially for long-term predictions. For shorter periods the difference is less dramatic. The main reason for the gap is that the 1-year period includes Christmas with its extreme values. As a result, i) it’s hard to predict such high numbers of new users, and ii) the period heavily affects user behavior, the transition matrix, and, consequently, the DAU values. Hence, we strongly recommend implementing the new users prediction carefully. The baseline model was specially tuned for this Christmas period, so it’s not surprising that it outperforms the Markov model here.

- When the new users prediction is accurate, the model captures trends well. This suggests that using the last 365 days for the transition matrix is a reasonable choice.

- Interestingly, the true new users data gives worse results for the 3-month prediction. This is nothing but a coincidence: the wrong new users prediction in October 2023 reversed the predicted DAU trend and happened to make the MAPE a bit lower.

Now, let’s decompose the prediction error and see which states contribute the most. By error we mean the dau_pred - dau_true values, and by relative error, (dau_pred - dau_true) / dau_true; see the left and right diagrams below, respectively. To focus on this aspect, we narrow the configuration to the 3-month prediction horizon and the new_users_mode='true' option.

```python
dau_component_cols = ['new', 'current', 'reactivated', 'resurrected']
dau_pred = make_prediction('2023-08-01', '2023-10-31', new_users_mode='true', transition_period='last_365d')

figure, (ax1, ax2) = plt.subplots(1, 2, figsize=(12, 4))

(
    dau_pred[dau_component_cols]
    .subtract(dau_true[dau_component_cols])
    .reindex(dau_pred.index)
    .plot(title='Prediction error by state', ax=ax1)
)
(
    dau_pred[['current']]
    .subtract(dau_true[['current']])
    .div(dau_true[['current']])
    .reindex(dau_pred.index)
    .plot(title='Relative prediction error (current state)', ax=ax2)
);
```

The left chart shows that most of the error comes from the current state. This is not surprising, since this state contributes the most to DAU. The error for the reactivated and resurrected states is quite low. Another interesting observation is that the error is mostly negative for the current state and mostly positive for the resurrected state. The former might be explained by the new users who appeared in the prediction period being more engaged than users from the past. The latter indicates that resurrected users actually contribute less to DAU than the transition matrix expects, so the dormant → resurrected conversion rate is overestimated.

As for the relative error, it makes sense to analyze it for the current state only: the daily numbers of reactivated and resurrected users are low, so their relative errors are high and noisy. The relative error for the current state ranges from -25% to 4%, which is quite high. Since we use the true new user counts, this error is explained by the inaccuracy of the transition matrix alone. In particular, the current → current conversion rate is roughly 0.8, which is high and, as a result, contributes a lot to the error. So if we want to improve the prediction, tuning this conversion rate should come first.
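A simplified one-state approximation helps explain why a high current → current rate amplifies errors. Suppose the current state keeps a fraction p of its users each day and receives a roughly constant inflow a from other states. Then x_{t+1} = p * x_t + a, whose fixed point is x* = a / (1 - p). The relative sensitivity of x* to p is 1 / (1 - p), which equals 5 at p = 0.8, so a one-percentage-point error in p shifts the long-run current count by about 5%. This ignores feedback through the other states, so treat it as intuition rather than an exact error budget. The function names and the inflow value are hypothetical.

```python
def steady_state(inflow, p_stay):
    """Fixed point of x = p_stay * x + inflow (requires 0 <= p_stay < 1)."""
    return inflow / (1.0 - p_stay)


def simulate(inflow, p_stay, x0, days):
    """Iterate x_{t+1} = p_stay * x_t + inflow for the given number of days."""
    x = x0
    for _ in range(days):
        x = p_stay * x + inflow
    return x


base = steady_state(100, 0.80)
shifted = steady_state(100, 0.81)
print(f'{base:.1f} -> {shifted:.1f} ({shifted / base - 1:+.1%})')  # 500.0 -> 526.3 (+5.3%)
```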

4.3 Impact of the transitions period

In the previous section we kept the transitions period fixed at 1 year before the prediction start. Now we’ll study how long this period should be to get a more accurate prediction. We consider the same prediction horizons of 3, 6, and 12 months. To remove the noise from the new users prediction, we use the real numbers of new users: new_users_mode='true'.

This is where the transition_period argument comes into play. Its values follow the last_<N>d pattern, where N is the number of days in the transitions period. For each prediction horizon we try 12 transition periods of 1, 2, …, 12 months (approximated as multiples of 30 days). Then we calculate the MAPE for each option and plot the results.

```python
result = []
for prediction_offset in prediction_horizon:
    prediction_start = pd.to_datetime(prediction_end) - pd.DateOffset(months=prediction_offset - 1)
    prediction_start = prediction_start.replace(day=1)
    for transition_offset in range(1, 13):
        dau_pred = make_prediction(
            prediction_start, prediction_end, new_users_mode='true',
            transition_period=f'last_{transition_offset*30}d'
        )
        mape = prediction_details(dau_true, dau_pred, show_plot=False)
        result.append([prediction_offset, transition_offset, mape])

result = pd.DataFrame(result, columns=['prediction_period', 'transition_period', 'mape'])
(
    result
    .pivot(index='transition_period', columns='prediction_period', values='mape')
    .plot(title='MAPE by prediction and transition period')
);
```

It turns out that the optimal transitions period depends on the prediction horizon. Shorter horizons need shorter transitions periods: the minimum MAPE is achieved with transition periods of 1, 4, and 8 months for the 3-, 6-, and 12-month horizons, respectively. Apparently, this is because longer horizons contain seasonal effects that only longer transitions periods can capture. Also, for longer prediction horizons the MAPE curve seems to be U-shaped, meaning that transitions periods that are too long and too short both hurt the prediction. We’ll develop this idea in the next section.

4.4 Obsolescence and seasonality

Nonetheless, fixing a single transition matrix for predicting the entire yr forward doesn’t appear to be a good suggestion: such a mannequin can be too inflexible. Normally, person conduct varies relying on a season. For instance, customers who seem after Christmas may need some shifts in conduct. One other typical scenario is when customers change their conduct in summer season. On this part, we’ll attempt to take into consideration these seasonal results.

So we wish to predict DAU for 1 yr forward ranging from November 2022. As a substitute of utilizing a single transition matrix M_base which is calculated for the final 8 months earlier than the prediction begin, in response to the earlier subsection outcomes (and labeled because the last_240d possibility under), we’ll take into account a combination of this matrix and a seasonal one M_seasonal. The latter is calculated on month-to-month foundation lagging 1 yr behind. For instance, to foretell DAU for November 2022 we outline M_seasonal because the transition matrix for November 2021. Then we shift the prediction horizon to December 2022 and calculate M_seasonal for December 2021, and so on.

To mix M_base and M_seasonal, we define the following two options:

- seasonal_0.3: M = 0.3 * M_seasonal + 0.7 * M_base. The weight 0.3 was chosen as a local minimum after some experiments.

- smoothing: M = i/(N - 1) * M_seasonal + (1 - i/(N - 1)) * M_base, where N is the number of months in the prediction period and i = 0, …, N - 1 is the month index. The idea of this configuration is to switch gradually from the most recent transition matrix M_base to the seasonal ones as the prediction month moves forward from the prediction start.
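Both options are convex combinations of two row-stochastic matrices, so each row of the mixed matrix still sums to 1. The sketch below uses NumPy and toy 2-state matrices with illustrative values. The function names `mix_seasonal` and `mix_smoothing` are hypothetical; the article's own implementation isn't shown here.

```python
import numpy as np


def mix_seasonal(m_base, m_seasonal, weight=0.3):
    """seasonal_0.3-style mix: weight * M_seasonal + (1 - weight) * M_base."""
    return weight * m_seasonal + (1 - weight) * m_base


def mix_smoothing(m_base, m_seasonal, i, n):
    """smoothing: the seasonal weight grows linearly from 0 at month 0
    to 1 at month N - 1 (requires N >= 2)."""
    if n < 2:
        raise ValueError('smoothing needs at least 2 months')
    w = i / (n - 1)
    return w * m_seasonal + (1 - w) * m_base


# Toy 2-state row-stochastic matrices (illustrative values only).
m_base = np.array([[0.9, 0.1], [0.2, 0.8]])
m_seasonal = np.array([[0.7, 0.3], [0.4, 0.6]])

n = 12  # months in the prediction period
for i in range(n):
    m = mix_smoothing(m_base, m_seasonal, i, n)
    # A convex combination of row-stochastic matrices stays row-stochastic.
    assert np.allclose(m.sum(axis=1), 1.0)

print(mix_seasonal(m_base, m_seasonal))
```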

consequence = pd.DataFrame()
for transition_period in ['last_240d', 'seasonal_0.3', 'smoothing']:
    # 'true': use the actual new-user counts, so only the transition matrix differs
    consequence[transition_period] = make_prediction(
        '2022-11-01', '2023-10-31',
        new_users_mode='true',
        transition_period=transition_period
    )['dau']
consequence['true'] = dau_true['dau']
consequence['true'] = consequence['true'].astype(int)
consequence.plot(title='DAU prediction by different transition matrices');

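from sklearn.metrics import mean_absolute_percentage_error

# MAPE of each configuration against the true DAU
mape = pd.DataFrame()
for col in consequence.columns:
    if col != 'true':
        mape.loc[col, 'mape'] = mean_absolute_percentage_error(consequence['true'], consequence[col])
mape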
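For reference, MAPE is the mean of |y_t - ŷ_t| / |y_t| over the prediction days. scikit-learn's `mean_absolute_percentage_error` returns it as a fraction (0.05 means 5%), not as a percentage. Below is a NumPy sketch of the same formula. It assumes the true DAU is never zero; scikit-learn guards against that case with a small epsilon. The toy values are illustrative only.

```python
import numpy as np


def mape(y_true, y_pred):
    """Mean absolute percentage error as a fraction (0.05 == 5%).
    Assumes no zeros in y_true."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return np.mean(np.abs(y_true - y_pred) / np.abs(y_true))


# Toy DAU values (illustrative only): errors of 10%, 5% and 0%.
print(mape([100, 200, 400], [110, 190, 400]))
```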

According to the MAPE values, the seasonal_0.3 configuration gives the best results. Interestingly, the smoothing approach turned out to be even worse than last_240d. From the chart above, we see that all three models start to underestimate DAU in July 2023, especially the smoothing model. It seems that the new users who started appearing in July 2023 are more engaged than the users from 2022. Probably, the app was improved significantly or the marketing team did a great job. As a result, the smoothing model, which relies heavily on the old transition data from July 2022 to October 2022, fails more than the other models.

4.5 Final solution

To sum things up, let's make a final prediction for 2024. We use the seasonal_0.3 configuration and the predicted values for new users.

dau_pred = make_prediction(
    PREDICTION_START, PREDICTION_END,
    new_users_mode='predict',
    transition_period='seasonal_0.3'
)
dau_true['dau'].plot(label='true')
dau_pred['dau'].plot(label='seasonal_0.3')
plt.title('DAU, historical & predicted')
# dashed black line marks the start of the prediction period
plt.axvline(PREDICTION_START, color='k', linestyle='--')
plt.legend();

In Section 4, we studied the model's performance from the perspective of prediction accuracy. Now let's discuss the model from a practical perspective.

Besides poor accuracy, predicting DAU as a time series (see Section 4.1) makes the approach very stiff. Essentially, it makes a prediction that fits the historical data best. In practice, when planning for the next year, we usually have certain expectations about the future. For example,

- the marketing team is going to launch some new, more effective campaigns,

- the activation team is planning to improve the onboarding process,

- the product team will release some new features that would engage and retain users better.

Our model can take such expectations into account. For the examples above, we can adjust the new users prediction, the new→current conversion rate, and the current→current conversion rate, respectively. As a result, we get a prediction that doesn't match the historical data but would nevertheless be more realistic. This property makes the model not only flexible but also interpretable. You can easily discuss all these adjustments with stakeholders, and they can understand how the prediction works.
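One way to apply such an expectation is to set a single transition probability, such as new→current, and rescale the rest of that row proportionally so it still sums to 1. The helper `adjust_transition`, the state order, and the toy probabilities below are assumptions for illustration. The article's own code may apply adjustments differently.

```python
import numpy as np

STATES = ['new', 'current', 'reactivated', 'resurrected',
          'at_risk_wau', 'at_risk_mau', 'dormant']


def adjust_transition(m, states, src, dst, new_prob):
    """Return a copy of row-stochastic matrix `m` with P(dst | src) set to
    `new_prob`; the other probabilities in row `src` are rescaled
    proportionally so that the row still sums to 1."""
    if not 0.0 <= new_prob <= 1.0:
        raise ValueError('new_prob must be in [0, 1]')
    m = np.array(m, dtype=float)
    i, j = states.index(src), states.index(dst)
    others = [k for k in range(len(states)) if k != j]
    rest = m[i, others].sum()
    if rest == 0:
        raise ValueError('row has no other probability mass to rescale')
    m[i, others] *= (1.0 - new_prob) / rest
    m[i, j] = new_prob
    return m


# Toy matrix: a new user is active the next day (current) with p = 0.3,
# otherwise becomes at_risk_wau; other rows are placeholders.
m = np.eye(len(STATES))
m[0] = 0.0
m[0, STATES.index('current')] = 0.3
m[0, STATES.index('at_risk_wau')] = 0.7

# The activation team expects new->current to rise to 0.35.
adjusted = adjust_transition(m, STATES, 'new', 'current', 0.35)
print(adjusted[0])
```

Proportional rescaling keeps the relative odds of the remaining transitions unchanged, which is usually the least surprising assumption when only one rate is being revised.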

Another advantage of the model is that it doesn't require predicting whether a particular user will be active on a particular day. Binary classifiers are often used for this purpose. The downside of that approach is that the classifier has to be applied to every user, including all the dormant ones, for every day of the prediction horizon. This is a tremendous computational cost. In contrast, the Markov model requires only the initial number of users in each state (state0). Moreover, such classifiers are often black-box models: they're poorly interpretable and hard to adjust.
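To see why state0 is enough, note that the daily state counts can be rolled forward with one vector-matrix product per day, plus the day's new users added to the `new` state. The following is a simplified sketch. It assumes a single fixed matrix M (the article mixes matrices by month), the state order listed here, and DAU defined as the sum of the states that are active today (new, current, reactivated, resurrected), as in Duolingo's model. `predict_dau` is a hypothetical function, not the article's `make_prediction`.

```python
import numpy as np

STATES = ['new', 'current', 'reactivated', 'resurrected',
          'at_risk_wau', 'at_risk_mau', 'dormant']
ACTIVE = [STATES.index(s) for s in ('new', 'current', 'reactivated', 'resurrected')]


def predict_dau(state0, m, new_users):
    """Roll user counts per state forward one day at a time.

    state0    -- user counts per state on the day before the horizon
    m         -- row-stochastic matrix, m[i, j] = P(s_j | s_i)
    new_users -- predicted number of new users for each day of the horizon
    Returns the DAU for each day of the horizon.
    """
    state = np.asarray(state0, dtype=float)
    new_idx = STATES.index('new')
    dau = []
    for n_new in new_users:
        state = state @ m          # existing users move between states
        state[new_idx] += n_new    # brand-new users enter the 'new' state
        dau.append(float(state[ACTIVE].sum()))
    return dau


# Toy matrix: new users become current or at_risk_wau with p = 0.5 each;
# every other state stays put (illustrative values only).
m = np.eye(len(STATES))
m[0] = 0.0
m[0, STATES.index('current')] = 0.5
m[0, STATES.index('at_risk_wau')] = 0.5

state0 = [0, 100, 0, 0, 0, 0, 0]
print(predict_dau(state0, m, [10, 10]))  # → [110.0, 115.0]
```

The cost is one 7×7 product per day, regardless of how many users (dormant or not) the product has.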

The Markov model also has some limitations. As we have already seen, it's sensitive to the new users prediction: a wrong number of new users can completely spoil the prediction. Another problem is that the Markov model is memoryless, meaning that it doesn't take the user's history into account. For example, it doesn't distinguish whether a current user is a newcomer, an experienced user, or a reactivated/resurrected one. The retention rates of these user types should be quite different. Also, as we discussed earlier, user behavior can differ depending on the season, marketing sources, countries, etc. So far, our model cannot capture these differences. However, this could be a subject for further research: we could extend the model by fitting separate transition matrices for different user segments.
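A minimal pandas sketch of that extension is below. It assumes a hypothetical table with one row per user-day transition and the columns `segment`, `state_from`, and `state_to`. Counting transitions with `pd.crosstab(..., normalize='index')` gives one empirical transition matrix per segment. States never observed as a source in a segment get all-zero rows, so they would need a fallback, for example the global matrix.

```python
import pandas as pd

STATES = ['new', 'current', 'reactivated', 'resurrected',
          'at_risk_wau', 'at_risk_mau', 'dormant']


def transition_matrices_by_segment(transitions: pd.DataFrame) -> dict:
    """Estimate m[i, j] = P(s_j | s_i) separately for each segment."""
    matrices = {}
    for segment, group in transitions.groupby('segment'):
        # Row-normalized transition frequencies for this segment.
        freq = pd.crosstab(group['state_from'], group['state_to'],
                           normalize='index')
        # Fixed state order; unobserved states become zero rows/columns.
        matrices[segment] = freq.reindex(index=STATES, columns=STATES,
                                         fill_value=0.0)
    return matrices


# Toy transitions (illustrative only).
toy = pd.DataFrame({
    'segment':    ['paid', 'paid', 'paid', 'organic'],
    'state_from': ['current', 'current', 'current', 'new'],
    'state_to':   ['current', 'current', 'at_risk_wau', 'current'],
})
ms = transition_matrices_by_segment(toy)
print(ms['paid'].loc['current'])
```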

Finally, as promised in the introduction, we provide a DAU spreadsheet calculator. In the Prediction sheet, fill in the initial states distribution row (marked in blue) and the new users prediction column (marked in purple). In the Conversions sheet, you can adjust the transition matrix values. Remember that each row of the matrix must sum to 1.
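If you edit the Conversions sheet and load the matrix back into Python, a quick row-sum check can catch typos. The sketch below assumes the matrix has already been read into a pandas DataFrame with states as both rows and columns. The export step and the `check_row_sums` name are hypothetical, and the toy values are illustrative.

```python
import numpy as np
import pandas as pd


def check_row_sums(matrix: pd.DataFrame, tol: float = 1e-6) -> pd.Series:
    """Return the row sums that differ from 1 by more than `tol`."""
    sums = matrix.sum(axis=1)
    return sums[~np.isclose(sums, 1.0, atol=tol)]


# Toy 3-state matrix with a typo in the 'current' row (illustrative only).
states = ['new', 'current', 'dormant']
matrix = pd.DataFrame(
    [[0.0, 0.3, 0.7],
     [0.0, 0.9, 0.2],
     [0.0, 0.01, 0.99]],
    index=states, columns=states,
)
print(check_row_sums(matrix))
```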