I research the intersection of artificial intelligence, pure language processing, and human reasoning because the director of the Advancing Human and Machine Reasoning lab on the College of South Florida. I’m additionally commercializing this analysis in an AI startup that gives a vulnerability scanner for language fashions.

From my vantage level, I noticed important developments within the area of AI language fashions in 2024, each in analysis and the business.

Maybe essentially the most thrilling of those are the capabilities of smaller language fashions, help for addressing AI hallucination, and frameworks for growing AI agents.

Small AIs make a splash

On the coronary heart of commercially obtainable generative AI merchandise like ChatGPT are giant language fashions, or LLMs, that are skilled on huge quantities of textual content and produce convincing humanlike language. Their measurement is usually measured in parameters, that are the numerical values a mannequin derives from its coaching knowledge. The bigger fashions like these from the main AI corporations have a whole lot of billions of parameters.

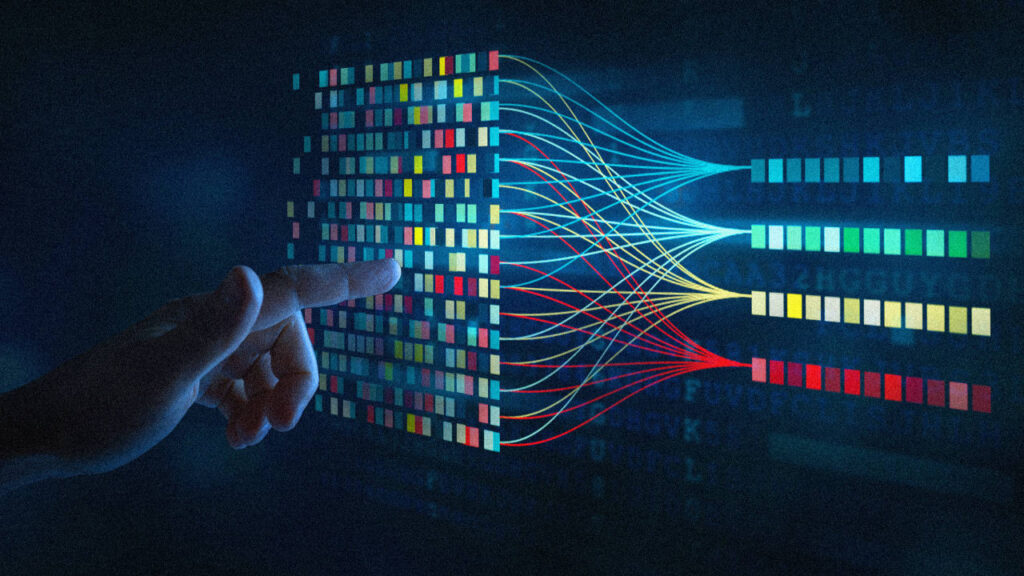

There may be an iterative interplay between large language models and smaller language models, which appears to have accelerated in 2024.

First, organizations with essentially the most computational assets experiment with and prepare more and more bigger and extra highly effective language fashions. These yield new giant language mannequin capabilities, benchmarks, coaching units, and coaching or prompting methods. In flip, these are used to make smaller language fashions—within the vary of three billion parameters or much less—which could be run on extra inexpensive laptop setups, require much less vitality and reminiscence to coach, and could be fine-tuned with much less knowledge.

It’s no shock, then, that builders have launched a number of highly effective smaller language fashions—though the definition of small retains altering: Phi-3 and Phi-4 from Microsoft, Llama-3.2 1B and 3B, and Qwen2-VL-2B are only a few examples.

These smaller language fashions could be specialised for extra particular duties, resembling quickly summarizing a set of feedback or fact-checking textual content towards a particular reference. They will work with their larger cousins to provide more and more highly effective hybrid methods. https://www.youtube.com/embed/zDj24etsRZ4?wmode=clear&begin=0 What are small language mannequin AIs – and why would you need one?

Wider entry

Elevated entry to extremely succesful language fashions giant and small generally is a blended blessing. As there have been many consequential elections all over the world in 2024, the temptation for the misuse of language fashions was excessive.

Language fashions may give malicious customers the power to generate social media posts and deceptively affect public opinion. There was a great deal of concern about this menace in 2024, provided that it was an election 12 months in lots of nations.

And certainly, a robocall faking President Joe Biden’s voice requested New Hampshire Democratic major voters to stay home. OpenAI needed to intervene to disrupt over 20 operations and deceptive networks that attempted to make use of its fashions for misleading campaigns. Pretend movies and memes have been created and shared with the assistance of AI instruments.

Regardless of the anxiety surrounding AI disinformation, it’s not yet clear what effect these efforts actually had on public opinion and the U.S. election. However, U.S. states handed a considerable amount of legislation in 2024 governing using AI in elections and campaigns.

Misbehaving bots

Google began together with AI overviews in its search outcomes, yielding some outcomes that have been hilariously and clearly flawed—until you get pleasure from glue in your pizza. Nonetheless, different outcomes could have been dangerously flawed, resembling when it advised mixing bleach and vinegar to scrub your garments.

Massive language fashions, as they’re mostly carried out, are prone to hallucinations. Because of this they will state issues which can be false or deceptive, usually with assured language. Though I and others regularly beat the drum about this, 2024 nonetheless noticed many organizations studying in regards to the risks of AI hallucination the onerous method.

Regardless of important testing, a chatbot taking part in the position of a Catholic priest advocated for baptism via Gatorade. A chatbot advising on New York City laws and regulations incorrectly stated it was “authorized for an employer to fireside a employee who complains about sexual harassment, doesn’t disclose a being pregnant or refuses to chop their dreadlocks.” And OpenAI’s speech-capable mannequin forgot whose flip it was to talk and responded to a human in her own voice.

Luckily, 2024 additionally noticed new methods to mitigate and dwell with AI hallucinations. Firms and researchers are growing instruments for ensuring AI methods follow given rules pre-deployment, in addition to environments to evaluate them. So-called guardrail frameworks examine giant language mannequin inputs and outputs in actual time, albeit usually through the use of one other layer of enormous language fashions.

And the dialog on AI regulation accelerated, inflicting the massive gamers within the giant language mannequin house to replace their insurance policies on responsibly scaling and harnessing AI.

However though researchers are regularly discovering ways to reduce hallucinations, in 2024, analysis convincingly showed that AI hallucinations are always going to exist in some form. It might be a elementary function of what occurs when an entity has finite computational and knowledge assets. In spite of everything, even human beings are recognized to confidently misremember and state falsehoods occasionally.

The rise of brokers

Massive language fashions, notably these powered by variants of the transformer architecture, are nonetheless driving essentially the most important advances in AI. For instance, builders are utilizing giant language fashions to not solely create chatbots, however to function the idea of AI brokers. The time period “agentic AI” shot to prominence in 2024, with some pundits even calling it the third wave of AI.

To know what an AI agent is, consider a chatbot expanded in two methods: First, give it entry to instruments that present the ability to take actions. This could be the power to question an exterior search engine, ebook a flight, or use a calculator. Second, give it elevated autonomy, or the power to make extra choices by itself.

For instance, a journey AI chatbot may have the ability to carry out a search of flights based mostly on what data you give it, however a tool-equipped journey agent may plan out a whole journey itinerary, together with discovering occasions, reserving reservations, and including them to your calendar.

In 2024, new frameworks for growing AI brokers emerged. Simply to call a number of, LangGraph, CrewAI, PhiData, and AutoGen/Magentic-One have been launched or improved in 2024.

Firms are simply beginning to adopt AI brokers. Frameworks for growing AI brokers are new and quickly evolving. Moreover, safety, privateness, and hallucination dangers are nonetheless a priority.

However international market analysts forecast this to change: 82% of organizations surveyed plan to use agents within 1-3 years, and 25% of all companies currently using generative AI are more likely to undertake AI brokers in 2025.

John Licato is an affiliate professor of laptop science on the Director of AMHR Lab at University of South Florida.

This text is republished from The Conversation underneath a Artistic Commons license. Learn the original article.