And with that story we start our expedition to the depths of one of the most interesting Recurrent Neural Networks: the Long Short-Term Memory networks, popularly known as LSTMs. Why do we revisit this classic? Because they may once again become useful as longer context lengths in language modeling grow in importance.

A short while ago, researchers in Austria came up with a promising initiative to revive the lost glory of LSTMs by giving way to the more evolved Extended Long Short-Term Memory, also called xLSTM. It would not be wrong to say that before Transformers, LSTMs had worn the crown for innumerable deep-learning successes. Now the question stands: with their abilities maximized and disadvantages minimized, can they compete with present-day LLMs?

To learn the answer, let’s move back in time a bit and revise what LSTMs were and what made them so special:

Long Short-Term Memory networks were first introduced in 1997 by Hochreiter and Schmidhuber to address the long-term dependency problem faced by RNNs. With around 106,518 citations on the paper, it’s no wonder that LSTMs are a classic.

The key idea in an LSTM is the ability to learn when to remember and when to forget relevant information over arbitrary time intervals. Just like us humans. Rather than starting every thought from scratch, we rely on much older information and are able to very aptly connect the dots. Of course, when talking about LSTMs, the question arises: don’t RNNs do the same thing?

The short answer is yes, they do. However, there is a big difference. The RNN architecture doesn’t support delving too far into the past, only as far as the immediate past. And that’s not very helpful.

For example, let’s consider these lines John Keats wrote in ‘To Autumn’:

“Season of mists and mellow fruitfulness,

Close bosom-friend of the maturing sun;”

As humans, we understand that the words “mists” and “mellow fruitfulness” are conceptually related to the season of autumn, evoking ideas of a specific time of year. Similarly, LSTMs can capture this notion and use it to understand the context further when the words “maturing sun” come in. Despite the separation between these words in the sequence, LSTM networks can learn to associate them and keep the previous connections intact. And this is the big difference compared to the original Recurrent Neural Network framework.

And the way LSTMs do it is with the help of a gating mechanism. If we consider the architecture of an RNN vs an LSTM, the difference is very evident. The RNN has a very simple architecture: the past state and present input pass through an activation function to output the next state. An LSTM block, on the other hand, adds three gates on top of an RNN block: the input gate, the forget gate and the output gate, which together handle the past state along with the present input. This idea of gating is what makes all the difference.
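As a rough sketch of the simple RNN update described above, the snippet below passes the concatenated present input and past state through a tanh activation. The function name `rnn_step`, the weight matrix `W`, the bias `b`, and all the numbers are made-up illustrations, not values from any figure.

```python
import numpy as np

def rnn_step(x_t, h_prev, W, b):
    """One vanilla RNN step: next state = tanh(W @ [x_t; h_prev] + b)."""
    z = np.concatenate([x_t, h_prev])   # stack present input and past state
    return np.tanh(W @ z + b)           # a single activation, no gates

# Hypothetical sizes and values, chosen only for illustration.
x1 = np.array([1.0, 2.0])
h0 = np.array([1.0, 1.0])
W = np.array([[0.5, -0.5, 0.1, 0.0],
              [0.0, 0.2, -0.3, 0.4]])
b = np.zeros(2)

h1 = rnn_step(x1, h0, W, b)
print(h1)
```

There is nothing here that decides what to keep or discard: the whole state is overwritten at every step, which is the gap the LSTM gates are meant to fill.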

To understand things further, let’s dive into the details with these incredible works on LSTMs and xLSTMs by the amazing Prof. Tom Yeh.

First, let’s understand the mathematical cogs and wheels behind LSTMs before exploring their newer version.

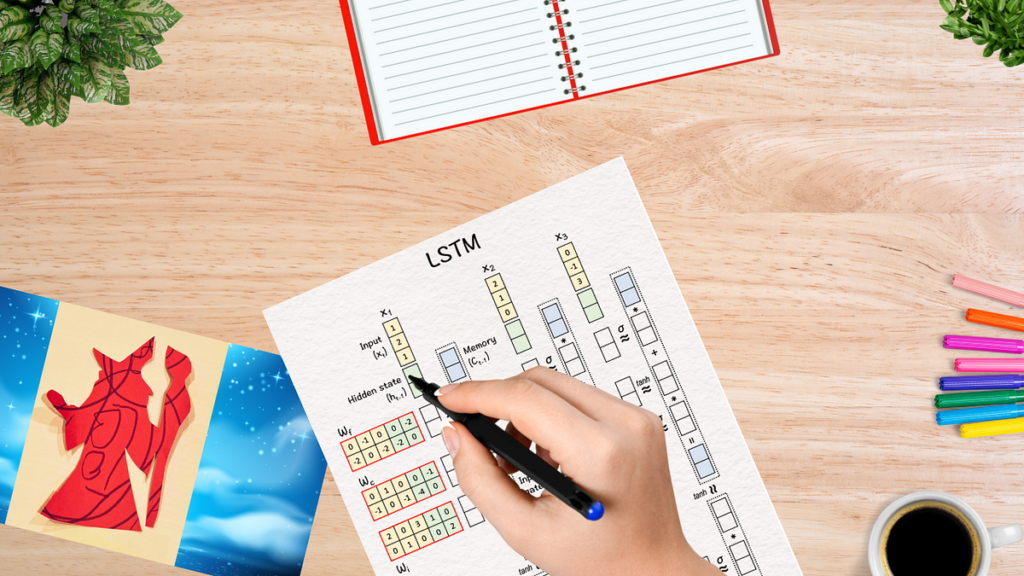

(All the images below, unless otherwise noted, are by Prof. Tom Yeh from the above-mentioned LinkedIn posts, which I have edited with his permission.)

So, here we go:

[1] Initialize

The first step begins with randomly assigning values to the previous hidden state h0 and memory cells C0. Keeping it in sync with the diagrams, we set

h0 → [1,1]

C0 → [0.3, -0.5]

[2] Linear Transform

In the next step, we perform a linear transform by multiplying the four weight matrices (Wf, Wc, Wi and Wo) with the concatenation of the current input X1 and the previous hidden state that we assigned in the previous step.

The resulting values are called feature values, obtained as the combination of the current input and the hidden state.
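The linear transform can be sketched in NumPy as below. The source only gives h0, so the input `x1` and the four weight matrices here are hypothetical stand-ins (small random integers); the point is the shape of the computation, not the numbers in the diagram.

```python
import numpy as np

h0 = np.array([1.0, 1.0])      # previous hidden state from Step [1]
x1 = np.array([1.0, 2.0])      # hypothetical current input X1

# Hypothetical 2x4 weight matrices, one per gate / candidate memory.
rng = np.random.default_rng(0)
Wf, Wi, Wo, Wc = (rng.integers(-2, 3, size=(2, 4)).astype(float)
                  for _ in range(4))

z = np.concatenate([x1, h0])   # concatenated [X1; h0], shape (4,)

# Stacking the four matrices lets one matrix product yield all feature values.
W = np.vstack([Wf, Wi, Wo, Wc])            # shape (8, 4)
features = W @ z                           # shape (8,)
f_feat, i_feat, o_feat, c_feat = np.split(features, 4)

print(f_feat, i_feat, o_feat, c_feat)
```

Stacking the matrices is only a convenience; multiplying each of Wf, Wi, Wo and Wc with `z` separately gives the same feature values.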

[3] Non-linear Transform

This step is crucial in the LSTM process. It is a non-linear transform with two parts: a sigmoid σ and tanh.

The sigmoid is used to obtain gate values between 0 and 1. This layer essentially determines what information to retain and what to forget: a ‘0’ implies completely eliminating the information, whereas a ‘1’ implies keeping it in place.

- Forget gate (f1): [-4, -6] → [0, 0]

- Input gate (i1): [6, 4] → [1, 1]

- Output gate (o1): [4, -5] → [1, 0]

In the next part, tanh is applied to obtain new candidate memory values that could be added on top of the previous information.

- Candidate memory (C’1): [1, -6] → [0.8, -1]
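The gate and candidate values listed above can be checked with a few lines of NumPy. The variable names (`f_feat`, `c1_cand`, and so on) are my own; the feature values are the ones quoted in the text, and the hand exercise rounds the results (gates to the nearest integer, the candidate to one decimal place).

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

# Feature values from the linear transform, as quoted in the text.
f_feat = np.array([-4.0, -6.0])
i_feat = np.array([6.0, 4.0])
o_feat = np.array([4.0, -5.0])
c_feat = np.array([1.0, -6.0])

f1 = sigmoid(f_feat)        # approx. [0.018, 0.002]
i1 = sigmoid(i_feat)        # approx. [0.998, 0.982]
o1 = sigmoid(o_feat)        # approx. [0.982, 0.007]
c1_cand = np.tanh(c_feat)   # approx. [0.762, -1.000]

# Rounded as in the hand exercise.
print(np.round(f1), np.round(i1), np.round(o1), np.round(c1_cand, 1))
```

The exact sigmoid outputs are never precisely 0 or 1; the rounding just keeps the arithmetic manageable by hand.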

[4] Update Memory

Once the above values are obtained, it is time to update the current state using these values.

The previous step made the decision on what needs to be done; in this step we implement that decision.

We do so in two parts:

- Forget: Multiply the current memory values (C0) element-wise with the obtained forget-gate values. This scales down, in the current state, the values that were chosen to be forgotten. → C0 .* f1

- Input: Multiply the candidate memory values (C’1) element-wise with the input gate values to obtain the ‘input-scaled’ memory values. → C’1 .* i1

Finally, we add the two terms above to get the updated memory C1, i.e. C0 .* f1 + C’1 .* i1 = C1
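Plugging in the rounded values from the earlier steps, the memory update works out as in this small sketch (variable names are my own):

```python
import numpy as np

C0 = np.array([0.3, -0.5])       # memory from Step [1]
f1 = np.array([0.0, 0.0])        # rounded forget gate from Step [3]
i1 = np.array([1.0, 1.0])        # rounded input gate from Step [3]
c1_cand = np.array([0.8, -1.0])  # rounded candidate memory from Step [3]

forget_term = C0 * f1            # old memory is fully erased here
input_term = c1_cand * i1        # candidate memory is fully admitted
C1 = forget_term + input_term

print(C1)
```

With a forget gate of [0, 0] and an input gate of [1, 1], the new memory C1 is simply the candidate memory, [0.8, -1].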

[5] Candidate Output

Finally, we decide what the output is going to look like:

To begin, we first apply tanh as before to the new memory C1 to obtain a candidate output o’1. This pushes the values between -1 and 1.

[6] Update Hidden State

To get the final output, we multiply the candidate output o’1 obtained in the previous step element-wise with the output gate values o1 (the sigmoid output obtained in Step 3). The result is the first output of the network and is the updated hidden state h1, i.e. o’1 .* o1 = h1.
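Steps [5] and [6] can be sketched the same way, continuing from the rounded memory and output gate obtained earlier (variable names are my own):

```python
import numpy as np

C1 = np.array([0.8, -1.0])   # updated memory from Step [4]
o1 = np.array([1.0, 0.0])    # rounded output gate from Step [3]

o1_cand = np.tanh(C1)        # candidate output, approx. [0.664, -0.762]
h1 = o1_cand * o1            # the gate blocks the second component

print(h1)
```

The second entry of the memory is still stored in C1; the output gate only hides it from the hidden state at this time step.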

-- Process t = 2 --

We continue with the subsequent iterations below:

[7] Initialize

First, we copy the updates from the previous steps, i.e. the updated hidden state h1 and memory C1.

[8] Linear Transform

We repeat Step [2], which is the matrix multiplication of the weights (with their bias terms) by the concatenated current input and previous hidden state h1.

[9] Update Memory (C2)

We repeat steps [3] and [4], which are the non-linear transforms using sigmoid and tanh layers, followed by the decision on forgetting the relevant parts and introducing new information. This gives us the updated memory C2.

[10] Update Hidden State (h2)

Finally, we repeat steps [5] and [6], which together give us the second hidden state h2.

Next, we have the final iteration.

-- Process t = 3 --

[11] Initialize

Once again we copy the hidden state and memory from the previous iteration, i.e. h2 and C2.

[12] Linear Transform

We perform the same linear transform as we do in Step 2.

[13] Update Memory (C3)

Next, we perform the non-linear transforms and then the memory updates based on the values obtained during the transform.

[14] Update Hidden State (h3)

Once done, we use these values to obtain the final hidden state h3.

To summarize the working above, the key thing to remember is that an LSTM depends on three main gates: input, forget and output. And these gates, as can be inferred from the names, control what part of the information and how much of it is relevant, and which parts can be discarded.

Very briefly, the steps to do so are as follows:

- Initialize the hidden state and memory values from the previous state.

- Perform a linear transform to combine the current input with the previous hidden state.

- Apply the non-linear transform (sigmoid and tanh) to determine what values to retain/discard and to obtain new candidate memory values.

- Based on the decision (values obtained) in Step 3, we perform memory updates.

- Next, we determine what the output is going to look like based on the memory update obtained in the previous step. We obtain a candidate output here.

- We combine the candidate output with the gated output value obtained in Step 3 to finally reach the intermediate hidden state.

This loop continues for as many iterations as needed.
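Putting the steps together, one possible sketch of the whole loop over three time steps looks like this. The function `lstm_step`, the stacked weight layout (rows ordered forget, input, output, candidate), the random weights and the random inputs are all assumptions made for illustration; only h0 and C0 come from the walkthrough.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x_t, h_prev, C_prev, W, b):
    """One LSTM step. W has shape (4*H, D+H); rows ordered f, i, o, c."""
    z = np.concatenate([x_t, h_prev])                        # concatenate
    f_feat, i_feat, o_feat, c_feat = np.split(W @ z + b, 4)  # linear transform
    f, i, o = sigmoid(f_feat), sigmoid(i_feat), sigmoid(o_feat)
    c_cand = np.tanh(c_feat)                                 # candidate memory
    C = C_prev * f + c_cand * i                              # update memory
    h = np.tanh(C) * o                                       # update hidden state
    return h, C

rng = np.random.default_rng(0)
H, D = 2, 2                                  # hidden size, input size
W = rng.normal(size=(4 * H, D + H))          # hypothetical weights
b = np.zeros(4 * H)                          # hypothetical biases
xs = rng.normal(size=(3, D))                 # hypothetical inputs X1, X2, X3

h, C = np.array([1.0, 1.0]), np.array([0.3, -0.5])   # h0 and C0 from Step [1]
for t, x_t in enumerate(xs, start=1):
    h, C = lstm_step(x_t, h, C, W, b)
    print(f"t={t}: h={h}, C={C}")
```

The same weights are reused at every time step; only the hidden state and memory are carried forward, which is why each step has to wait for the previous one.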

The need for xLSTMs

When LSTMs emerged, they definitely set the platform for doing something that had not been done previously. Recurrent Neural Networks could have memory, but it was very limited, and hence the birth of the LSTM: to support long-term dependencies. However, it was not enough. Analyzing inputs as sequences obstructed the use of parallel computation and, moreover, led to drops in performance on long dependencies.

Thus, as a solution to it all, the Transformers were born. But the question still remained: can we once again use LSTMs, by addressing their limitations, to achieve what Transformers do? To answer that question came the xLSTM architecture.

How is xLSTM different from LSTM?

xLSTMs can be seen as a very evolved version of LSTMs. The underlying structure of LSTMs is preserved in xLSTM; however, new elements have been introduced which help address the drawbacks of the original form.

Exponential Gating & Scalar Memory Mixing: sLSTM

The most crucial difference is the introduction of exponential gating. In LSTMs, when we perform Step [3], we apply sigmoid gating to all gates, whereas in xLSTMs the sigmoid is replaced by exponential gating for the input gate (and, optionally, the forget gate).

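For example, for the input gate i1, the sigmoid gating

i1 = σ(ĩ1)

is now

i1 = exp(ĩ1),

where ĩ1 denotes the input-gate feature value from the linear transform in Step [2].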

With the bigger range that exponential gating provides, xLSTMs are able to handle updates better than the sigmoid function, which compresses inputs to the range (0, 1). There is a catch though: exponential values may grow to be very large. To mitigate that problem, xLSTMs incorporate normalization, and the logarithm function used in the stabilizing equations plays an important role here.

Now, the logarithm does reverse the effect of the exponential, but their combined application, as the xLSTM paper claims, leads the way to balanced states.
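To make the role of the logarithm a little more concrete, here is a minimal sketch of a stabilized exponential gate, based on my reading of the stabilizer state in the xLSTM paper. It assumes both the input and forget gates are exponential, so the log of each gate is just its feature value; the function name `stabilized_exp_gates` and the example numbers are hypothetical.

```python
import numpy as np

def stabilized_exp_gates(i_feat, f_feat, m_prev):
    """Exponential input/forget gates rescaled by a running max kept in log space."""
    # With exponential gates, log(i) = i_feat and log(f) = f_feat.
    m_t = np.maximum(f_feat + m_prev, i_feat)   # stabilizer state
    i_stab = np.exp(i_feat - m_t)               # stabilized input gate, <= 1
    f_stab = np.exp(f_feat + m_prev - m_t)      # stabilized forget gate, <= 1
    return i_stab, f_stab, m_t

# The second input feature value is so large that a plain np.exp would overflow.
i_feat = np.array([6.0, 800.0])
f_feat = np.array([-4.0, 2.0])
m_prev = np.zeros(2)

i_stab, f_stab, m_t = stabilized_exp_gates(i_feat, f_feat, m_prev)
print(i_stab, f_stab, m_t)
```

Because the stabilizer is at least as large as either exponent, both stabilized gates stay at or below 1 however large the feature values get. The normalizer state is what keeps the rescaling from changing the final output.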

This exponential gating, along with memory mixing among the different gates (as in the original LSTM), forms the sLSTM block.

The other new aspect of the xLSTM architecture is the move from a scalar memory to a matrix memory, which allows it to process more information in parallel. It also resembles the Transformer architecture by introducing key, query and value vectors and using them in the normalizer state, which is the weighted sum of key vectors, where each key vector is weighted by the input and forget gates. This matrix-memory variant is the mLSTM block.
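A minimal sketch of one matrix-memory update, following my understanding of the mLSTM equations in the xLSTM paper, is shown below. For simplicity it uses scalar input and forget gates passed in directly and omits the key scaling and gate stabilization; the function name `mlstm_step` and all values are hypothetical.

```python
import numpy as np

def mlstm_step(q, k, v, i_gate, f_gate, o_gate, C_prev, n_prev):
    """One simplified mLSTM step with matrix memory C and normalizer n."""
    C = f_gate * C_prev + i_gate * np.outer(v, k)   # store value v under key k
    n = f_gate * n_prev + i_gate * k                # gate-weighted sum of keys
    denom = max(abs(n @ q), 1.0)                    # lower-bounded normalizer
    h_tilde = (C @ q) / denom                       # retrieve with query q
    return o_gate * h_tilde, C, n

d = 4
k = np.array([1.0, 0.0, 0.0, 0.0])     # hypothetical key (unit norm)
v = np.array([0.5, -1.0, 2.0, 0.0])    # hypothetical value
C0, n0 = np.zeros((d, d)), np.zeros(d)

# Store (k, v) once, then query with the same key.
h, C1, n1 = mlstm_step(q=k, k=k, v=v, i_gate=1.0, f_gate=1.0,
                       o_gate=np.ones(d), C_prev=C0, n_prev=n0)
print(h)
```

Querying with the stored key returns the stored value, which is the key-value retrieval behaviour the matrix memory is meant to provide. Since the update itself does not depend on the previous hidden state, this form lends itself better to parallel computation.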

Once the sLSTM and mLSTM blocks are ready, they are stacked one over the other using residual connections to yield xLSTM blocks and finally the xLSTM architecture.

Thus, the introduction of exponential gating (with appropriate normalization), along with newer memory structures, establishes a strong footing for xLSTMs to achieve results similar to Transformers.

To summarize:

1. An LSTM is a special Recurrent Neural Network (RNN) that allows connecting previous information to the current state, just as we humans do with the persistence of our thoughts. LSTMs became incredibly popular because of their ability to look far into the past rather than depending only on the immediate past. What made it possible was the introduction of special gating elements into the RNN architecture:

- Forget Gate: Determines what information from the previous cell state should be kept or forgotten. By selectively forgetting irrelevant past information, the LSTM maintains long-term dependencies.

- Input Gate: Determines what new information should be stored in the cell state. By controlling how the cell state is updated, it incorporates new information important for predicting the current output.

- Output Gate: Determines what information should be output as the hidden state. By selectively exposing parts of the cell state as the output, the LSTM can provide relevant information to subsequent layers while suppressing the non-pertinent details, thus propagating only the important information over longer sequences.

2. An xLSTM is an evolved version of the LSTM that addresses the drawbacks faced by the LSTM. It is true that LSTMs are capable of handling long-term dependencies; however, the information is processed sequentially and thus doesn’t exploit the power of parallelism that today’s Transformers capitalize on. To address that, xLSTMs bring in:

- sLSTM: Exponential gating that helps to cover larger ranges as compared to sigmoid activation.

- mLSTM: New memory structures with matrix memory to enhance memory capacity and enable more efficient information retrieval.

LSTMs overall are part of the Recurrent Neural Network family, which processes information sequentially and recursively. The advent of Transformers all but obliterated the application of recurrence; however, their struggle to handle extremely long sequences still remains a burning problem. The quadratic time complexity of self-attention with respect to sequence length becomes the bottleneck for long ranges or long contexts.

Thus, it does seem worthwhile to explore options that could at least illuminate a solution path, and a good starting point would be going back to LSTMs. In short, LSTMs have a good chance of making a comeback. The present xLSTM results definitely look promising. And then, to round it all up, the use of recurrence by Mamba stands as a good testimony that this could be a lucrative path to explore.

So, let’s follow along on this journey and see it unfold while keeping in mind the power of recurrence!

P.S. If you would like to work through this exercise on your own, here is a link to a blank template for your use.

Blank Template for hand-exercise

Now go have fun and create some Long Short-Term effect!

References:

- xLSTM: Extended Long Short-Term Memory, Maximilian Beck et al., May 2024. https://arxiv.org/abs/2405.04517

- Long Short-Term Memory, Sepp Hochreiter and Jürgen Schmidhuber, 1997, Neural Comput. 9, 8 (November 15, 1997), 1735–1780. https://doi.org/10.1162/neco.1997.9.8.1735