The first thing to note is that even though there’s no explicit regularisation there are relatively clean boundaries. For example, in the top left there happened to be a bit of sparse sampling (by chance) yet both models prefer to cut off one tip of the star rather than predicting a more complex shape around the individual points. This is an important reminder that many architectural decisions act as implicit regularisers.

From our analysis we would expect focal loss to predict complicated boundaries in areas of natural complexity. Ideally, this would be an advantage of using the focal loss. But if we inspect one of the areas of natural complexity we see that both models fail to identify that there is an additional shape inside the circles.

In areas of sparse data (dead zones) we would expect focal loss to create more complex boundaries. This isn’t necessarily desirable. If the model hasn’t learned any of the underlying patterns of the data then there are infinitely many ways to draw a boundary around sparse points. Here we can contrast two sparse areas and see that focal loss has predicted a more complex boundary than the cross entropy:

The top row is from the central star and we can see that the focal loss has learned more about the pattern. The predicted boundary in the sparse region is more complex but also more correct. The bottom row is from the lower right corner and we can see that the predicted boundary is more complicated but it hasn’t learned a pattern about the shape. The smooth boundary predicted by BCE might be more desirable than the strange shape predicted by focal loss.
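As a reminder of why focal loss tends to spend more capacity on hard points, here is a minimal sketch of the two per-example losses. It assumes the form from Lin et al. without the optional alpha class weight, and `gamma = 2.0` is an illustrative value rather than the setting used in these experiments.

```python
import math


def bce(p_true: float) -> float:
    """Binary cross entropy for one example, given the probability assigned to the true class."""
    return -math.log(p_true)


def focal(p_true: float, gamma: float = 2.0) -> float:
    """Focal loss without the alpha weight: BCE scaled by (1 - p_true) ** gamma."""
    return (1.0 - p_true) ** gamma * bce(p_true)


# Easy, borderline and hard examples (probability given to the correct class).
for p in (0.95, 0.6, 0.1):
    ratio = focal(p) / bce(p)
    print(f"p_true={p:.2f}  bce={bce(p):.4f}  focal={focal(p):.4f}  focal/bce={ratio:.4f}")
```

The ratio column shows how strongly easy examples are down-weighted, which leaves the gradient dominated by hard points, whether they sit in naturally complex regions or in sparse ones.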

This qualitative analysis doesn’t help in determining which one is better. How can we quantify it? The two loss functions produce different values that can’t be compared directly. Instead we’re going to compare the accuracy of predictions. We’ll use a standard F1 score but note that different risk profiles might prefer extra weight on recall or precision.
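To make the remark about risk profiles concrete, the sketch below computes the general F-beta score, of which F1 is the `beta = 1` case. The labels and predictions are made-up illustrative values, not outputs from the models in this article.

```python
def f_beta(y_true, y_pred, beta=1.0):
    """F-beta score for binary labels; beta > 1 favours recall, beta < 1 favours precision."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision == 0.0 and recall == 0.0:
        return 0.0
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Hypothetical predictions: precision = 2/3, recall = 1/2.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0]

f1 = f_beta(y_true, y_pred, beta=1.0)
f2 = f_beta(y_true, y_pred, beta=2.0)    # recall-weighted
f05 = f_beta(y_true, y_pred, beta=0.5)   # precision-weighted
print(f1, f2, f05)
```

Because recall is the weaker of the two here, the recall-weighted score is lower than F1 and the precision-weighted score is higher.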

To assess generalisation capability we use a validation set that’s iid with our training sample. We can also use early stopping to prevent both approaches from overfitting. If we compare the validation performance of the two models we see a slight boost in F1 scores using focal loss vs binary cross entropy.

- BCE Loss: 0.936 (Validation F1)

- Focal Loss: 0.954 (Validation F1)

So it seems that the model trained with focal loss performs slightly better when applied on unseen data. So far, so good, right?

The difficulty with iid generalisation

In the standard definition of generalisation, future observations are assumed to be iid with our training distribution. But this won’t help if we want our model to learn an effective representation of the underlying process that generated the data. In this example that process involves the shapes and the symmetries that determine the decision boundary. If our model has an internal representation of those shapes and symmetries then it should perform equally well in those sparsely sampled “dead zones”.

Neither model will ever work OOD because they’ve only seen data from one distribution and cannot generalise. And it would be unfair to expect otherwise. However, we can focus on robustness in the sparse sampling regions. In the paper Machine Learning Robustness: A Primer, they mostly talk about samples from the tail of the distribution which is something we saw in our house prices models. But here we have a situation where sampling is sparse but it has nothing to do with an explicit “tail”. I’ll continue to refer to this as an “endogenous sampling bias” to highlight that tails are not explicitly required for sparsity.

In this view of robustness the endogenous sampling bias is one possibility where models may not generalise. For more powerful models we can also explore OOD and adversarial data. Consider an image model which is trained to recognise objects in urban areas but fails to work in a jungle. That would be a situation where we would expect a powerful enough model to work OOD. Adversarial examples on the other hand would involve adding noise to an image to change the statistical distribution of colours in a way that’s imperceptible to humans but causes misclassification from a non-robust model. But building models that resist adversarial and OOD perturbations is out of scope for this already long article.

Robustness to perturbation

So how do we quantify this robustness? We’ll start with an accuracy function A (we previously used the F1 score). Then we consider a perturbation function φ which we can apply either to individual points or to an entire dataset. Note that this perturbation function should preserve the relationship between predictor x and target y (i.e. we’re not purposely mislabelling examples).

Consider a model designed to predict house prices in any city: an OOD perturbation may involve finding samples from cities not in the training data. In our example we’ll focus on a modified version of the dataset which samples only from the sparse regions.

The robustness score (R) of a model (h) is a measure of how well the model performs under a perturbed dataset compared to a clean dataset.
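One form that is consistent with the results table later in this section is a simple ratio of the two accuracies. It is sketched here as an assumption rather than a quoted definition, and the symbol D for the clean dataset is introduced only for illustration:

```latex
R_{\varphi}(h) = \frac{A\big(h, \varphi(D)\big)}{A\big(h, D\big)}
```

Under this reading a score of 1 means the perturbation costs the model nothing, and lower values mean a larger relative drop.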

Consider the two models trained to predict a decision boundary: one trained with focal loss and one with binary cross entropy. Focal loss performed slightly better on the validation set which was iid with the training data. Yet we used that dataset for early stopping so there’s some subtle information leakage. Let’s compare results on:

- A validation set iid to our coaching set and used for early stopping.

- A test set iid to our training set.

- A perturbed (φ) test set where we only sample from the sparse regions I’ve called “dead zones”.

| Loss Type | Val (iid) F1 | Test (iid) F1 | Test (φ) F1 | R(φ) |
|------------|---------------|-----------------|-------------|---------|
| BCE Loss | 0.936 | 0.959 | 0.834 | 0.869 |
| Focal Loss | 0.954 | 0.941 | 0.822 | 0.874 |
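The R(φ) column can be approximately reproduced from the two test columns by dividing the perturbed score by the clean score. The sketch below assumes that ratio form, which is inferred from the table values and not taken from a stated formula; small differences in the third decimal place are expected because the table inputs are already rounded.

```python
# F1 scores copied from the table above.
scores = {
    "BCE Loss": {"test_iid": 0.959, "test_phi": 0.834},
    "Focal Loss": {"test_iid": 0.941, "test_phi": 0.822},
}


def robustness(clean: float, perturbed: float) -> float:
    """Assumed robustness score: perturbed accuracy relative to clean accuracy."""
    return perturbed / clean


ratios = {name: robustness(s["test_iid"], s["test_phi"]) for name, s in scores.items()}
for name, r in ratios.items():
    print(f"{name}: R = {r:.3f}")
```

Because the score is relative to each model’s own clean performance, a model with a lower iid test score can end up with a higher ratio while still being worse in absolute terms on the perturbed set.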

The standard bias-variance decomposition suggested that we might get more robust results with focal loss by allowing increased complexity on hard examples. We knew that this might not be ideal in all circumstances so we evaluated on a validation set to confirm. So far so good. But now that we look at the performance on a perturbed test set we can see that focal loss performed slightly worse! Yet we also see that focal loss has a slightly higher robustness score. So what is going on here?

I ran this experiment several times, each time yielding slightly different results. This was one surprising instance I wanted to highlight. The bias-variance decomposition is about how our model will perform in expectation (across different possible worlds). By contrast this robustness approach tells us how these specific models perform under perturbation. But we may need more considerations for model selection.

There are a lot of subtle lessons in these results:

- If we make significant decisions on our validation set (e.g. early stopping) then it becomes vital to have a separate test set.

- Even training on the same dataset we can get varied results. When training neural networks there are multiple sources of randomness to consider which will become important in the last part of this article.

- A weaker model may be more robust to perturbations. So model selection needs to consider more than just the robustness score.

- We may need to evaluate models on multiple perturbations to make informed decisions.

Evaluating approaches to robustness

In one approach to robustness we consider the impact of hyperparameters on model performance through the lens of the bias-variance trade-off. We can use this knowledge to understand how different kinds of training examples affect our training process. For example, we know that mislabelled data is particularly bad to use with focal loss. We can consider whether particularly hard examples could be excluded from our training data to produce more robust models. And we can better understand the role of regularisation by considering the types of hyperparameters and how they impact bias and variance.

The other perspective largely disregards the bias-variance trade-off and focuses on how our model performs on perturbed inputs. For us this meant focusing on sparsely sampled regions but may also include out of distribution (OOD) and adversarial data. One drawback to this approach is that it is evaluative and doesn’t necessarily tell us how to construct better models short of training on more (and more varied) data. A more significant drawback is that weaker models may exhibit more robustness and so we can’t exclusively use robustness score for model selection.

Regularisation and robustness

If we take the standard model trained with cross entropy loss we can plot the performance on different metrics over time: training loss, validation loss, validation_φ loss, validation accuracy, and validation_φ accuracy. We can compare the training process under the presence of different kinds of regularisation to see how it affects generalisation capability.

In this particular problem we can make some unusual observations:

- As we would expect without regularisation, as the training loss tends towards 0 the validation loss starts to increase.

- The validation_φ loss increases much more significantly because it only contains examples from the sparse “dead zones”.

- But the validation accuracy doesn’t actually get worse as the validation loss increases. What is going on here? This is something I’ve actually seen in real datasets. The model’s accuracy improves but it also becomes increasingly confident in its outputs, so when it’s wrong the loss is quite high. Using the model’s probabilities becomes useless as they all tend to 99.99% regardless of how well the model does.

- Adding regularisation prevents the validation losses from blowing up as the training loss cannot go to 0. However, it can also negatively impact the validation accuracy.

- Adding dropout and weight decay is better than just dropout, but both are worse than using no regularisation in terms of accuracy.
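The third observation above, accuracy holding up while the loss climbs, can be reproduced with a handful of numbers. The probabilities below are invented for illustration: the “late” predictions are more accurate but extremely confident, so the single remaining mistake dominates the average loss.

```python
import math


def log_loss(y_true, probs):
    """Mean binary cross entropy; probs are predicted probabilities of class 1."""
    total = 0.0
    for t, p in zip(y_true, probs):
        total += -math.log(p if t == 1 else 1.0 - p)
    return total / len(y_true)


def accuracy(y_true, probs, threshold=0.5):
    """Fraction of examples whose thresholded prediction matches the label."""
    return sum((p >= threshold) == (t == 1) for t, p in zip(y_true, probs)) / len(y_true)


y_true = [1, 1, 1, 1, 0, 0]
early = [0.80, 0.70, 0.60, 0.40, 0.30, 0.55]              # two mistakes, modest confidence
late = [0.9999, 0.9999, 0.9999, 0.9999, 0.0001, 0.9999]   # one mistake, extreme confidence

print("early:", accuracy(y_true, early), log_loss(y_true, early))
print("late: ", accuracy(y_true, late), log_loss(y_true, late))
```

Accuracy goes up between the two snapshots while the loss roughly triples, which is the same pattern as the validation curves described above.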

Reflection

If you’ve stuck with me this far into the article I hope you’ve developed an appreciation for the limitations of the bias-variance trade-off. It will always be useful to have an understanding of the typical relationship between model complexity and expected performance. But we’ve seen some interesting observations that challenge the default assumptions:

- Model complexity can change in different parts of the feature space. Hence, a single measure of complexity vs bias/variance doesn’t always capture the whole story.

- The standard measures of generalisation error don’t capture all types of generalisation, and in particular miss robustness under perturbation.

- Parts of our training sample can be harder to learn from than others and there are multiple ways in which a training example can be considered “hard”. Complexity might be necessary in naturally complex regions of the feature space but problematic in sparse areas. This sparsity can be driven by endogenous sampling bias and so comparing performance to an iid test set can give false impressions.

- As always we need to factor in risk and risk minimisation. If you expect all future inputs to be iid with the training data it would be detrimental to focus on sparse regions or OOD data, especially if tail risks don’t carry major consequences. But we’ve seen that tail risks can have unique consequences so it’s important to construct an appropriate test set for your particular problem.

- Simply testing a model’s robustness to perturbations isn’t sufficient for model selection. A decision about the generalisation capability of a model can only be made under a proper risk assessment.

- The bias-variance trade-off only concerns the expected loss for models averaged over possible worlds. It doesn’t necessarily tell us how accurate our model will be using hard classification boundaries. This can lead to counter-intuitive results.
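The “averaged over possible worlds” idea in the last point can be simulated directly. The sketch below is a toy stand-in and not the classifier from this article: each world is a fresh sample from the same process, and we compare an unbiased estimator of the mean with a deliberately shrunk one. All names and constants are illustrative assumptions.

```python
import random
import statistics


def simulate(estimator, true_mean=2.0, n=10, worlds=2000, seed=0):
    """Estimate squared bias and variance of an estimator across many sampled worlds."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(worlds):
        # One possible world: a new training sample drawn from the same process.
        sample = [rng.gauss(true_mean, 1.0) for _ in range(n)]
        estimates.append(estimator(sample))
    bias_sq = (statistics.fmean(estimates) - true_mean) ** 2
    variance = statistics.pvariance(estimates)
    return bias_sq, variance


def sample_mean(xs):
    return statistics.fmean(xs)


def shrunk_mean(xs):
    # Halving the estimate lowers variance at the cost of a large bias.
    return 0.5 * statistics.fmean(xs)


bias_a, var_a = simulate(sample_mean)
bias_b, var_b = simulate(shrunk_mean)
print(f"sample mean: bias^2={bias_a:.4f} variance={var_a:.4f}")
print(f"shrunk mean: bias^2={bias_b:.4f} variance={var_b:.4f}")
```

Neither number describes a single fitted model. Both are properties of the procedure averaged over worlds, which is why they can disagree with what we observe for one specific trained model.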

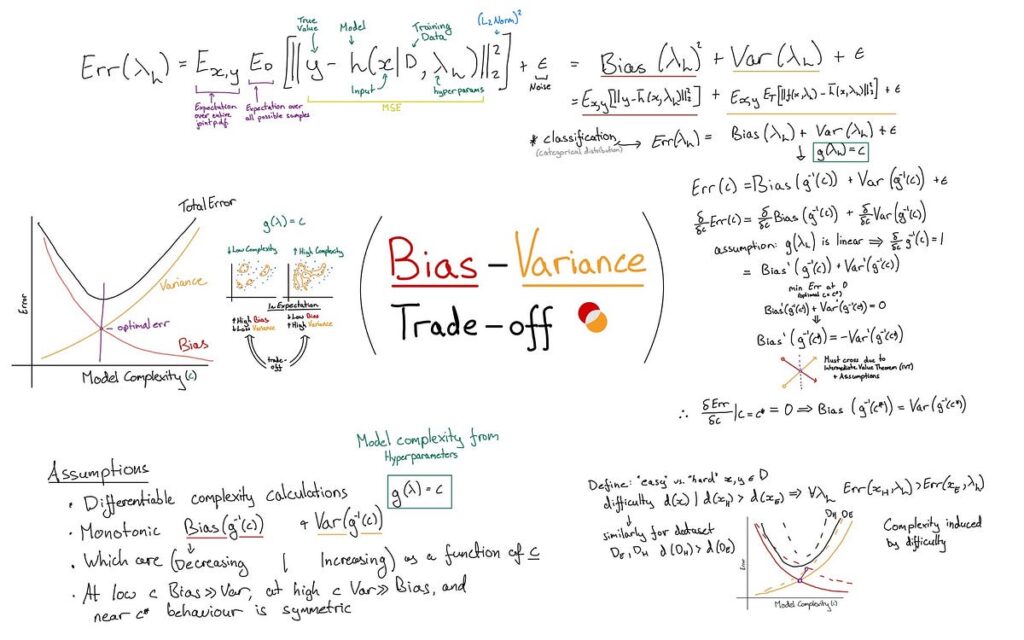

Let’s review some of the assumptions that were key to our bias-variance decomposition:

- At low complexity, the total error is dominated by bias, while at high complexity total error is dominated by variance, with bias ≫ variance at the minimum complexity.

- As a function of complexity, bias is monotonically decreasing and variance is monotonically increasing.

- The complexity function g is differentiable.

It turns out that with sufficiently deep neural networks these first two assumptions are incorrect. And that last assumption may be a convenient fiction to simplify some calculations. We won’t question that one but we’ll be taking a look at the first two.

Let’s briefly review what it means to overfit:

- A model overfits when it fails to distinguish noise (aleatoric uncertainty) from intrinsic variation. This means that a trained model may behave wildly differently given different training data with different noise (i.e. variance).

- We notice a model has overfit when it fails to generalise to an unseen test set. This typically means performance on test data that’s iid with the training data. We may focus on different measures of robustness and so craft a test set which is OOS, stratified, OOD, or adversarial.

We’ve so far assumed that the only way to get truly low bias is if a model is overly complex. And we’ve assumed that this complexity leads to high variance between models trained on different data. We’ve also established that many hyperparameters contribute to complexity including the number of epochs of stochastic gradient descent.

Overparameterisation and memorisation

You may have heard that a large neural network can simply memorise the training data. But what does that mean? Given sufficient parameters the model doesn’t need to learn the relationships between features and outputs. Instead it can store a function which responds perfectly to the features of every training example completely independently. It would be like writing an explicit if statement for every combination of features and simply producing the average output for that combination. Consider our decision boundary dataset where every example is completely separable. That would mean 100% accuracy for everything in the training set.

If a model has sufficient parameters then the gradient descent algorithm will naturally use all of that space to do such memorisation. In general it’s believed that this is much simpler than finding the underlying relationship between the features and the target values. This is considered the case when p ≫ N (the number of trainable parameters is significantly larger than the number of examples).
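The “explicit if statement for every combination of features” picture can be written down as a lookup table. This is only an analogy for what an overparameterised network might be doing, with hypothetical function names and a made-up toy dataset.

```python
from collections import defaultdict


def fit_lookup(X, y):
    """Memorise the training set: store the average target for each exact feature combination."""
    buckets = defaultdict(list)
    for features, target in zip(X, y):
        buckets[tuple(features)].append(target)
    return {key: sum(values) / len(values) for key, values in buckets.items()}


def predict(table, features, default=None):
    """Return the memorised average, or a default for feature combinations never seen."""
    return table.get(tuple(features), default)


# Toy separable data: identical features always carry the same label.
X_train = [(0, 0), (0, 1), (1, 0), (1, 1), (1, 1)]
y_train = [0, 1, 1, 0, 0]

table = fit_lookup(X_train, y_train)
train_acc = sum(round(predict(table, x)) == t for x, t in zip(X_train, y_train)) / len(y_train)
unseen = predict(table, (2, 2))
print(train_acc, unseen)
```

The table is perfect on the training set and has nothing to say about a point it hasn’t seen, which is the failure mode that memorisation is usually assumed to imply.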

But there are 2 situations where a model can learn to generalise despite having memorised training data:

- Having too few parameters leads to weak models. Adding more parameters leads to a seemingly optimal level of complexity. Continuing to add parameters makes the model perform worse as it starts to fit to noise in the training data. Once the number of parameters exceeds the number of training examples the model may start to perform better. Once p ≫ N the model reaches another optimal point.

- Train a model until the training and validation losses begin to diverge. The training loss tends towards 0 as the model memorises the training data but the validation loss blows up and reaches a peak. After some (extended) training time the validation loss starts to decrease.

This is known as the “double descent” phenomenon where more complexity actually leads to better generalisation.

Does double descent require mislabelling?

One general consensus is that label noise is sufficient but not necessary for double descent to occur. For example, the paper Unravelling The Enigma of Double Descent found that overparameterised networks will learn to assign the mislabelled class to points in the training data instead of learning to ignore the noise. However, a model may “isolate” these points and learn general features around them. The paper mainly focuses on the learned features within the hidden states of neural networks and shows that separability of those learned features can make labels noisy even without mislabelling.

The paper Double Descent Demystified describes several necessary conditions for double descent to occur in generalised linear models. These criteria largely focus on variance within the data (as opposed to model variance) which makes it difficult for a model to correctly learn the relationships between predictor and target variables. Any of these conditions can contribute to double descent:

- The presence of small (but non-zero) singular values in the training features.

- That the test set distribution isn’t effectively captured by features which account for the most variance in the training data.

- A lack of variance for a perfectly fit model (i.e. a perfectly fit model seems to have no aleatoric uncertainty).

This paper also captures the double descent phenomenon for a toy problem with this visualisation:

By contrast the paper Understanding Double Descent Requires a Fine-Grained Bias-Variance Decomposition offers a detailed mathematical breakdown of different sources of noise and their impact on variance:

- Sampling: the general idea that fitting a model to different datasets leads to models with different predictions (V_D).

- Optimisation: the effects of parameter initialisation but potentially also the nature of stochastic gradient descent (V_P).

- Label noise: generally mislabelled examples (V_ϵ).

- The potential interactions between the three sources of variance.

The paper goes on to show that some of these variance terms actually contribute to the total error as part of a model’s bias. Additionally, you can condition the expectation calculation first on V_D or V_P and it means you reach different conclusions depending on how you do the calculation. A proper decomposition involves understanding how the total variance comes together from interactions between the three sources of variance. The conclusion is that while label noise exacerbates double descent it’s not necessary.
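Schematically, and using this article’s symbols rather than the paper’s own notation, a decomposition with three sources and their interactions can be written as a sum of main effects plus pairwise and three-way interaction terms. This is a sketch of the general shape under that assumption, not a reproduction of the paper’s result:

```latex
V = V_D + V_P + V_{\epsilon} + V_{DP} + V_{D\epsilon} + V_{P\epsilon} + V_{DP\epsilon}
```

The interaction terms are what make the order of conditioning matter: a term such as V_{DP} cannot be attributed to sampling or to optimisation alone.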

Regularisation and double descent

Another consensus from these papers is that regularisation may prevent double descent. But as we saw in the previous section that doesn’t necessarily mean that the regularised model will generalise better to unseen data. It seems more to be the case that regularisation acts as a floor for the training loss, preventing the model from taking the training loss arbitrarily low. But as we know from the bias-variance trade-off, that could limit complexity and introduce bias to our models.

Reflection

Double descent is an interesting phenomenon that challenges many of the assumptions used throughout this article. We can see that under the right circumstances increasing complexity doesn’t necessarily degrade a model’s ability to generalise.

Should we think of highly complex models as special cases or do they call into question the entire bias-variance trade-off? Personally, I think that the core assumptions hold true in most cases and that highly complex models are just a special case. I think the bias-variance trade-off has other weaknesses but the core assumptions are mostly valid.

The bias-variance trade-off is relatively straightforward when it comes to statistical inference and more typical statistical models. I didn’t go into other machine learning methods like decision trees or support vector machines, but much of what we’ve discussed continues to apply there. But even in these settings we need to consider more factors than how well our model may perform if averaged over all possible worlds, mainly because we’re comparing the performance against future data assumed to be iid with our training set.

Even if our model will only ever see data that looks like our training distribution we can still face large consequences with tail risks. Most machine learning projects need a proper risk assessment to understand the consequences of errors. Instead of evaluating models under iid assumptions we should be constructing validation and test sets which fit into an appropriate risk framework.

Additionally, models which are supposed to have general capabilities need to be evaluated on OOD data. Models which perform critical functions need to be evaluated adversarially. It’s also worth pointing out that the bias-variance trade-off isn’t necessarily valid in the setting of reinforcement learning. Consider the alignment problem in AI safety which considers model performance beyond explicitly stated objectives.

We’ve also seen that in the case of large overparameterised models the standard assumptions about over- and underfitting simply don’t hold. The double descent phenomenon is complex and still poorly understood. Yet it holds an important lesson about trusting the validity of strongly held assumptions.

For those who’ve continued this far I want to make one last connection between the different sections of this article. In the section on inferential statistics I explained that Fisher information describes the amount of information a sample can contain about the distribution the sample was drawn from. In various parts of this article I’ve also mentioned that there are infinitely many ways to draw a decision boundary around sparsely sampled points. There’s an interesting question about whether there’s enough information in a sample to draw conclusions about sparse regions.

In my article on why scaling works I talk about the concept of an inductive prior. This is something introduced by the training process or model architecture we’ve chosen. These inductive priors bias the model into making certain kinds of inferences. For example, regularisation might encourage the model to make smooth rather than jagged boundaries. With a different kind of inductive prior it’s possible for a model to glean more information from a sample than would be possible with weaker priors. For example, there are ways to encourage symmetry, translation invariance, and even detecting repeated patterns. These are normally applied through feature engineering or through architecture decisions like convolutions or the attention mechanism.

I first started putting together the notes for this article over a year ago. I had one experiment where focal loss was vital for getting decent performance from my model. Then I had several experiments in a row where focal loss performed terribly for no apparent reason. I started digging into the bias-variance trade-off which led me down a rabbit hole. Eventually I learned more about double descent and realised that the bias-variance trade-off had a lot more nuance than I’d previously believed. In that time I read and annotated several papers on the topic and all my notes were just collecting digital dust.

Recently I realised that over the years I’ve read a lot of terrible articles on the bias-variance trade-off. The idea I felt was missing is that we are calculating an expectation over “possible worlds”. That insight might not resonate with everyone but it seems vital to me.

I also want to comment on a popular visualisation about bias vs variance which uses archery shots spread around a target. I feel that this visual is misleading because it makes it seem that bias and variance are about individual predictions of a single model. Yet the math behind the bias-variance error decomposition is clearly about performance averaged across possible worlds. I’ve purposely avoided that visualisation for that reason.

I’m not sure how many people will make it all the way through to the end. I put these notes together long before I started writing about AI and felt that I should put them to good use. I also just needed to get the ideas out of my head and written down. So if you’ve reached the end I hope you’ve found my observations insightful.

[1] “German tank problem,” Wikipedia, Nov. 26, 2021. https://en.wikipedia.org/wiki/German_tank_problem

[2] Wikipedia Contributors, “Minimum-variance unbiased estimator,” Wikipedia, Nov. 09, 2019. https://en.wikipedia.org/wiki/Minimum-variance_unbiased_estimator

[3] “Likelihood function,” Wikipedia, Nov. 26, 2020. https://en.wikipedia.org/wiki/Likelihood_function

[4] “Fisher information,” Wikipedia, Nov. 23, 2023. https://en.wikipedia.org/wiki/Fisher_information

[5] Why, “Why is using squared error the standard when absolute error is more relevant to most problems?,” Cross Validated, Jun. 05, 2020. https://stats.stackexchange.com/questions/470626/w (accessed Nov. 26, 2024).

[6] Wikipedia Contributors, “Bias–variance tradeoff,” Wikipedia, Feb. 04, 2020. https://en.wikipedia.org/wiki/Bias%E2%80%93variance_tradeoff

[7] B. Efron, “Prediction, Estimation, and Attribution,” International Statistical Review, vol. 88, no. S1, Dec. 2020, doi: https://doi.org/10.1111/insr.12409.

[8] T. Hastie, R. Tibshirani, and J. H. Friedman, The Elements of Statistical Learning. Springer, 2009.

[9] T. Dzekman, “Medium,” Medium, 2024. https://medium.com/towards-data-science/why-scalin (accessed Nov. 26, 2024).

[10] H. Braiek and F. Khomh, “Machine Learning Robustness: A Primer,” 2024. Available: https://arxiv.org/pdf/2404.00897

[11] O. Wu, W. Zhu, Y. Deng, H. Zhang, and Q. Hou, “A Mathematical Foundation for Robust Machine Learning based on Bias-Variance Trade-off,” arXiv.org, 2021. https://arxiv.org/abs/2106.05522v4 (accessed Nov. 26, 2024).

[12] “bias_variance_decomp: Bias-variance decomposition for classification and regression losses — mlxtend,” rasbt.github.io. https://rasbt.github.io/mlxtend/user_guide/evaluate/bias_variance_decomp

[13] T.-Y. Lin, P. Goyal, R. Girshick, K. He, and P. Dollár, “Focal Loss for Dense Object Detection,” arXiv:1708.02002 [cs], Feb. 2018, Available: https://arxiv.org/abs/1708.02002

[14] Y. Gu, X. Zheng, and T. Aste, “Unraveling the Enigma of Double Descent: An In-depth Analysis through the Lens of Learned Feature Space,” arXiv.org, 2023. https://arxiv.org/abs/2310.13572 (accessed Nov. 26, 2024).

[15] R. Schaeffer et al., “Double Descent Demystified: Identifying, Interpreting & Ablating the Sources of a Deep Learning Puzzle,” arXiv.org, 2023. https://arxiv.org/abs/2303.14151 (accessed Nov. 26, 2024).

[16] B. Adlam and J. Pennington, “Understanding Double Descent Requires a Fine-Grained Bias-Variance Decomposition,” Neural Information Processing Systems, vol. 33, pp. 11022–11032, Jan. 2020.