Adobe entered the modern era of generative AI a full year behind others, and the company continues moving ahead with Firefly at a conservative pace. It’s a strategy you could criticize, or argue is by design. Adobe must push the boundaries of media without breaking the tools its creative customers, like graphic designers and video editors, use every day.

While Adobe first debuted Firefly tools in Photoshop in 2023, it took about a year for them to be any good. Now, it’s upping the ante by going from generating still imagery to motion.

Adobe is moving some of its video generation tools, first teased in April, into private beta. The new options appear powerful, but for Adobe, it’s just as important that these tools are practical. Adobe needs to master the UI of AI.

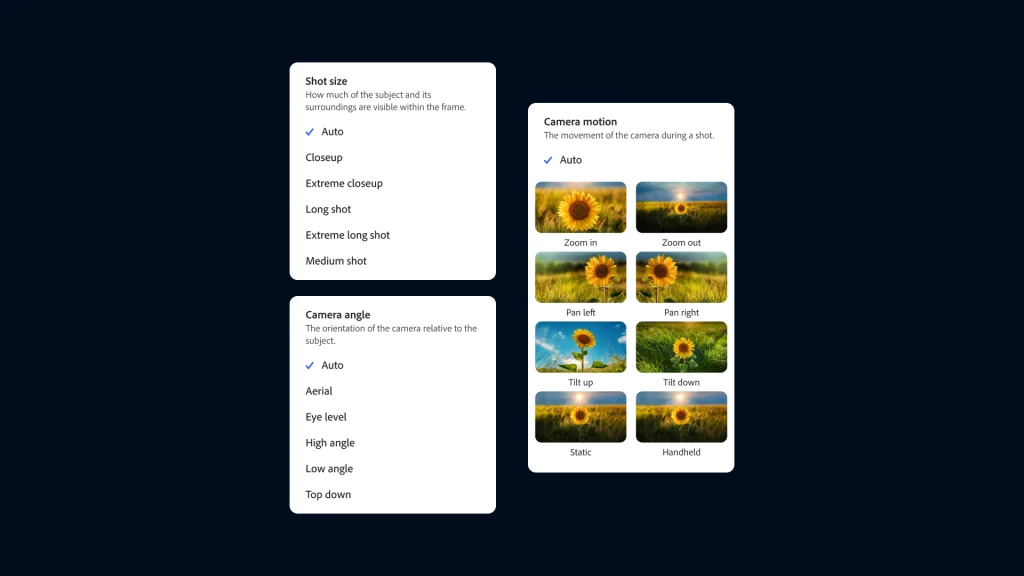

Text to Video is basically Adobe’s answer to OpenAI’s Sora (which is also yet to be released publicly). It lets you type a prompt, like “closeup of a daisy,” to generate video of it. Adobe’s distinguishing feature is cinematographic creative control. A drop-down menu will offer options like camera angles to help guide you in the process.

Another feature, Generative Extend, pushes AI integration a step further, and I think it is Adobe’s best example of how gen AI can sneak into a UI workflow. It allows you to take a clip in Premiere Pro and simply stretch it longer on the timeline, while the system invents frames (and even background audio) to fill the time. You can see in the clip below where an astronaut, in close-up, gets the stretch treatment, which he fills by ever so gently turning his head. Impressed? No. Easy? Yes. Convincing? Absolutely.

In a conversation with Adobe CTO Ely Greenfield, I took the opportunity to probe into Adobe’s psyche. How is it thinking about integrating AI tools? What constitutes success? What defines a useful AI feature? In our 30-minute conversation, Greenfield offered a candid look at the processes and priorities behind Adobe’s integration of gen AI.

This interview has been edited and condensed for clarity.

Fast Company: OK, what’s the stickiest AI feature Adobe has shipped so far?

Ely Greenfield: Generative Fill and Generative Expand in Photoshop are incredibly sticky and valuable. The last number we quoted was over 9 billion images generated. A lot of that is Generative Fill and Expand in Photoshop. Generative Remove in Lightroom is also coming up strong. We’ve seen a huge explosion and growth in that as well.

How well does a generative AI tool have to work to make it into an Adobe product? Does the result need to be acceptable nine out of ten times, for example? Or, like, two out of ten?

It comes down to what the problem is and what the next best alternative is. If someone is looking for fairly common stock, like b-roll, then it better get it right 100% of the time, because even then, you could just use b-roll. But if you have specificity to what you want…getting it right 1/10 times is still a huge savings. It can be a little frustrating in the moment, but if we can give people good content 1/10 times that saves them from going back to reshoot something on deadline, that’s incredibly valuable.

Our goal is 10/10 times. But the bar to get into people’s hands to provide value to them in a deadline crunch is much closer to 1/10 times. I’m tempted to give a number on where we are now, but it varies wildly by use case.

I’ve been a professional producer and editor. I know what it’s like to not have that shot in the eleventh hour and to feel desperate. But I also always expect an Adobe product to work. So explaining that AI might work one out of ten times, and why, seems like a communication challenge for Adobe. It’s a real mess for Google AI that its search results are so often wrong, when the standard results right below them are correct. No wonder you have the public complaining that Google is telling you to put glue on a pizza.

For Google, hallucination is the bane of their existence. For us, it’s what you’re coming to Firefly for. With Google, you get the answer, and there’s such an activation energy: Do I trust this? Now I have to validate it! Is the work worth it, or would [traditional] search have been better?

When we generate, it’s not a question of, can I trust it? It’s just about what’s in front of your eyes. I can make that judgment instantly. If I like it, I can use it. It’s a little more understandable.

The use cases we’re going after are ones where the next best alternative is expensive and painful: I don’t have an option, it’s the night before a deadline, and I can’t create this myself. Those are the use cases we’re going after.

If we can save you 50% of your time, then even if you spend an hour generating, that’s a lot of time you saved over the alternative. We have to make sure we’re talking to the right customers, who say this is going to save them time, versus people playing around, saying it took too long. We want to create real value.

How easy should generative AI be to use? I watch how my son, a 4th grader, is using Canva’s generative AI tools. Because they’re so accessible in the workflow, I notice that he often generates images for things I know already exist in Canva’s own stock library. It feels like a waste of time and energy! Is this something Adobe is thinking about?

We absolutely are working on it. I was reviewing some UIs yesterday showing how some stuff is [being incorporated] into tools. Generally, the idea is to generate a search. For all kinds of reasons, if what you’re working on can be solved with licensable content, why generate? It’s a waste of time, money, CO2. We’re not trying to compete with existing stock out there. We look at where stock isn’t good enough.

For the customer, once we get past the gee-whiz-wow factor, what matters is you get a piece of content. You don’t care where it came from. Hopefully you care how it’s licensed! But at the end of the day, whether it’s manually captured or AI-generated doesn’t matter as long as you get what you want.

With stock, a lot of the time you get close but not quite there, which is why Generative Fill and Erase are so important. You can combine these tools and take stock content that isn’t quite there and change the color of their jacket. The creative process is iteration. Maybe I find stock content, and that gives me the broad strokes. Then I use generative editing…and then maybe [manual] editing where generative doesn’t work.

In your demo from April, you show Generative Fill working in video, letting someone draw a bounding box in a suitcase, and suddenly it’s filled with diamonds. Is that coming to the beta? Because the UI integration looks incredible. But it seems like most of what you’re showing now isn’t Adobe AI integrated with Adobe UI. It’s still mostly just conventional text prompt generation.

It’s not in beta right now. It’ll follow. The way these things develop, you need a strong foundation model. Right now that capability is text-to-video or image. Once we have that foundation model, then we build use cases: features, applications, and specializations on top of those. Generative Extend is the first one because it requires the least amount of work.

Image-to-video is in private beta now. Generative Extend is image-to-video, basically. But it’s the easiest to polish and tack on top of the core foundation. The others will follow, but we don’t have a timeline on them right now.

So you have your core AI intelligence in the foundation model, and then you basically create a UI out of that?

We build an early model, then hand it to teams to fine-tune for use cases. Then we iterate on the foundation model. If we uplevel resolution or framerate, then we have to go back to the drawing board with some new features.

You’ve talked about not just letting someone create video, but giving them granular control over the camera type and aesthetic. Right now, that’s all based upon text descriptions. But I assume you’re looking into building a UI for digital directing, too? Like we see in After Effects?

You can see some of the evolution of that [in our model], and there are two pieces to it.

First, we started with basic wide angle and close-up, then you could specify depth of field, aperture, and focal length. We got more specific about what it can understand. It doesn’t understand codecs, or a Canon vs. Nikon, but it does understand camera motion types. Lens type. Camera positioning.

The second piece is absolutely, how do you get into more interactive multimodal input instead of text input?

That starts with an [automatic] prompt from a drop-down…so you don’t have to figure out what to type. Then to storyboarders. There’s a specified language of camera angle within storyboards: [We might] give you tools to draw and specify camera angles.

But for now, it’s about foundational technology and getting it into someone’s hands.