Clustering is a must-have skill set for any data scientist because of its utility and flexibility in real-world problems. This article is an overview of clustering and the different types of clustering algorithms.

Clustering is a popular unsupervised learning technique designed to group objects or observations together based on their similarities. Clustering has many useful applications, such as market segmentation, recommendation systems, exploratory analysis, and more.

While clustering is a well-known and widely used technique in the field of data science, some may not be aware of the different types of clustering algorithms. Although there are only a few types, it is important to understand these algorithms and how they work to get the best results for your use case.

Centroid-based clustering is what most people think of when it comes to clustering. It is the “traditional” way to cluster data: a defined number of centroids (centers) is used to group data points based on their distance to each centroid. Each centroid eventually becomes the mean of its assigned data points. While centroid-based clustering is powerful, it is not robust against outliers, because every outlier must be assigned to a cluster.
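To see why outliers are a problem, here is a small sketch using scikit-learn’s KMeans, which is covered in detail below. The data values are made up purely for illustration. K-Means has no “noise” label, so the distant point is still placed in a cluster. Because that cluster’s centroid is the mean of its members, the outlier drags it away from the tight group near (8, 8).

```python
import numpy as np
from sklearn.cluster import KMeans

# Two tight groups plus one distant point (illustrative values only)
X = np.array([[1.0, 1.0], [1.5, 1.0], [1.0, 1.5],
              [8.0, 8.0], [8.5, 8.0], [8.0, 8.5],
              [14.0, 14.0]])  # the outlier

km = KMeans(n_clusters=2, n_init=10, random_state=0).fit(X)

# Every point, including the outlier, receives a cluster label (0 or 1)
print(km.labels_)

# The outlier's cluster centroid equals the mean of all its members,
# so it is pulled away from the tight group around (8.2, 8.2)
outlier_cluster = km.labels_[-1]
print(km.cluster_centers_[outlier_cluster])
print(X[km.labels_ == outlier_cluster].mean(axis=0))
```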

K-Means

K-Means is the most widely used clustering algorithm, and it is likely the first one you will learn as a data scientist. As explained above, the objective is to minimize the sum of squared distances between each data point and the centroid of its cluster, which determines the group each data point should belong to. Here’s how it works:

- A defined number of centroids are randomly dropped into the vector space of the unlabeled data (initialization).

- Each data point measures its distance to every centroid (usually using Euclidean distance) and assigns itself to the closest one.

- The centroids relocate to the mean of their assigned data points.

- Steps 2–3 repeat until the cluster assignments stop changing. The result is a locally ‘optimal’ set of clusters, which depends on where the centroids started.
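The steps above can be sketched from scratch in NumPy. This is a minimal illustration of the classic Lloyd’s algorithm with random initialization, not scikit-learn’s implementation. The function name kmeans_lloyd and its parameters are hypothetical, and the sample data matches the scikit-learn example that follows. Depending on the seed, this plain random initialization can settle on a poor split even for this tiny dataset. For instance, starting from the points (1, 2) and (1, 4) converges to a split by height rather than the two obvious groups.

```python
import numpy as np

def kmeans_lloyd(X, k, max_iter=100, seed=0):
    """Plain K-Means (Lloyd's algorithm) with random initialization."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    # Step 1 (initialization): use k distinct, randomly chosen data points as centroids
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    labels = None
    for _ in range(max_iter):
        # Step 2: distance from every point to every centroid; assign to the nearest
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        new_labels = dists.argmin(axis=1)
        # Step 4: stop once no point changes cluster (convergence)
        if labels is not None and np.array_equal(new_labels, labels):
            break
        labels = new_labels
        # Step 3: move each centroid to the mean of its assigned points
        for j in range(k):
            members = X[labels == j]
            if len(members) > 0:  # leave an empty cluster's centroid in place
                centroids[j] = members.mean(axis=0)
    return labels, centroids

# Sample data (same values as the scikit-learn example)
X = np.array([[1, 2], [1, 4], [1, 0],
              [10, 2], [10, 4], [10, 0]])
labels, centroids = kmeans_lloyd(X, k=2, seed=0)
print(labels)
print(centroids)
```

Running it with a few different seeds is an easy way to see how much the result can depend on the starting centroids.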

```python
from sklearn.cluster import KMeans
import numpy as np

# sample data
X = np.array([[1, 2], [1, 4], [1, 0],
              [10, 2], [10, 4], [10, 0]])

# create k-means model; init="random" drops the initial centroids at random,
# as described above (scikit-learn's default init is "k-means++")
kmeans = KMeans(n_clusters=2, init="random", random_state=0, n_init="auto").fit(X)

# print the results, use the model to predict, and print the centers
print(kmeans.labels_)
print(kmeans.predict([[0, 0], [12, 3]]))
print(kmeans.cluster_centers_)
```

K-Means++

K-Means++ is an improvement to the initialization step of K-Means. Because the centroids are dropped in randomly, more than one centroid may be initialized in the same cluster, leading to poor results.

K-Means++ addresses this by choosing the first centroid uniformly at random from the data points. Each subsequent centroid is then picked from the data with a probability proportional to its squared distance from the nearest centroid already chosen, so points far from the existing centroids are much more likely to be selected. The goal of K-Means++ is to spread the initial centroids as far apart from one another as possible. This tends to produce high-quality clusters that are distinct and well-defined.
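The seeding rule can be sketched in a few lines of NumPy. This is a minimal illustration of the basic K-Means++ procedure. The function name kmeans_plus_plus_init is hypothetical. scikit-learn’s built-in init="k-means++" uses a greedy variant that evaluates several candidates per step, so its picks will not match this sketch exactly. The resulting array can be passed to KMeans as an explicit init.

```python
import numpy as np
from sklearn.cluster import KMeans

def kmeans_plus_plus_init(X, k, seed=0):
    """Basic k-means++ seeding: returns k initial centroids chosen from X."""
    rng = np.random.default_rng(seed)
    # First centroid: a data point chosen uniformly at random
    centroids = [X[rng.integers(len(X))]]
    for _ in range(1, k):
        chosen = np.array(centroids)
        # Squared distance from each point to its nearest already-chosen centroid
        d2 = ((X[:, None, :] - chosen[None, :, :]) ** 2).sum(axis=2).min(axis=1)
        # Far-away points are proportionally more likely to be picked next;
        # already-chosen points have d2 == 0, so they are never picked again
        probs = d2 / d2.sum()
        centroids.append(X[rng.choice(len(X), p=probs)])
    return np.array(centroids, dtype=float)

X = np.array([[1, 2], [1, 4], [1, 0],
              [10, 2], [10, 4], [10, 0]])
init = kmeans_plus_plus_init(X, k=2)
print(init)

# Use the seeded centroids as an explicit starting point (one run only)
kmeans = KMeans(n_clusters=2, init=init, n_init=1).fit(X)
print(kmeans.labels_)
```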

```python
from sklearn.cluster import KMeans
import numpy as np

# sample data
X = np.array([[1, 2], [1, 4], [1, 0],
              [10, 2], [10, 4], [10, 0]])

# create k-means model with k-means++ initialization
# (init="k-means++" is also scikit-learn's default)
kmeans = KMeans(n_clusters=2, init="k-means++", random_state=0, n_init="auto").fit(X)

# print the results, use the model to predict, and print the centers
print(kmeans.labels_)
print(kmeans.predict([[0, 0], [12, 3]]))
print(kmeans.cluster_centers_)
```

Density-based algorithms are also a popular form of clustering. However, instead of measuring distances from randomly placed centroids, they create clusters by identifying high-density areas within the data. Density-based algorithms do not require a predefined number of clusters, which removes one parameter to tune, although they still need density settings such as a neighborhood radius and a minimum number of neighbors.

While centroid-based algorithms work best with roughly spherical clusters, density-based algorithms can find clusters of arbitrary shape and are therefore more flexible. They also do not force outliers into clusters, which makes them robust to outliers. However, they can struggle with data of varying densities and with high-dimensional data.
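The shape difference is easy to see on scikit-learn’s make_moons toy dataset, which contains two interleaving half-circles. This sketch compares K-Means and DBSCAN using the adjusted Rand index, where 1.0 means a perfect match with the true moons. The eps and min_samples values are assumptions chosen for this particular noise level, not general-purpose defaults.

```python
from sklearn.cluster import DBSCAN, KMeans
from sklearn.datasets import make_moons
from sklearn.metrics import adjusted_rand_score

# Two interleaving half-circles: clearly non-spherical clusters
X, y_true = make_moons(n_samples=200, noise=0.05, random_state=0)

# K-Means can only split the plane with straight boundaries between centroids
km_labels = KMeans(n_clusters=2, n_init=10, random_state=0).fit_predict(X)

# DBSCAN follows dense regions, so each moon can become its own cluster
db_labels = DBSCAN(eps=0.2, min_samples=5).fit_predict(X)

print("K-Means ARI:", adjusted_rand_score(y_true, km_labels))
print("DBSCAN ARI:", adjusted_rand_score(y_true, db_labels))
```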

DBSCAN

DBSCAN is the most popular density-based algorithm. DBSCAN works as follows:

- DBSCAN picks an arbitrary unvisited data point and checks whether it has enough neighbors within a specified radius.

- If the point has enough neighbors (a core point), it is marked as part of a cluster.

- DBSCAN recursively checks whether the neighbors also have enough neighbors within the radius, adding them to the cluster, until all points in the cluster have been visited. Neighbors that lack enough neighbors of their own still join the cluster as border points, but the cluster is not expanded through them.

- Steps 1–3 repeat until the remaining data points do not have enough neighbors within the radius.

- The remaining data points are marked as outliers (noise).
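The procedure above can be sketched in plain NumPy. This is a minimal, quadratic-time illustration rather than scikit-learn’s implementation. The function name dbscan_sketch is hypothetical. As in scikit-learn, the neighbor count includes the point itself, and -1 marks noise. Points are visited in index order instead of at random, which keeps the result deterministic.

```python
import numpy as np

def dbscan_sketch(X, eps, min_samples):
    """Minimal DBSCAN: returns one label per point, -1 for noise."""
    X = np.asarray(X, dtype=float)
    n = len(X)
    labels = np.full(n, -1)            # every point starts out as noise
    visited = np.zeros(n, dtype=bool)
    # Neighbors within eps (each point counts as its own neighbor)
    dists = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    neighbors = [np.flatnonzero(dists[i] <= eps) for i in range(n)]
    cluster_id = 0
    for i in range(n):
        if visited[i]:
            continue
        visited[i] = True
        if len(neighbors[i]) < min_samples:
            continue  # not a core point; stays noise unless a cluster reaches it
        # i is a core point: start a new cluster and expand it
        labels[i] = cluster_id
        queue = list(neighbors[i])
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster_id   # core or border point joins the cluster
            if not visited[j]:
                visited[j] = True
                if len(neighbors[j]) >= min_samples:
                    queue.extend(neighbors[j])  # only core points keep expanding
        cluster_id += 1
    return labels

X = np.array([[1, 2], [2, 2], [2, 3],
              [8, 7], [8, 8], [25, 80]])
labels = dbscan_sketch(X, eps=3, min_samples=2)
print(labels)  # [ 0  0  0  1  1 -1]
```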

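```python
from sklearn.cluster import DBSCAN
import numpy as np

# sample data
X = np.array([[1, 2], [2, 2], [2, 3],
              [8, 7], [8, 8], [25, 80]])

# create model: eps is the neighborhood radius, min_samples is the number of
# neighbors (including the point itself) a point needs to be a core point
clustering = DBSCAN(eps=3, min_samples=2).fit(X)

# print results: -1 marks outliers (noise)
print(clustering.labels_)  # [ 0  0  0  1  1 -1]
```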

Next, we have hierarchical clustering. This (agglomerative) method starts by computing a distance matrix from the raw data. Data points are then merged one pair at a time, always joining the closest pair of points or clusters, until everything forms one giant cluster. The resulting hierarchy of merges is best visualized with a dendrogram (see below). Because the merging would otherwise continue until every data point is linked together, a cut-off point is needed to identify the final clusters.

By using this method, the data scientist can build a robust model by identifying outliers and excluding them from the other clusters. This method works well on hierarchical data, such as taxonomies. The number of clusters depends on where the tree is cut (in the example below, the threshold and depth parameters passed to fcluster) and can be anywhere from 1 to n, where n is the number of data points.

```python
import numpy as np
import matplotlib.pyplot as plt
from scipy.cluster.hierarchy import dendrogram, linkage, fcluster

# sample data (any 2-D array of observations can be used here)
data = np.array([[1, 2], [1, 4], [1, 0],
                 [10, 2], [10, 4], [10, 0]])

# compute the linkage matrix (the full history of merges)
linkage_data = linkage(data, method='ward', metric='euclidean', optimal_ordering=True)

# view dendrogram
dendrogram(linkage_data)
plt.title('Hierarchical Clustering Dendrogram')
plt.xlabel('Data point')
plt.ylabel('Distance')
plt.show()

# cut the tree into flat clusters: threshold 2.5 on the inconsistency
# coefficient, computed over links up to depth 5 below each merge
clusters = fcluster(linkage_data, 2.5, criterion='inconsistent', depth=5)
print(clusters)
```

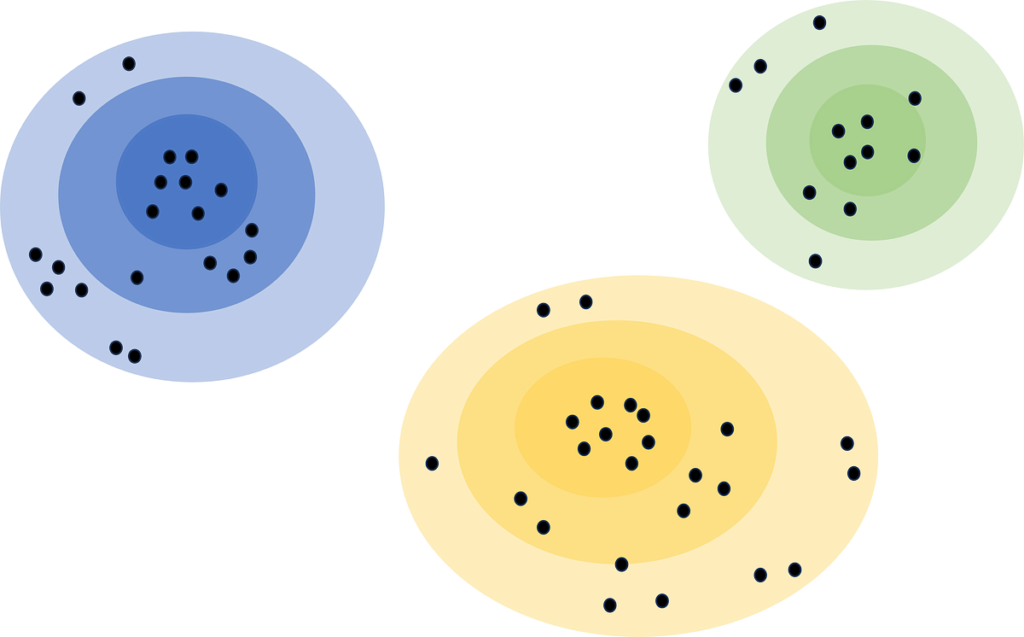
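The cut-off idea can be made concrete with SciPy. This sketch assumes a small illustrative dataset (any 2-D array works). It prints the linkage matrix, where each row records one merge as [cluster a, cluster b, merge distance, size of the new cluster]. It then cuts the tree in two ways: at a fixed merge height with criterion='distance', and by requesting a number of clusters with criterion='maxclust'. Both are alternatives to the 'inconsistent' criterion used above. The thresholds 5 and 2 are chosen for this toy data only.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage

# Illustrative data: two well-separated groups of three points
data = np.array([[1, 2], [1, 4], [1, 0],
                 [10, 2], [10, 4], [10, 0]])

Z = linkage(data, method='ward')

# One row per merge: the last row is the final merge into one giant cluster
print(Z)

# Cut at a fixed height: no merges above distance 5 are kept
flat_by_height = fcluster(Z, t=5, criterion='distance')
print(flat_by_height)

# Or ask for at most 2 flat clusters
flat_by_count = fcluster(Z, t=2, criterion='maxclust')
print(flat_by_count)
```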

Finally, distribution-based clustering considers a metric other than distance and density: probability. Distribution-based clustering assumes that the data is made up of probability distributions, such as normal (Gaussian) distributions. The algorithm creates ‘bands’ that represent confidence intervals. The further a data point is from the center of a cluster, the less confident we are that it belongs to that cluster.
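A common way to try this in Python is a Gaussian mixture model. The sketch below uses scikit-learn’s GaussianMixture, which is one distribution-based method rather than the only one. The data is synthetic and assumed purely for illustration. predict_proba returns soft memberships, and the sketch shows confidence dropping as a point moves from one blob’s center toward the boundary between the two blobs.

```python
import numpy as np
from sklearn.mixture import GaussianMixture

rng = np.random.default_rng(0)
# Illustrative data: two normally distributed blobs centered at (0, 0) and (6, 6)
X = np.vstack([rng.normal(loc=0.0, scale=1.0, size=(200, 2)),
               rng.normal(loc=6.0, scale=1.0, size=(200, 2))])

gmm = GaussianMixture(n_components=2, random_state=0).fit(X)

# Soft assignments: probability of each point belonging to each component
points = np.array([[0.0, 0.0],   # at the center of one blob
                   [3.0, 3.0]])  # halfway between the blobs
probs = gmm.predict_proba(points)
print(probs.round(3))
```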

Distribution-based clustering can be very difficult to implement because of the assumptions it makes. It usually is not recommended unless rigorous analysis has been done to confirm its results. For example, if you use it to identify customer segments in a marketing dataset, you should confirm that those segments actually follow the assumed distribution. It can also be a great method for exploratory analysis, showing not only what the centers of clusters consist of but also their edges and outliers.
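One possible sanity check, among others, is to compare models with different numbers of components using the Bayesian Information Criterion, where lower is better. Another is to inspect the fitted log-density of individual points, because very low values flag edges and outliers. The sketch below assumes synthetic Gaussian data and scikit-learn’s GaussianMixture.bic and score_samples methods. On real data, a clear BIC minimum is supporting evidence, not proof, that the distributional assumption holds.

```python
import numpy as np
from sklearn.mixture import GaussianMixture

rng = np.random.default_rng(0)
# Illustrative data: two Gaussian blobs centered at (0, 0) and (6, 6)
X = np.vstack([rng.normal(0.0, 1.0, size=(200, 2)),
               rng.normal(6.0, 1.0, size=(200, 2))])

# Fit mixtures with 1-5 components and compare their BIC (lower is better)
bics = {k: GaussianMixture(n_components=k, random_state=0).fit(X).bic(X)
        for k in range(1, 6)}
best_k = min(bics, key=bics.get)
print("BIC per k:", bics)
print("best k:", best_k)

# Log-density under the chosen mixture: very low values suggest edge points/outliers
gmm = GaussianMixture(n_components=best_k, random_state=0).fit(X)
scores = gmm.score_samples(np.array([[0.0, 0.0],      # near a cluster center
                                     [20.0, -20.0]]))  # far from all data
print(scores)
```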

Clustering is an unsupervised machine learning technique with a growing range of applications in many fields. It can be used to support data analysis, segmentation projects, recommendation systems, and more. Above, we have explored how the main types of clustering algorithms work, their pros and cons, code samples, and even some use cases. I would consider experience with clustering algorithms a must-have for data scientists because of their utility and flexibility.

I hope you enjoyed my article! Please feel free to comment, ask questions, or request other topics.