In machine learning, more data usually leads to better results. But labeling data can be costly and time-consuming. What if we could use the large amounts of unlabeled data that are usually easy to get? This is where pseudo-labeling comes in handy.

TL;DR: I conducted a case study on the MNIST dataset and boosted my model’s accuracy from 90 % to 95 % by applying iterative, confidence-based pseudo-labeling. This article covers the details of what pseudo-labeling is, along with practical tips and insights from my experiments.

Pseudo-labeling is a type of semi-supervised learning. It bridges the gap between supervised learning (where all data is labeled) and unsupervised learning (where no data is labeled).

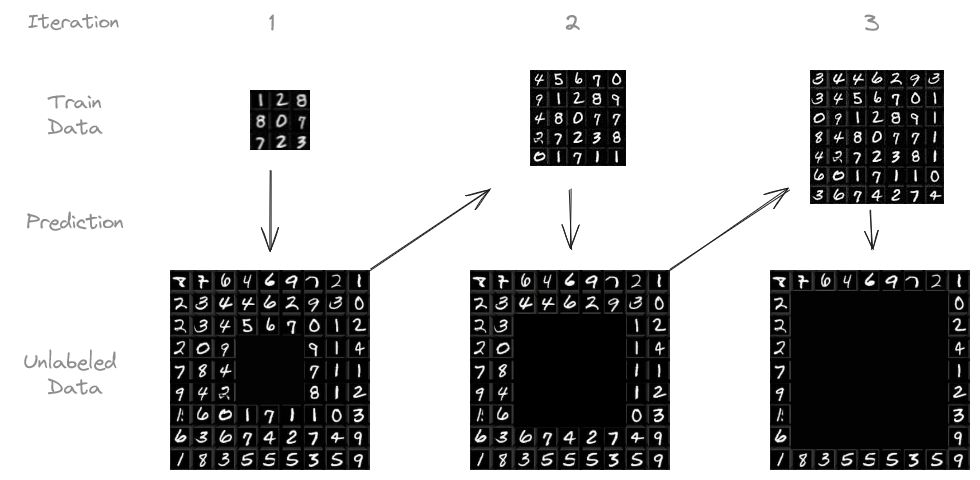

The exact procedure I followed goes as follows:

- We start with a small amount of labeled data and train our model on it.

- The model makes predictions on the unlabeled data.

- We pick the predictions the model is most confident about (e.g., above 95 % confidence) and treat them as if they were actual labels, hoping that they’re reliable enough.

- We add this “pseudo-labeled” data to our training set and retrain the model.

- We can repeat this process several times, letting the model learn from the growing pool of pseudo-labeled data.

While this approach may introduce some incorrect labels, the benefit comes from the significantly increased amount of training data.
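
The selection step in the procedure above can be sketched in a few lines of plain Python. This is a minimal illustration, not the code from my experiments: the function name `select_confident` and the list-of-lists input format are assumptions made for the example.

```python
from typing import List, Sequence, Tuple


def select_confident(
    probs: Sequence[Sequence[float]], threshold: float = 0.95
) -> List[Tuple[int, int]]:
    """Return (sample_index, predicted_class) pairs for confident predictions.

    `probs[i]` holds the predicted class probabilities for unlabeled sample i.
    A sample is selected when its highest probability is above `threshold`.
    """
    selected = []
    for i, row in enumerate(probs):
        row = list(row)
        confidence = max(row)
        if confidence > threshold:
            # The index of the highest probability becomes the pseudo-label.
            selected.append((i, row.index(confidence)))
    return selected


# Hypothetical model outputs for three unlabeled samples and three classes.
example_probs = [
    [0.97, 0.02, 0.01],  # confident about class 0
    [0.40, 0.35, 0.25],  # uncertain, stays unlabeled
    [0.01, 0.03, 0.96],  # confident about class 2
]
print(select_confident(example_probs))
```

Only the first and third samples would receive pseudo-labels here; the second stays in the unlabeled pool for a later iteration.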

The idea of a model learning from its own predictions might raise some eyebrows. After all, aren’t we trying to create something from nothing, relying on an “echo chamber” where the model simply reinforces its own initial biases and errors?

This concern is valid. It may remind you of the legendary Baron Münchhausen, who famously claimed to have pulled himself and his horse out of a swamp by his own hair, a physical impossibility. Similarly, if a model relies solely on its own potentially flawed predictions, it risks getting stuck in a loop of self-reinforcement, much like people trapped in echo chambers who only hear their own beliefs reflected back at them.

So, can pseudo-labeling really be effective without falling into this trap?

The answer is yes. While the story of Baron Münchhausen is obviously a fairytale, you may instead imagine a blacksmith progressing through the ages. He starts with basic stone tools (the initial labeled data). Using these, he forges crude copper tools (pseudo-labels) from raw ore (unlabeled data). These copper tools, while still rudimentary, allow him to work on previously unfeasible tasks, eventually leading to the creation of tools made of bronze, iron, and so on. This iterative process is crucial: You cannot forge steel swords using a stone hammer.

Just like the blacksmith, in machine learning, we can achieve a similar progression by:

- Rigorous thresholds: The model’s out-of-sample accuracy is roughly bounded by the share of correct training labels. If 10 % of labels are wrong, the model’s accuracy won’t significantly exceed 90 %. It is therefore important to allow as few wrong labels as possible.

- Measurable feedback: Constantly evaluating the model’s performance on a separate test set acts as a reality check, ensuring we’re making actual progress, not just reinforcing existing errors.

- Human-in-the-loop: Incorporating human feedback in the form of manual review of pseudo-labels or manual labeling of low-confidence data can provide valuable course correction.
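
To make the point about thresholds concrete, here is a small back-of-the-envelope calculation of the share of correct training labels when manual labels and pseudo-labels are mixed. The helper `share_of_correct_labels` and the numbers are hypothetical, and the sketch assumes that manual labels are always correct.

```python
def share_of_correct_labels(
    n_manual: int, n_pseudo: int, pseudo_precision: float
) -> float:
    """Fraction of correct labels in a mixed training set.

    Assumes every manual label is correct and that a fraction
    `pseudo_precision` of the pseudo-labels is correct.
    """
    total = n_manual + n_pseudo
    if total == 0:
        raise ValueError("training set is empty")
    return (n_manual + pseudo_precision * n_pseudo) / total


# Hypothetical scenario: 1,000 manual labels plus 9,000 pseudo-labels.
for precision in (0.90, 0.95, 0.99):
    share = share_of_correct_labels(1_000, 9_000, precision)
    print(f"pseudo-label precision {precision:.0%} -> {share:.1%} correct labels")
```

Because pseudo-labels quickly outnumber manual ones, the share of correct labels ends up close to the pseudo-label precision, which is why a strict threshold matters.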

Pseudo-labeling, when done right, can be a powerful tool to make the most of small labeled datasets, as we’ll see in the following case study.

I conducted my experiments on the MNIST dataset, a classic collection of 28 by 28 pixel images of handwritten digits, widely used for benchmarking machine learning models. It consists of 60,000 training images and 10,000 test images. The goal is to predict, based on the 28 by 28 pixels, which digit is written.

I trained a simple CNN on an initial set of 1,000 labeled images, leaving 59,000 unlabeled. I then used the trained model to predict the labels for the unlabeled images. Predictions with confidence above a certain threshold (e.g., 95 %) were added to the training set, along with their predicted labels. The model was then retrained on this expanded dataset. This process was repeated iteratively, up to ten times or until there was no more unlabeled data.
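
The loop itself does not depend on the model. The sketch below shows its structure with a toy nearest-centroid classifier on one-dimensional inputs standing in for the CNN, so it runs without any deep learning library. All names (`fit_centroids`, `pseudo_label_loop`) are hypothetical, and the early exit when no prediction passes the threshold is an addition for the sketch, not something described above.

```python
import math
from typing import Callable, List, Sequence, Tuple

Model = Callable[[float], List[float]]  # maps a sample to class probabilities


def fit_centroids(xs: Sequence[float], ys: Sequence[int], n_classes: int) -> Model:
    """Toy stand-in for the CNN: a nearest-centroid classifier on 1-D inputs.

    Assumes every class has at least one training sample.
    """
    centroids = []
    for c in range(n_classes):
        members = [x for x, y in zip(xs, ys) if y == c]
        centroids.append(sum(members) / len(members))

    def predict_proba(x: float) -> List[float]:
        # Softmax over negative distances to the class centroids.
        scores = [math.exp(-abs(x - m)) for m in centroids]
        total = sum(scores)
        return [s / total for s in scores]

    return predict_proba


def pseudo_label_loop(
    xs: Sequence[float],
    ys: Sequence[int],
    unlabeled: Sequence[float],
    n_classes: int,
    threshold: float = 0.95,
    max_iters: int = 10,
) -> Tuple[Model, List[float], List[int], List[float]]:
    """Iteratively move confident predictions from the pool into the training set."""
    train_x, train_y, pool = list(xs), list(ys), list(unlabeled)
    model = fit_centroids(train_x, train_y, n_classes)
    for _ in range(max_iters):
        if not pool:
            break  # no unlabeled data left
        remaining, added = [], 0
        for x in pool:
            probs = model(x)
            confidence = max(probs)
            if confidence > threshold:
                train_x.append(x)
                train_y.append(probs.index(confidence))  # pseudo-label
                added += 1
            else:
                remaining.append(x)
        pool = remaining
        if added == 0:
            break  # nothing passed the threshold, so retraining changes nothing
        model = fit_centroids(train_x, train_y, n_classes)  # retrain on expanded set
    return model, train_x, train_y, pool


# Two labeled points and five unlabeled ones; 5.2 lies between the classes.
model, train_x, train_y, pool = pseudo_label_loop(
    [0.0, 10.0], [0, 1], [0.5, 1.0, 9.0, 9.5, 5.2], n_classes=2
)
print(len(train_x), "training samples,", len(pool), "still unlabeled")
```

In this toy run, the four clear-cut points are pseudo-labeled in the first iteration, while the ambiguous point never passes the threshold and remains in the pool.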

This experiment was repeated with different numbers of initially labeled images and different confidence thresholds.

Results

The following table summarizes the results of my experiments, comparing the performance of pseudo-labeling to training on the full labeled dataset.

Even with a small initial labeled dataset, pseudo-labeling may produce remarkable results, increasing the accuracy by 4.87 %pt. for 1,000 initial labeled samples. When using only 100 initial samples, this effect is even stronger. However, it would’ve been wise to manually label more than 100 samples.

Interestingly, the final test accuracy of the experiment with 100 initial training samples exceeded the share of correct training labels.

Looking at the above graphs, it becomes apparent that, in general, higher thresholds lead to better results, as long as at least some predictions exceed the threshold. In future experiments, one might try to vary the threshold with each iteration.
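
One simple way to vary the threshold per iteration would be a linear schedule. The sketch below is purely illustrative: I have not tested such a schedule, and the function name `linear_threshold` as well as the start and end values are assumptions.

```python
def linear_threshold(
    iteration: int, n_iters: int, start: float = 0.99, end: float = 0.90
) -> float:
    """Interpolate the confidence threshold linearly from `start` to `end`.

    `iteration` is zero-based, so iteration 0 uses `start` and the last
    iteration (n_iters - 1) uses `end`.
    """
    if n_iters < 1:
        raise ValueError("n_iters must be at least 1")
    if not 0 <= iteration < n_iters:
        raise ValueError("iteration must be in [0, n_iters)")
    if n_iters == 1:
        return start
    fraction = iteration / (n_iters - 1)
    return start + fraction * (end - start)


# Hypothetical schedule over ten iterations.
print([round(linear_threshold(i, 10), 3) for i in range(10)])
```

Swapping `start` and `end` gives a schedule that becomes stricter instead of looser; which direction helps, if either, would have to be checked against the test accuracy.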

Furthermore, the accuracy improves even in the later iterations, indicating that the iterative nature provides a true benefit.

- Pseudo-labeling is best applied when unlabeled data is plentiful but labeling is expensive.

- Monitor the test accuracy: It’s important to keep an eye on the model’s performance on a separate test dataset throughout the iterations.

- Manual labeling can still be helpful: If you have the resources, focus on manually labeling the low-confidence data. However, humans aren’t perfect either, and labeling of high-confidence data may be delegated to the model in good conscience.

- Keep track of which labels are AI-generated. If more manually labeled data becomes available later on, you’ll likely want to discard the pseudo-labels and repeat this procedure, increasing the pseudo-label accuracy.

- Be careful when interpreting the results: When I first did this experiment a few years ago, I focused on the accuracy on the remaining unlabeled training data. This accuracy falls with more iterations! However, this is likely because the remaining data is harder to predict: the model was never confident about it in earlier iterations. I should have focused on the test accuracy, which actually improves with more iterations.
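
Keeping track of label provenance can be as simple as storing the source next to each label. The following is a minimal sketch with hypothetical names (`LabeledSample`, `drop_pseudo_labels`), not the data structure used in my repository.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class LabeledSample:
    sample_id: int
    label: int
    source: str  # "manual" or "pseudo"
    confidence: float = 1.0  # model confidence; 1.0 for manual labels by convention


def drop_pseudo_labels(samples: List[LabeledSample]) -> List[LabeledSample]:
    """Keep only manually labeled samples, e.g. before restarting the procedure."""
    return [s for s in samples if s.source == "manual"]


training_set = [
    LabeledSample(sample_id=0, label=7, source="manual"),
    LabeledSample(sample_id=1, label=3, source="pseudo", confidence=0.97),
    LabeledSample(sample_id=2, label=1, source="manual"),
]
print([s.sample_id for s in drop_pseudo_labels(training_set)])
```

With the source recorded, discarding the pseudo-labels once more manual labels arrive is a one-line filter, and the stored confidence can help decide which samples to review by hand first.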

The repository containing the experiment’s code can be found here.

Related paper: Iterative Pseudo-Labeling with Deep Feature Annotation and Confidence-Based Sampling