A step-by-step guide to training a BlazeFace model, from the Python training pipeline to the JavaScript demo through model conversion.

Thanks to libraries such as YOLO by Ultralytics, it is fairly easy today to build robust object detection models with as little as a few lines of code. Unfortunately, these solutions are not yet fast enough to work in a web browser on a real-time video stream at 30 frames per second (which is usually considered the real-time limit for video applications) on any device. More often than not, they will run at less than 10 fps on an average mobile device.

The most famous real-time object detection solution for the web browser is Google’s MediaPipe. This is a really convenient and versatile solution, as it can work on many devices and platforms easily. But what if you want to make your own solution?

In this post, we propose to build our own lightweight, fast and robust object detection model, based on the BlazeFace model, that runs at more than 30 fps on almost any device. All the code used for this is available on my GitHub, in the blazeface folder.

The BlazeFace model, proposed by Google and originally used in MediaPipe for face detection, is really small and fast, while being robust enough for easy object detection tasks such as face detection. Unfortunately, to my knowledge, no training pipeline for this model is available online on GitHub; all I could find is this inference-only model architecture. Through this post, we will train our own BlazeFace model with a fully working pipeline and use it in the browser with working JavaScript code.

More specifically, we will go through the following steps:

- Training the model using PyTorch

- Converting the PyTorch model into a TFLite model

- Running the object detection in the browser thanks to JavaScript and TensorFlow.js

Let’s get started with the model training.

As usual when training a model, there are a few typical steps in a training pipeline:

- Preprocessing the data: we will use a freely available Kaggle dataset for simplicity, but any dataset with the right label format would work

- Building the model: we will reuse the architecture proposed in the original paper and the inference-only GitHub code

- Training and evaluating the model: we will use a simple Multibox loss as the cost function to minimize

Let’s go through these steps together.

Data Preprocessing

We are going to use a subset of the Open Images Dataset V7, proposed by Google. This dataset is made of about 9 million images with many annotations (including bounding boxes, segmentation masks, and many others). The dataset itself is quite large and contains many types of images.

For our specific use case, I decided to select images in the validation set fulfilling two specific conditions:

- Containing human face bounding box labels

- Having a permissive license for such a use case, more specifically the CC BY 2.0 license

The script to download and build the dataset under these strict conditions is provided in the GitHub repository, so that anyone can reproduce it. The dataset downloaded with this script contains labels in the YOLO format (meaning box center, width and height). In the end, the downloaded dataset is made of about 3k images and 8k faces, which I have split into train and validation sets with an 80%-20% ratio.

From this dataset, typical preprocessing is required before being able to train a model. The data preprocessing code I used is the following:

As we can see, the preprocessing is made of the following steps:

- It loads images and labels

- It converts labels from YOLO format (center position, width, height) to box corner format (top-left corner position, bottom-right corner position)

- It resizes images to the target size (e.g. 128 pixels), and adds padding if necessary to keep the original image aspect ratio and avoid image deformation. Finally, it normalizes the images.
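The geometry behind the label conversion and the padded resize can be sketched in a few lines of plain Python. This is only an illustration under assumptions, not the code from the repository: the function names are hypothetical, boxes are assumed to be normalized to [0, 1], and the padding is assumed to be split evenly on both sides.

```python
def yolo_to_corners(cx, cy, w, h):
    """Convert a (center x, center y, width, height) box to (x1, y1, x2, y2)."""
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def letterbox_geometry(width, height, target=128):
    """Return (scale, pad_x, pad_y) needed to fit an image into a target x target square.

    The longest side is scaled to `target`; the shortest side is padded evenly
    (assumption: centered padding) so the aspect ratio is preserved.
    """
    scale = target / max(width, height)
    new_w, new_h = round(width * scale), round(height * scale)
    pad_x = (target - new_w) // 2
    pad_y = (target - new_h) // 2
    return scale, pad_x, pad_y


def box_to_letterbox(box, width, height, target=128):
    """Map a normalized corner box of the original image into the padded square."""
    scale, pad_x, pad_y = letterbox_geometry(width, height, target)
    x1, y1, x2, y2 = box
    return (
        (x1 * width * scale + pad_x) / target,
        (y1 * height * scale + pad_y) / target,
        (x2 * width * scale + pad_x) / target,
        (y2 * height * scale + pad_y) / target,
    )
```

For a 256x128 image resized to 128 pixels, the image is scaled by 0.5 and padded by 32 pixels at the top and bottom, so a box covering the whole image ends up spanning only the middle half of the square vertically.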

Optionally, this code allows for data augmentation using Albumentations. For the training, I used the following data augmentations:

- Horizontal flip

- Random brightness contrast

- Random crop from borders

- Affine transformation
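With Albumentations, a pipeline combining these four augmentations might look like the sketch below. The probabilities and ranges are illustrative assumptions, not the values used for the training in this post, and the function name is hypothetical. Boxes are assumed to be in normalized corner format, which Albumentations calls the "albumentations" format.

```python
def build_augmentations(p=0.5):
    """Build an Albumentations pipeline with the four augmentations listed above.

    All probabilities and ranges are placeholder values chosen for illustration.
    """
    import albumentations as A  # imported lazily so this file loads without the library

    return A.Compose(
        [
            A.HorizontalFlip(p=p),
            A.RandomBrightnessContrast(p=p),
            A.RandomCropFromBorders(
                crop_left=0.1, crop_right=0.1, crop_top=0.1, crop_bottom=0.1, p=p
            ),
            A.Affine(
                scale=(0.9, 1.1), translate_percent=(-0.1, 0.1), rotate=(-15, 15), p=p
            ),
        ],
        # Assumption: boxes are normalized (x_min, y_min, x_max, y_max) corners.
        bbox_params=A.BboxParams(format="albumentations", label_fields=["labels"]),
    )
```

The returned pipeline would then be called as `build_augmentations()(image=image, bboxes=boxes, labels=labels)`, which returns a dictionary with the transformed image and boxes.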

These augmentations will allow us to have a more robust, regularized model. After all these transformations and augmentations, the input data may look like the following sample:

As we can see, the preprocessed images have grey borders because of augmentation (with rotation or translation) or padding (because the original image did not have a square aspect ratio). They all contain faces, although the context might be really different depending on the image.

Important Note:

Face detection is a highly sensitive task with significant ethical and safety considerations. Bias in the dataset, such as underrepresentation or overrepresentation of certain facial characteristics, can lead to false negatives or false positives, potentially causing harm or offense. See the dedicated section about ethical considerations below.

Now that our data can be loaded and preprocessed, let’s go to the next step: building the model.

Model Building

In this section, we will build the model architecture of the original BlazeFace model, based on the original article and adapted from the BlazeFace repository containing inference code only.

The whole BlazeFace architecture is rather simple and is mostly made of what the paper’s authors call a BlazeBlock, with various parameters.

The BlazeBlock can be defined with PyTorch as follows:

As we can see from this code, a BlazeBlock is simply made of the following layers:

- One depthwise 2D convolution layer

- One batch norm 2D layer

- One 2D convolution layer

- One batch norm 2D layer
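A minimal PyTorch sketch of such a block is shown below. The four layers match the list above; the residual connection, the max pooling and channel padding on the shortcut, the final ReLU and the 5x5 default kernel are assumptions borrowed from the BlazeFace paper, and the implementation in the repository may differ.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class BlazeBlock(nn.Module):
    """Depthwise conv -> batch norm -> pointwise conv -> batch norm, plus a shortcut."""

    def __init__(self, in_channels, out_channels, kernel_size=5, stride=1):
        super().__init__()
        # The shortcut is zero-padded on the channel axis, so channels can only grow.
        assert out_channels >= in_channels
        self.channel_pad = out_channels - in_channels
        self.convs = nn.Sequential(
            # Depthwise convolution: one filter per input channel (groups=in_channels).
            nn.Conv2d(in_channels, in_channels, kernel_size, stride=stride,
                      padding=kernel_size // 2, groups=in_channels, bias=False),
            nn.BatchNorm2d(in_channels),
            # Pointwise (1x1) convolution that mixes channels.
            nn.Conv2d(in_channels, out_channels, kernel_size=1, bias=False),
            nn.BatchNorm2d(out_channels),
        )
        # Assumption from the paper: the shortcut is max-pooled when the block downsamples.
        self.pool = nn.MaxPool2d(kernel_size=stride, stride=stride) if stride > 1 else nn.Identity()
        self.act = nn.ReLU(inplace=True)

    def forward(self, x):
        residual = self.pool(x)
        if self.channel_pad > 0:
            # Zero-pad the channel dimension so the shortcut matches the conv output.
            residual = F.pad(residual, (0, 0, 0, 0, 0, self.channel_pad))
        return self.act(self.convs(x) + residual)
```

For example, `BlazeBlock(24, 48, stride=2)` applied to a tensor of shape `(2, 24, 64, 64)` produces a tensor of shape `(2, 48, 32, 32)`: the spatial size is halved and the number of channels doubled.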

N.B.: You can read the PyTorch documentation for more about these layers: Conv2D layer and BatchNorm2D layer.

This block is repeated many times with different input parameters, to go from a 128-pixel image up to a typical object detection prediction using tensor reshaping in the final stages. Feel free to have a look at the full code in the GitHub repository for more about the implementation of this architecture.

Before moving to the next section about training the model, note that there are actually two architectures:

- A 128-pixel input image architecture

- A 256-pixel input image architecture

As you can imagine, the 256-pixel architecture is slightly larger, but still lightweight and sometimes more robust. This architecture is also implemented in the provided code, so you can use it if you want.

N.B.: The original BlazeFace model not only predicts a bounding box, but also six approximate face landmarks. Since I did not have such labels, I simplified the model architecture to predict only the bounding boxes.

Now that we can build a model, let’s move on to the next step: training the model.

Model Training

For anyone familiar with PyTorch, training models such as this one is usually quite simple and straightforward, as shown in this code:

As we can see, the idea is to loop over your data for a given number of epochs, one batch at a time, and do the following:

- Get the processed data and corresponding labels

- Make the forward inference

- Compute the loss of the inference against the label

- Update the weights
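These four steps correspond to a generic PyTorch training loop along the lines of the sketch below. It is not the training script from the repository: the function name, the choice of the Adam optimizer and the default hyperparameters are assumptions, and `loss_fn` stands in for the Multibox loss mentioned earlier.

```python
import torch


def train(model, loader, loss_fn, epochs=1, lr=1e-3, device="cpu"):
    """Train `model` and return the mean loss of each epoch."""
    model.to(device)
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)  # assumption: Adam
    history = []
    for _ in range(epochs):
        model.train()
        running_loss = 0.0
        for images, targets in loader:
            # 1. Get the processed data and corresponding labels
            images, targets = images.to(device), targets.to(device)
            # 2. Make the forward inference
            predictions = model(images)
            # 3. Compute the loss of the inference against the label
            loss = loss_fn(predictions, targets)
            # 4. Update the weights
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
            running_loss += loss.item()
        history.append(running_loss / max(len(loader), 1))
    return history
```

Here `loader` would typically be a `torch.utils.data.DataLoader` wrapping the preprocessed dataset, but any sized iterable of `(images, targets)` batches works.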

For the sake of clarity, I am not getting into all the details in this post, but feel free to navigate through the code to get a better sense of the training part if needed.

After training for 100 epochs, I had the following results on the validation set:

As we can see from these results, even if the object detection is not perfect, it works quite well in most cases (the IoU threshold was probably not optimal, sometimes leading to overlapping boxes). Keep in mind that it is a very light model; it cannot exhibit the same performance as a YOLOv8, for example.

Before going to the next step about converting the model, let’s have a short discussion about ethical and safety considerations.

Ethical and Safety Considerations

Let’s go over a few points about ethics and safety, since face detection can be a very sensitive topic:

- Dataset importance and selection: This dataset is used to demonstrate face detection techniques for educational purposes. It was chosen for its relevance to the topic, but it may not fully represent the diversity needed for unbiased results.

- Bias awareness: The dataset is not claimed to be bias-free, and potential biases have not been fully mitigated. Please be aware of potential biases that can affect the accuracy and fairness of face detection models.

- Risks: The trained face detection model may reflect these biases, raising potential ethical concerns. Users should critically assess the outcomes and consider the broader implications.

To address these concerns, anyone willing to build a product on such a topic should focus on:

- Collecting diverse and representative images

- Verifying that the data is bias-free and that every category is equally represented

- Continuously evaluating the ethical implications of face detection technologies

N.B.: A useful approach to address these concerns is to examine what Google did for their own face detection and face landmarks models.

Again, the dataset used here is intended solely for educational purposes. Anyone willing to use it should exercise caution and be mindful of its limitations when interpreting results. Let’s now move to the next step with the model conversion.

Remember that our goal is to make our object detection model work in a web browser. Unfortunately, once we have a trained PyTorch model, we cannot directly use it in a web browser. We first need to convert it.

Currently, to my knowledge, the most reliable way to run a deep learning model in a web browser is by using a TFLite model with TensorFlow.js. In other words, we need to convert our PyTorch model into a TFLite model.

N.B.: Some alternative ways are emerging, such as ExecuTorch, but they do not seem to be mature enough yet for web use.

As far as I know, there is no robust, reliable way to do so directly. But there are indirect ways, by going through ONNX. ONNX (which stands for Open Neural Network Exchange) is a standard for storing and running (using ONNX Runtime) machine learning models. Conveniently, there are libraries available for conversion from torch to ONNX, as well as from ONNX to TensorFlow models.

To summarize, the conversion workflow is made of the following three steps:

- Convert from PyTorch to ONNX

- Convert from ONNX to TensorFlow

- Convert from TensorFlow to TFLite

This is exactly what the following code does:

This code can be slightly more cryptic than the previous ones, as there are some specific optimizations and parameters used to make it work properly. One can also try to go one step further and quantize the TFLite model to make it even smaller. If you are interested in doing so, you can have a look at the official documentation.

N.B.: The conversion code is highly sensitive to the versions of the libraries. To ensure a smooth conversion, I would strongly recommend using the versions specified in the requirements.txt file on GitHub.
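As a rough illustration of the three-step workflow, here is a minimal sketch that chains `torch.onnx.export`, the `onnx-tf` backend and the TensorFlow Lite converter. It is an assumption about how such a conversion can be written, not the script from the repository, which uses its own optimizations and parameters. The function name, file paths, opset version and the 3-channel input shape are all placeholders.

```python
def convert_to_tflite(model, onnx_path="model.onnx", tf_dir="model_tf",
                      tflite_path="model.tflite", input_size=128):
    """Convert a PyTorch model to TFLite through ONNX and TensorFlow."""
    # Imported lazily: these libraries are heavy and version-sensitive.
    import onnx
    import tensorflow as tf
    import torch
    from onnx_tf.backend import prepare

    # Step 1: PyTorch -> ONNX, traced with a dummy input of the expected shape.
    model.eval()
    dummy_input = torch.randn(1, 3, input_size, input_size)
    torch.onnx.export(model, dummy_input, onnx_path,
                      input_names=["input"], opset_version=12)

    # Step 2: ONNX -> TensorFlow SavedModel.
    onnx_model = onnx.load(onnx_path)
    prepare(onnx_model).export_graph(tf_dir)

    # Step 3: TensorFlow SavedModel -> TFLite flatbuffer.
    converter = tf.lite.TFLiteConverter.from_saved_model(tf_dir)
    tflite_model = converter.convert()
    with open(tflite_path, "wb") as f:
        f.write(tflite_model)
    return tflite_path
```

Each step writes an intermediate artifact to disk, which makes it easier to find out which stage fails when library versions do not match.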

On my side, after TFLite conversion, I finally have a TFLite model of only about 400kB, which is lightweight and quite acceptable for web usage. The next step is to actually test it in a web browser, and to make sure it works as expected.

On a side note, be aware that another solution is currently being developed by Google for PyTorch model conversion to TFLite format: AI Edge Torch. Unfortunately, this is quite new and I could not make it work for my use case. However, any feedback about this library is very welcome.

Now that we finally have a TFLite model, we are able to run it in a web browser using TensorFlow.js. If you are not familiar with JavaScript (since it is not usually a language used by data scientists and machine learning engineers), do not worry; all the code is provided and is rather easy to understand.

I won’t comment on all the code here, just the most relevant parts. If you look at the code on GitHub, you will see the following in the javascript folder:

- index.html: contains the home page running the whole demo

- assets: the folder containing the TFLite model that we just converted

- js: the folder containing the JavaScript code

If we take a step back, all we need to do in the JavaScript code is to loop over the frames of the camera feed (either a webcam on a computer or the front-facing camera on a mobile phone) and do the following:

- Preprocess the image: resize it to a 128-pixel image, with padding and normalization

- Compute the inference on the preprocessed image

- Postprocess the model output: apply thresholding and non-max suppression to the detections

We won’t comment on the image preprocessing since this would be redundant with the Python preprocessing, but feel free to have a look at the code. When it comes to making an inference with a TFLite model in JavaScript, it is fairly easy:

The tricky part is actually the postprocessing. As you may know, the output of an SSD object detection model is not directly usable: it does not directly give the bounding box locations. Here is the postprocessing code that I used:

In the code above, the model output is postprocessed with the following steps:

- The box locations are corrected with the anchors

- The box format is converted to get the top-left and the bottom-right corners

- Non-max suppression is applied to the boxes with the detection score, allowing the removal of all boxes below a given threshold and of those overlapping other already-existing boxes
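The same three steps can be sketched in plain Python. This is an illustration only: the linear anchor decoding with a scale of 128 follows the convention of the inference-only BlazeFace code and is an assumption about the trained model, and the thresholds are placeholder values.

```python
def decode_box(raw, anchor, scale=128.0):
    """Correct a raw prediction (dx, dy, dw, dh) with its anchor (cx, cy, w, h)
    and return normalized corners (x1, y1, x2, y2)."""
    dx, dy, dw, dh = raw
    anchor_cx, anchor_cy, anchor_w, anchor_h = anchor
    cx = dx / scale * anchor_w + anchor_cx
    cy = dy / scale * anchor_h + anchor_cy
    w = dw / scale * anchor_w
    h = dh / scale * anchor_h
    # Center format -> top-left and bottom-right corners.
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def iou(a, b):
    """Intersection over union of two corner-format boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, score_threshold=0.5, iou_threshold=0.3):
    """Return the indices of the boxes to keep, best score first."""
    candidates = sorted(
        (i for i, s in enumerate(scores) if s >= score_threshold),
        key=lambda i: scores[i],
        reverse=True,
    )
    kept = []
    for i in candidates:
        # Keep a box only if it does not overlap too much with an already kept one.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in kept):
            kept.append(i)
    return kept
```

Lowering `iou_threshold` removes more overlapping boxes, which is one way to reduce duplicate detections of the same face.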

The same postprocessing is done in Python to display the resulting bounding boxes, which may help you get a better understanding of that part.

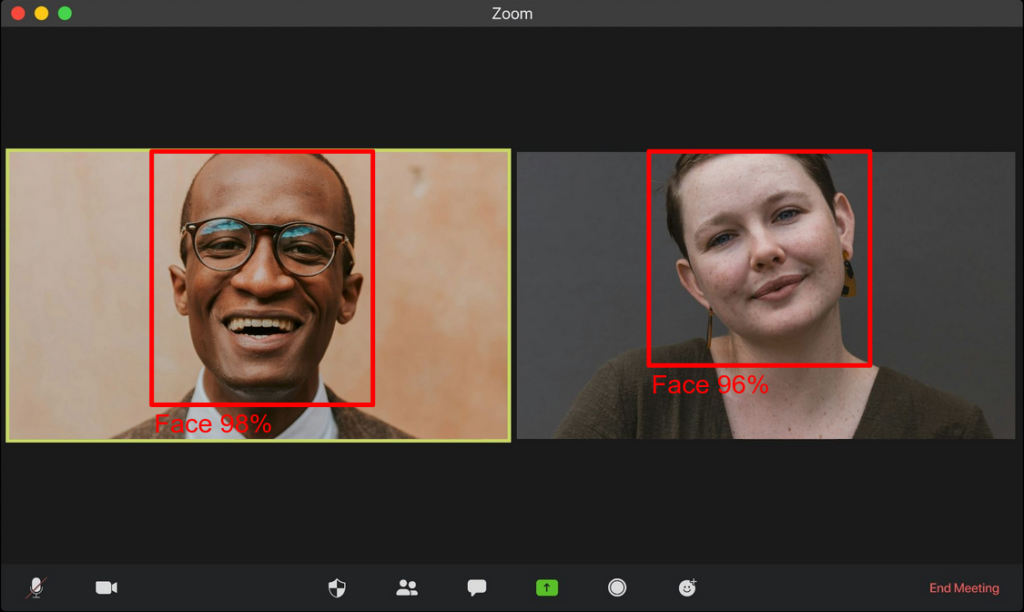

Finally, below is a screenshot of the resulting web browser demo:

As you can see, it properly detects the face in the image. I decided to use a static picture from Unsplash, but the code on GitHub allows you to run it on your webcam, so feel free to test it yourself.

Before concluding, note that if you run this code on your own computer or smartphone, depending on your device you may not reach 30 fps (on my personal laptop, which has a rather old 2017 Intel® Core™ i5-8250U, it runs at 36 fps). If that is the case, a few tricks may help you get there. The easiest one is to run the model inference only once every N frames (N to be fine-tuned depending on your application, of course). Indeed, from one frame to the next, there are usually not many changes, and the boxes can remain almost unchanged.
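The frame-skipping trick can be sketched as follows, in Python for consistency with the training code (the demo itself is written in JavaScript). The function name and the `detect` callable are hypothetical placeholders for the preprocessing, inference and postprocessing chain.

```python
def run_with_frame_skipping(frames, detect, every_n=3):
    """Run `detect` once every `every_n` frames and reuse the last boxes in between."""
    last_boxes = []
    results = []
    for index, frame in enumerate(frames):
        if index % every_n == 0:
            # Only these frames pay the cost of a model inference.
            last_boxes = detect(frame)
        results.append(last_boxes)
    return results
```

With `every_n=3`, the model runs on frames 0, 3, 6 and so on, which divides the inference cost by about three, while the displayed boxes lag by at most two frames.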

I hope you enjoyed reading this post, and thanks if you got this far. Even though doing object detection is fairly easy nowadays, doing it with limited resources can be quite challenging. Learning about BlazeFace and converting models for the web browser gives some insight into how MediaPipe was built, and opens the way to other interesting applications such as blurring backgrounds in video calls (like Google Meet or Microsoft Teams) in real time in the browser.