5. Univariate sequential encoding

It’s time to build the sequential mechanism that keeps track of user choices over time. The mechanism I devised works on two separate vectors (which in the end become one, hence univariate): a historical vector and a caching vector.

The historical vector is the one used to perform knn on the existing clusters. Once a session is concluded, we update the historical vector with the new user choices. At the same time, we adjust the existing values with a decay function that diminishes the existing weights over time. By doing so, we make sure to keep up with customer trends and give more weight to new choices rather than older ones.

Rather than updating the vector every time a user makes a choice (which is not computationally efficient and, in addition, risks letting older choices decay too quickly, as every user interaction would trigger the decay mechanism), we can store a temporary vector that is only valid for the current session. Each user interaction, converted into a vector using the tag frequency as a one-hot weight, is summed into the current cached vector.
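A minimal sketch of the session cache, assuming vectors are stored as sparse `{tag: weight}` dicts and assuming that "tag frequency as a one-hot weight" means each active tag is weighted by the inverse of its frequency in the catalog (the sample notebook may weight tags differently). `TAG_FREQUENCY`, `interaction_vector` and `SessionCache` are hypothetical names. No decay is applied while the session is open; interactions are simply summed.

```python
from collections import defaultdict

# Hypothetical catalog statistics: how many games carry each tag.
TAG_FREQUENCY = {"Fantasy": 40, "Shooter": 25, "Puzzle": 10, "Puzzle-Platformer": 4}


def interaction_vector(tags, tag_frequency):
    """One-hot encode the tags of a single interaction.

    Assumption: each active tag is weighted by 1 / frequency, so rare
    tags count more than common ones.
    """
    return {tag: 1.0 / tag_frequency[tag] for tag in tags}


class SessionCache:
    """Temporary, session-scoped vector stored as a sparse {tag: weight} dict."""

    def __init__(self):
        self.vector = defaultdict(float)

    def add(self, tags, tag_frequency):
        # Sum the interaction into the cached vector; no decay is applied here.
        for tag, weight in interaction_vector(tags, tag_frequency).items():
            self.vector[tag] += weight


cache = SessionCache()
cache.add(["Puzzle-Platformer"], TAG_FREQUENCY)
cache.add(["Fantasy"], TAG_FREQUENCY)
cache.add(["Puzzle-Platformer", "Puzzle"], TAG_FREQUENCY)
print(dict(cache.vector))
```

Because nothing decays until the session closes, three clicks inside one session are treated as equally recent.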

Once the session is closed, we retrieve the historical vector from the database, merge it with the cached vector, and apply the adjustment mechanisms (such as the decay function and pruning, as we will see later). After the historical vector has been updated, it is stored in the database, replacing the old one.
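The end-of-session update can be sketched as follows. This is an illustration, not the notebook's `update_vector`: it assumes sparse `{tag: weight}` dicts, a single multiplicative decay factor applied once per closed session, and a plain dict standing in for the database. `close_session` and `database` are hypothetical names, and pruning is left out of this sketch.

```python
def close_session(historical, cached, decay=0.8):
    """Merge a session's cached vector into the historical vector.

    Assumptions: vectors are sparse {tag: weight} dicts and the decay is a
    single multiplicative factor applied once per closed session.
    """
    # Decay every existing weight once, however long the session was.
    merged = {tag: weight * decay for tag, weight in historical.items()}
    # Add this session's choices at full weight.
    for tag, weight in cached.items():
        merged[tag] = merged.get(tag, 0.0) + weight
    return merged


# A plain dict stands in for the database (hypothetical).
database = {"user_42": {"Shooter": 1.0, "Fantasy": 1.0}}
session_cache = {"Puzzle": 1.0, "Fantasy": 0.5}

# Retrieve (empty if the user has no history yet), merge, and write back.
previous = database.get("user_42", {})
database["user_42"] = close_session(previous, session_cache, decay=0.8)
print(database["user_42"])
```

For a brand-new user, `database.get(..., {})` returns an empty vector, so the first session's choices simply become the historical vector.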

There are two reasons to follow this approach: to minimize the weight difference between older and newer interactions, and to make the entire process scalable and computationally efficient.
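To make the first reason concrete, here is the arithmetic for a choice followed by three more sessions of ten interactions each, assuming a hypothetical decay factor of 0.8:

```python
DECAY = 0.8         # hypothetical decay factor
INTERACTIONS = 10   # hypothetical number of interactions per session
SESSIONS = 3        # sessions that follow the original choice

# Decay applied once per session (cached-vector approach).
per_session = DECAY ** SESSIONS
# Decay applied on every interaction (updating the vector on each click).
per_interaction = DECAY ** (SESSIONS * INTERACTIONS)

print(f"per session:     {per_session:.3f}")      # per session:     0.512
print(f"per interaction: {per_interaction:.6f}")  # per interaction: 0.001238
```

With per-session decay the old choice keeps about half of its weight (0.8³ ≈ 0.51), while decaying on every interaction would leave it at roughly 0.1% (0.8³⁰ ≈ 0.0012), which is exactly the "decay too quickly" problem.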

6. Pruning Mechanism

The system is now complete. However, there is one additional problem: covariate encoding has a flaw, in that its base vector grows proportionally to the number of encoded tags. For example, if our database were to reach 100k tags, the vector would have the same number of dimensions.

The original covariate encoding architecture already takes this problem into account, proposing a PCA compression mechanism as a solution. However, when applied to our recommender, PCA causes issues when iteratively summing vectors, resulting in information loss. Because every user choice triggers a summation of the existing vector with a new one, this solution is not advisable.

However, if we cannot compress the vector, we can prune the dimensions with the lowest scores. The system will then run knn on the most relevant scores of the vector; this direct form of feature engineering will not negatively affect the final recommendation (or rather, not excessively).
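A minimal sketch of pruning a dense vector down to its highest-scoring dimensions, stored as a sparse `{index: weight}` dict that keeps the original tag indexes, and then checking that the ranking of candidate clusters survives. The 8-tag vocabulary, the cluster vectors and the function names are hypothetical, and a plain dot product stands in for the knn similarity.

```python
def prune_to_sparse(dense, max_dims):
    """Keep only the max_dims highest-scoring dimensions of a dense vector.

    Returns a sparse {index: weight} dict. Indexes are the original tag
    indexes, so the tag vocabulary never needs to be renumbered.
    """
    active = [(i, w) for i, w in enumerate(dense) if w != 0.0]
    active.sort(key=lambda iw: iw[1], reverse=True)
    return dict(active[:max_dims])


def dense_score(query, cluster):
    """Dot product between two dense vectors."""
    return sum(a * b for a, b in zip(query, cluster))


def sparse_score(query, cluster):
    """Dot product that only visits the active indexes of a sparse query."""
    return sum(w * cluster[i] for i, w in query.items())


# Hypothetical 8-tag vocabulary and two candidate clusters.
user_dense = [0.0, 2.5, 0.1, 0.0, 1.7, 0.05, 0.9, 0.0]
clusters = {
    "A": [0.2, 1.0, 0.3, 0.0, 0.8, 0.5, 0.1, 0.0],
    "B": [0.0, 0.1, 0.9, 0.0, 0.2, 0.9, 1.0, 0.0],
}

user_sparse = prune_to_sparse(user_dense, max_dims=3)
print(user_sparse)  # {1: 2.5, 4: 1.7, 6: 0.9}

dense_rank = sorted(clusters, key=lambda c: dense_score(user_dense, clusters[c]), reverse=True)
sparse_rank = sorted(clusters, key=lambda c: sparse_score(user_sparse, clusters[c]), reverse=True)
print(dense_rank, sparse_rank)  # ['A', 'B'] ['A', 'B']
```

In this toy case the pruned scores drop slightly (3.95 instead of 4.005 for cluster A), but the ranking is unchanged.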

By pruning our vector, we can set an arbitrary maximum number of dimensions. Without altering the tag indexes, we can start operating on sparse vectors rather than a dense one: a sparse vector is a data structure that only stores the active indexes, which lets it scale indefinitely. We can compare the recommendations obtained from a full vector (dense vector) against those obtained from a sparse vector (pruned vector).

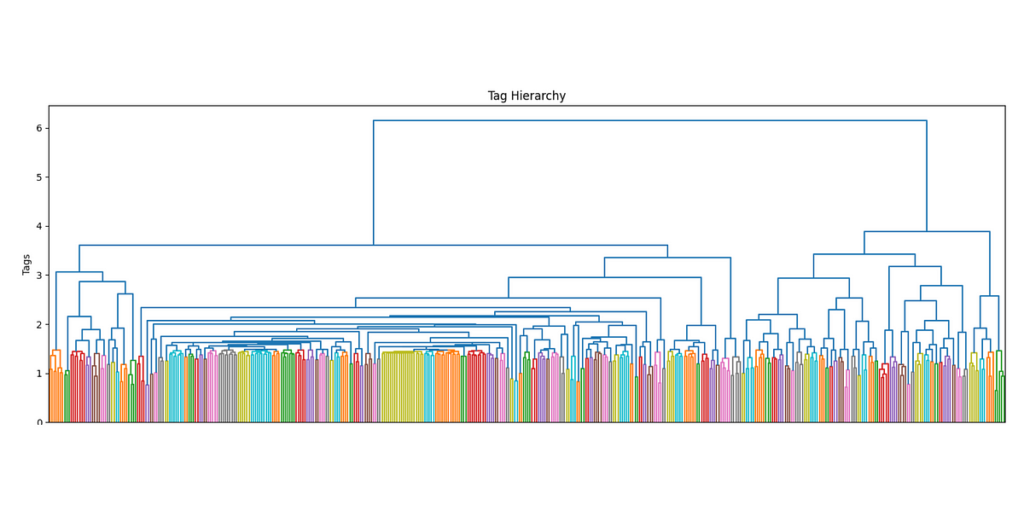

As we can see, there are minor differences, but the overall integrity of the vector has been maintained in exchange for scalability. A very intuitive alternative to this process is to perform clustering at the tag level, keeping the vector size fixed. In this case, a tag is assigned to its semantically closest tag and does not occupy a dedicated dimension of its own.
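The tag-level clustering alternative can be sketched with nearest-centroid assignment: each tag is mapped to the closest of a fixed set of centroids and shares that dimension with semantically similar tags. The 2-D embeddings and centroids below are made up for illustration; obtaining the centroids by, for example, running k-means on text embeddings of the tags is an assumption, not something this article prescribes.

```python
import math

# Hypothetical 2-D tag embeddings; real ones would come from a text-embedding
# model and have many more dimensions.
TAG_EMBEDDINGS = {
    "Fantasy": (0.9, 0.1),
    "Dark Fantasy": (0.85, 0.2),
    "Puzzle": (0.1, 0.9),
    "Puzzle-Platformer": (0.2, 0.8),
}

# Fixed set of tag clusters: the vector size is len(CENTROIDS),
# no matter how many tags the catalog grows to.
CENTROIDS = [(0.9, 0.15), (0.15, 0.85)]


def nearest_centroid(embedding):
    """Index of the closest centroid by Euclidean distance."""
    return min(range(len(CENTROIDS)), key=lambda i: math.dist(embedding, CENTROIDS[i]))


def encode(tags):
    """Fixed-size vector: each tag adds 1 to the dimension of its cluster."""
    vector = [0.0] * len(CENTROIDS)
    for tag in tags:
        vector[nearest_centroid(TAG_EMBEDDINGS[tag])] += 1.0
    return vector


print(encode(["Dark Fantasy", "Puzzle-Platformer", "Fantasy"]))  # [2.0, 1.0]
```

The trade-off is the one described above: the dimension count stays fixed, but "Fantasy" and "Dark Fantasy" can no longer be told apart.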

7. Exemplar estimation

Now that you have fully grasped the theory behind this new approach, we can compare the two approaches more clearly. In the multivariate approach, the first step was to identify the top user preferences using clustering. As we saw, this process required us to store as many vectors as there were exemplars found.

In the univariate approach, however, because covariate encoding works on a transposed version of the encoded data, we can use sections of our historical vector to store user preferences, and therefore use only a single vector for the entire process. If we use the historical vector as a query to search through the encoded tags, its top-k results from a knn search will be equivalent to the top-k preferred clusters.
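A brute-force sketch of using the historical vector as a query, under simplifying assumptions: each cluster is represented as a sparse `{tag: weight}` vector in the same tag space as the user, and cosine similarity ranks them. This does not reproduce the transposed covariate encoding itself; `CLUSTERS`, `cosine` and `top_k_clusters` are hypothetical.

```python
import heapq
import math

# Hypothetical clusters, each described as a sparse {tag: weight} vector
# in the same tag space as the user's historical vector.
CLUSTERS = {
    "puzzle-platformers": {"Puzzle-Platformer": 1.0, "Puzzle": 0.6, "2D": 0.4},
    "dark-fantasy-rpg": {"Dark Fantasy": 1.0, "Fantasy": 0.8},
    "arena-shooters": {"Shooter": 1.0, "Multiplayer": 0.7},
    "turn-based-adventure": {"Turn-Based": 1.0, "Adventure": 0.8, "2D": 0.3},
}


def cosine(a, b):
    """Cosine similarity between two sparse {tag: weight} vectors."""
    dot = sum(w * b.get(tag, 0.0) for tag, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k_clusters(historical, k):
    """Brute-force kNN: score every cluster against the query, keep the best k."""
    scores = {name: cosine(historical, vec) for name, vec in CLUSTERS.items()}
    return heapq.nlargest(k, scores, key=scores.get)


historical = {"Puzzle-Platformer": 1.2, "Puzzle": 0.8, "Fantasy": 0.5, "Adventure": 0.3}
print(top_k_clusters(historical, k=2))  # ['puzzle-platformers', 'dark-fantasy-rpg']
```

A single query vector yields several preferred clusters at once, which is what removes the need to store one vector per exemplar.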

8. Recommendation approaches

Now that we have captured more than one preference, how do we plan to recommend items? This is the major difference between the two systems. The traditional multivariate recommender uses the exemplar to recommend k items to a user. Our system, however, has assigned the customer one supercluster and the top subclusters under it (depending on our level of tag segmentation, we can increase the number of levels). We will not recommend the top k items, but the top k subclusters.
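The supercluster-then-subclusters selection can be sketched as follows, assuming each subcluster (label_1) already has a preference score and knows its parent supercluster (label_0), and assuming a supercluster's score is the sum of its children's scores. All names and numbers are hypothetical.

```python
from collections import defaultdict

# Hypothetical two-level hierarchy: subcluster (label_1) -> supercluster (label_0).
PARENT = {
    "puzzle-platformer": "puzzle",
    "logic-puzzle": "puzzle",
    "physics-puzzle": "puzzle",
    "arena-shooter": "action",
    "hack-and-slash": "action",
}


def recommend_subclusters(sub_scores, k):
    """Pick the best supercluster, then return its top-k subclusters."""
    # Assumption: a supercluster scores the sum of its subclusters' scores.
    super_scores = defaultdict(float)
    for sub, score in sub_scores.items():
        super_scores[PARENT[sub]] += score
    best_super = max(super_scores, key=super_scores.get)
    # Rank only the children of the winning supercluster.
    children = [sub for sub in sub_scores if PARENT[sub] == best_super]
    children.sort(key=sub_scores.get, reverse=True)
    return best_super, children[:k]


scores = {
    "puzzle-platformer": 0.9,
    "logic-puzzle": 0.4,
    "physics-puzzle": 0.2,
    "arena-shooter": 1.0,
    "hack-and-slash": 0.1,
}
print(recommend_subclusters(scores, k=2))  # ('puzzle', ['puzzle-platformer', 'logic-puzzle'])
```

`arena-shooter` has the highest individual score but is not returned: the user's preferences as a whole point to the puzzle supercluster, so the recommendations stay within it.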

Using groupby instead of vector search

So far, we have been using a vector to store data, but that does not mean we need to rely on vector search to produce recommendations, because it would be much slower than a SQL operation. Note that obtaining exactly the same results with vector search on the user array is indeed possible.

If you are wondering why we would switch from a vector-based system to a count-based system, it is a legitimate question. The simple answer is that this is the most faithful replica of the multivariate system (as portrayed in the reference images), but much more scalable (it can reach up to 3000 recommendations/s on 16 CPU cores using pandas). The univariate recommender was initially designed to use vector search but, as shown, there are simpler and better search algorithms.
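A count-based version of the search fits in a few lines of pandas: join the user's tag weights to a catalog of (game, tag, subcluster) rows with `DataFrame.merge`, then aggregate per subcluster with `groupby` and keep the `nlargest`. The catalog layout, the column names and `top_subclusters` are assumptions for illustration, not the notebook's `compute_recommendation`.

```python
import pandas as pd

# Hypothetical catalog: one row per (game, tag) pair, labeled with the
# subcluster (label_1) the game belongs to.
catalog = pd.DataFrame(
    {
        "game": ["g1", "g1", "g2", "g2", "g3", "g4", "g4"],
        "tag": ["Puzzle", "2D", "Puzzle-Platformer", "2D", "Fantasy", "Shooter", "Fantasy"],
        "label_1": ["logic", "logic", "platformer", "platformer", "dark-fantasy", "arena", "arena"],
    }
)

# The user's historical vector, flattened into a tag -> weight table.
user = pd.DataFrame(
    {"tag": ["Puzzle-Platformer", "Puzzle", "2D", "Fantasy"], "weight": [1.2, 0.8, 0.5, 0.3]}
)


def top_subclusters(catalog, user, k):
    """Weighted count of matching tags per subcluster; no vector search involved."""
    # Inner join keeps only catalog rows whose tag the user has a weight for.
    matched = catalog.merge(user, on="tag", how="inner")
    # Sum the matched weights per subcluster and keep the k largest.
    return matched.groupby("label_1")["weight"].sum().nlargest(k)


result = top_subclusters(catalog, user, k=2)
print(result)
```

Joins and group-wise sums are the kind of operations SQL engines and pandas execute efficiently, which is the motivation for preferring them over a vector search here.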

Let us run a full test that we can monitor. We can use the code from the sample notebook: in our simple example, the user selects at least one game labeled with the corresponding tags.

```python
# user_choices, update_vector, compute_recommendation and tag_frequency
# are defined in the sample notebook.

# If no vector exists yet, the first choices become the historical vector
historical_vector = user_choices(5, tag_lists=[['Shooter', 'Fantasy']], tag_frequency=tag_frequency, display_tags=False)

# day1
cached_vector = user_choices(3, tag_lists=[['Puzzle-Platformer'], ['Dark Fantasy'], ['Fantasy']], tag_frequency=tag_frequency, display_tags=False)
historical_vector = update_vector(historical_vector, cached_vector, 1, 0.8)

# day2
cached_vector = user_choices(3, tag_lists=[['Puzzle'], ['Puzzle-Platformer']], tag_frequency=tag_frequency, display_tags=False)
historical_vector = update_vector(historical_vector, cached_vector, 1, 0.8)

# day3
cached_vector = user_choices(3, tag_lists=[['Adventure'], ['2D', 'Turn-Based']], tag_frequency=tag_frequency, display_tags=False)
historical_vector = update_vector(historical_vector, cached_vector, 1, 0.8)

compute_recommendation(historical_vector, label_1_max=3)
```

At the end of the three sessions, these are the top 3 exemplars (label_1) extracted by our recommender:

In the notebook, you will find the option to run Monte Carlo simulations, but there is no easy way to validate them (mostly because the games are not tagged very accurately, and I noticed that most small games list too many unrelated or generic tags).

The architectures of the most popular recommender systems still do not take session history into account, but with the development of new algorithms and the increase in computing power, it is now possible to handle a higher level of complexity.

This new approach should offer a comprehensive alternative to the sequential recommender systems available on the market, but I am convinced that there is always room for improvement. To further enhance this architecture, it would be possible to switch from a clustering-based to a network-based approach.

It is important to note that this recommender system can only excel when applied to a limited number of domains, but it has the potential to shine in scenarios with scarce computational resources or extremely high demand.