Welcome to Part 2 of our NLP series. If you caught Part 1, you’ll remember that the problem we’re tackling is translating text into numbers so that we can feed it into our machine learning models or neural networks.

Previously, we explored some basic (and fairly naive) approaches to this, like Bag of Words and TF-IDF. While these methods get the job done, we also saw their limitations: mainly, they don’t capture the deeper meaning of words or the relationships between them.
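To make that limitation concrete, here is a minimal pure-Python sketch of a Bag of Words encoding over a tiny, hypothetical three-word vocabulary (the helper names `bag_of_words` and `cosine` are our own, not from any library). Every distinct word gets its own axis, so the model has no way to know that "good" is closer in meaning to "great" than to "terrible".

```python
import math
from collections import Counter


def bag_of_words(text: str, vocab: list[str]) -> list[int]:
    """Count how often each vocabulary word appears in the text."""
    counts = Counter(text.lower().split())
    return [counts[word] for word in vocab]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical toy vocabulary, chosen only for illustration.
vocab = ["good", "great", "terrible"]

good = bag_of_words("good", vocab)          # [1, 0, 0]
great = bag_of_words("great", vocab)        # [0, 1, 0]
terrible = bag_of_words("terrible", vocab)  # [0, 0, 1]

# Each word sits on its own axis, so BoW treats "good" as exactly
# as different from "great" as it is from "terrible".
print(cosine(good, great))     # 0.0
print(cosine(good, terrible))  # 0.0
```

TF-IDF only rescales these same per-word axes, so it inherits the same blind spot.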

This is where word embeddings come in. They offer a smarter way to represent text as numbers, capturing not just the words themselves but also their meaning and context.
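As a contrast to the Bag of Words picture, here is a minimal sketch of what dense embeddings buy us. The three-dimensional vectors below are made up by hand purely for illustration; real embeddings are learned from large text corpora and usually have many more dimensions. The point is only that words with related meanings can end up pointing in similar directions, which cosine similarity then picks up.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical hand-picked embeddings, NOT output from a trained model.
embeddings = {
    "good": [0.8, 0.6, 0.1],
    "great": [0.9, 0.5, 0.2],
    "terrible": [-0.7, 0.6, 0.1],
}

sim_good_great = cosine(embeddings["good"], embeddings["great"])
sim_good_terrible = cosine(embeddings["good"], embeddings["terrible"])

# Related words now share direction, so their similarity is much higher.
print(f"good vs great:    {sim_good_great:.2f}")
print(f"good vs terrible: {sim_good_terrible:.2f}")
```

Unlike the one-hot Bag of Words vectors, these dense vectors let "good" and "great" score as close neighbours while "terrible" lands far away.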

Let’s break it down with a simple analogy that’ll make this concept super intuitive.

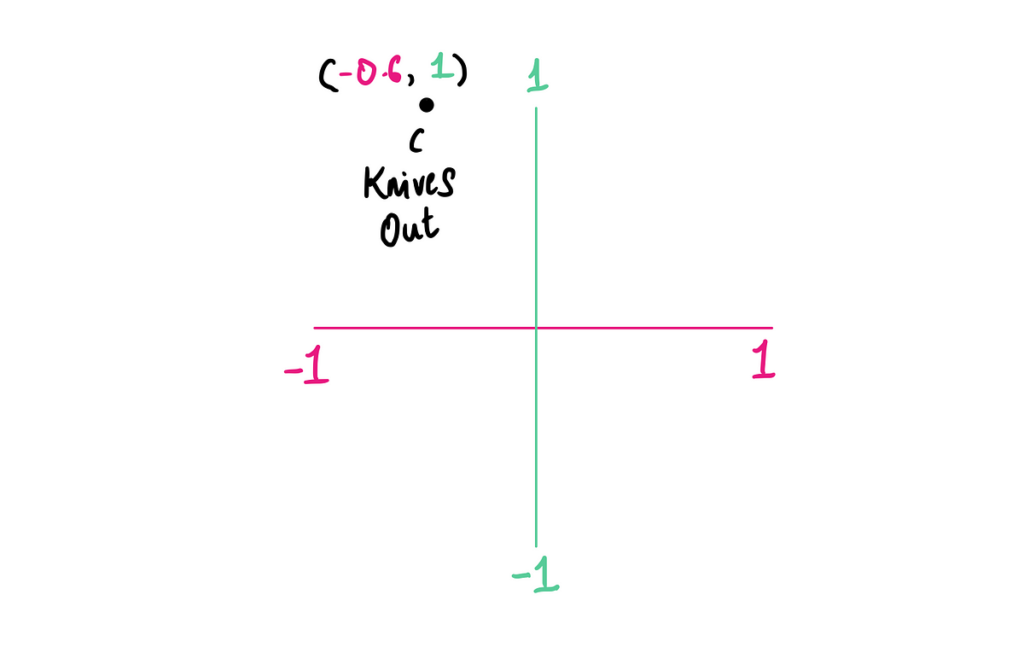

Imagine we want to represent movies as numbers. Take the movie Knives Out, for example.

We can represent a movie numerically by scoring it across different features, such…